Generative AI is no longer just a buzzword — it’s a rapidly emerging core technology that is reshaping how businesses operate, create, and compete. From writing code and generating content to powering intelligent assistants, tools like ChatGPT and Claude are helping companies unlock new efficiencies and customer experiences. In this article, we’ll explore how ChatGPT-like artificial intelligence works, where it’s being applied, and what decision-makers need to know to integrate it into real-world software solutions.

What Is Generative AI?

Generative AI refers to artificial intelligence systems that can create new content, such as text, images, videos, or code, by learning from existing data. Unlike traditional AI, which classifies or predicts based on existing data, generative models produce original material in response to prompts. These models are powered by deep neural networks, particularly the Transformer architecture, which learn patterns and structures from massive datasets. Advances in deep learning during the 2010s set the stage for today's generative AI boom, most notably the Transformer, introduced in 2017. It processes all positions of a sequence in parallel using "self-attention," capturing long-range context far more efficiently than earlier recurrent neural networks. This breakthrough has led to AI systems that can generate text remarkably similar to human writing, as well as highly realistic images.

Generative AI has evolved rapidly from early approaches, such as variational autoencoders and GANs for images, to modern large language models (LLMs) that can engage in complex conversations. The launch of ChatGPT in late 2022, a chatbot based on OpenAI’s GPT series, brought generative AI into the mainstream and demonstrated how far these models have advanced. Today, a variety of generative AI tools are available:

- OpenAI ChatGPT (GPT-4): An AI chatbot that can answer questions, write content, and hold conversations.

- OpenAI DALL·E: An image-generation model that creates pictures from text descriptions.

- Anthropic Claude: A large language model focused on helpful, harmless dialogue, developed with an emphasis on AI safety.

- Google Gemini: A multimodal model family from Google DeepMind, launched in late 2023 as a competitor to GPT-4.

These are just a few examples (others include GitHub Copilot for code and Midjourney for art). Tools like ChatGPT and DALL·E show how generative AI can produce creative outputs on demand. However, the technology's rapid rise raises new questions about originality and accuracy, because models generate content by extrapolating from patterns in their training data rather than retrieving verified facts. Nevertheless, because of its potential to automate content creation and enhance human creativity, generative AI is now a strategic priority across industries.

How Large Language Models Like GPT-4 Work

To understand how artificial intelligence works in the context of large language models, it helps to look at how a system like GPT-4 operates. GPT-4 is a generative pre-trained transformer: a very large neural network trained on diverse text, including publicly available internet data and licensed data. OpenAI has not disclosed its exact size, but models in this class have billions of parameters or more.

During pre-training, the model ingests enormous amounts of text, including web pages, books, articles, and code, and learns to predict the next word (or "token") in a sequence. This is self-supervised learning: the training labels come from the text itself. The model sees the beginning of a passage and predicts what comes next, and its internal weights are adjusted to make the actual next token more likely. Repeated across billions of examples drawn from terabytes of data, this simple exercise leads the model to statistically encode patterns of grammar, facts, and reasoning. The result is a neural network that can generate coherent text and solve problems by drawing on the vast information it absorbed.
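To make the self-supervised idea concrete, here is a minimal sketch in Python showing how raw text yields its own training examples. The function name `make_training_pairs` and the whitespace-split "tokens" are illustrative assumptions. Real pipelines use a subword tokenizer and far longer context windows.

```python
def make_training_pairs(tokens, context_size):
    """Turn a token sequence into (context, next_token) training examples.

    No human labeling is needed: each target is simply the token that
    actually follows its context in the text (self-supervised learning).
    """
    pairs = []
    for i in range(1, len(tokens)):
        # Use up to `context_size` preceding tokens as the model's input.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


tokens = "the cat sat on the mat".split()
for context, target in make_training_pairs(tokens, context_size=3):
    print(context, "->", target)
```

During training, the model's prediction for each context is compared with the real target, and the error drives the weight update.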

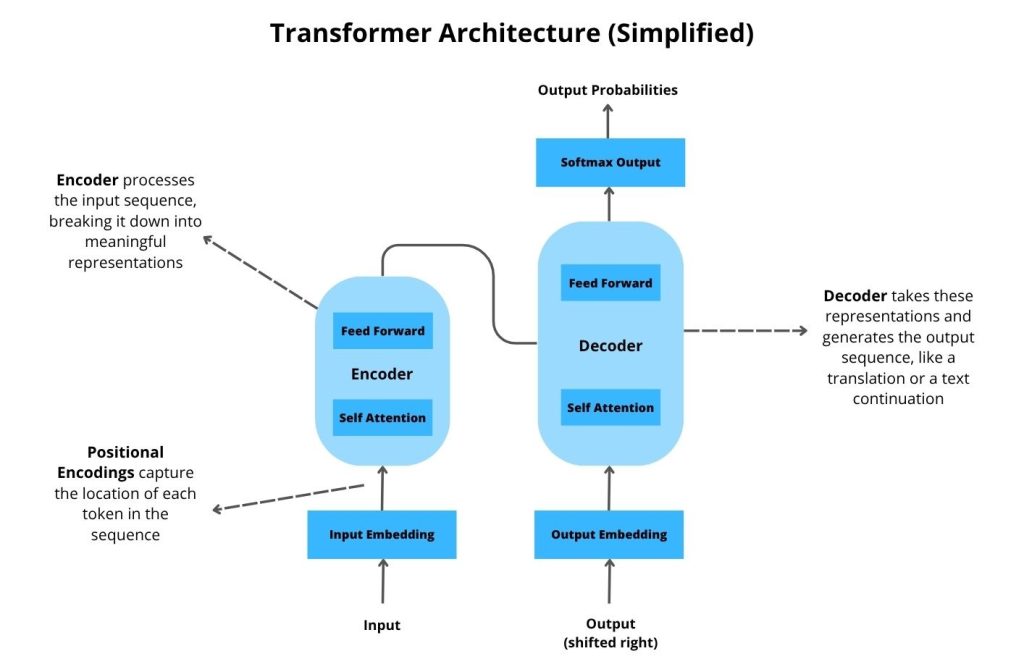

GPT-4 and other large language models (LLMs) are built on the Transformer architecture, which processes text in parallel using a mechanism called self-attention. Unlike older RNNs, which read text one word at a time, Transformers attend to all words in the context at once, learning which terms are related or important to each other. This lets the model capture long-range dependencies in language, such as linking a pronoun to a noun mentioned several sentences earlier, and handle much longer inputs. The original GPT-4 accepted 8,192 tokens of context, and a GPT-4-32K variant extended this to 32,768 tokens. Anthropic's Claude 2 offered a 100,000-token context window. In practical terms, a larger context lets the AI consider more text or instructions at once, so it can process lengthy documents or conversations.
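The self-attention computation described above can be sketched in a few lines of plain Python. This is a simplified, single-head illustration. It feeds the input vectors in directly as queries, keys, and values, whereas real Transformers compute them with learned projection matrices, use many attention heads, and (in GPT-style models) apply a causal mask so each token attends only to earlier tokens.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence.

    Every position attends to every position at once: its output is a
    weighted average of all value vectors, weighted by query-key similarity.
    """
    d_k = len(keys[0])
    dim = len(values[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs


# Three toy 2-dimensional token vectors, used directly as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(x, x, x):
    print([round(val, 3) for val in row])
```

Because every output row depends on every input row, the loop over `queries` has no sequential dependency, which is what lets hardware compute all positions in parallel.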

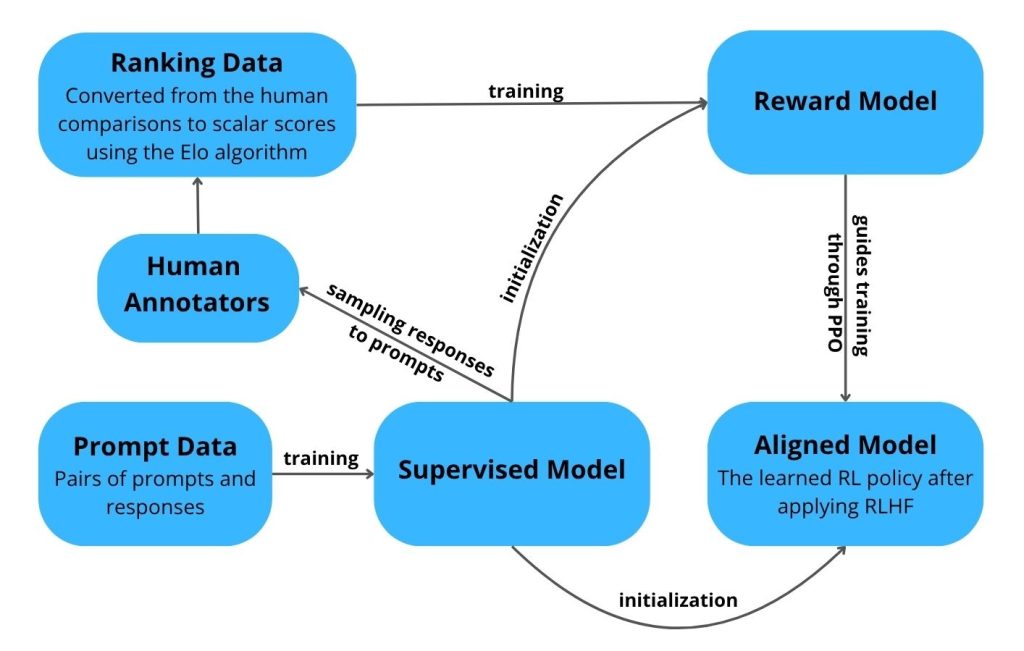

Before deployment, models like GPT-4 are fine-tuned to make them more useful and safe. One part of this process is instruction tuning, in which the model is trained on examples of following human instructions. Another crucial step is reinforcement learning from human feedback (RLHF). The model generates several answers, human reviewers rank them, and those rankings train a reward model that then guides further optimization toward more helpful and accurate responses. This is how ChatGPT became adept at dialogue: it learned from numerous demonstrations and corrections. Fine-tuning aligns the AI with user expectations (and company policies), reducing the model's tendency to produce irrelevant or harmful outputs.
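The reward-model step of RLHF is commonly trained with a pairwise loss over reviewer rankings. OpenAI's InstructGPT work, for example, used this formulation. Below is a minimal sketch of that loss, assuming a reward model has already produced a scalar score for each answer. The function name is hypothetical, and the subsequent reinforcement-learning stage that optimizes the chatbot against the reward model is not shown.

```python
import math


def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Pairwise (Bradley-Terry style) loss for training a reward model.

    Computes -log(sigmoid(reward_chosen - reward_rejected)): near zero when the
    answer reviewers preferred already scores much higher, large when it scores lower.
    """
    diff = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-diff)) for large |diff|.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# Hypothetical reward-model scores for two answers to the same prompt.
print(pairwise_preference_loss(2.0, 0.5))  # preferred answer ranked higher: small loss
print(pairwise_preference_loss(0.5, 2.0))  # preferred answer ranked lower: larger loss
```

Minimizing this loss over many ranked pairs teaches the reward model to score answers the way reviewers would.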

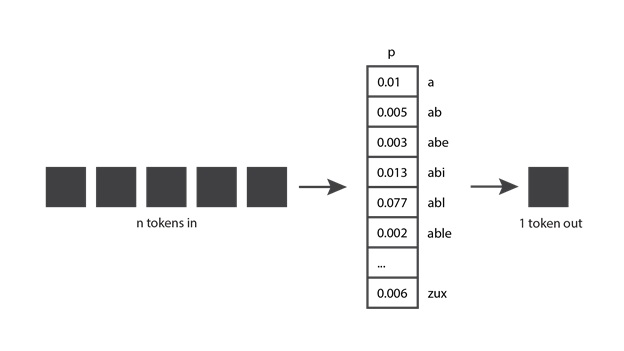

Understanding how an LLM represents text internally is also important. Before text is fed into the network, it is broken into small pieces called tokens (whole words or subword fragments), each mapped to an integer ID. The model converts these IDs into numerical vectors and passes them through dozens of neural network layers, using attention at each layer to weigh the relevant context.
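The tokenization step can be illustrated with a toy greedy longest-match tokenizer. This is a sketch under loud assumptions: the vocabulary below is hypothetical and tiny, while production tokenizers learn vocabularies of tens of thousands of subwords (for example with byte-pair encoding) and handle unknown text at the byte level rather than with an `<unk>` token.

```python
# Hypothetical toy vocabulary mapping subword strings to integer IDs.
VOCAB = {"<unk>": 0, "token": 1, "iz": 2, "ation": 3, "un": 4, "believ": 5, "able": 6, " ": 7}


def tokenize(text, vocab=VOCAB):
    """Greedy longest-match subword tokenization into integer IDs."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest vocabulary entry that matches at position i.
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in vocab:
                ids.append(vocab[piece])
                i = end
                break
        else:
            # No entry matches: emit the unknown ID and skip one character.
            ids.append(vocab["<unk>"])
            i += 1
    return ids


print(tokenize("tokenization unbelievable"))  # [1, 2, 3, 7, 4, 5, 6]
```

Each resulting ID then indexes a row of the model's learned embedding table to produce the vector that enters the network.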

When generating a response, the model works one token at a time. It outputs a probability distribution over possible next tokens, selects one (either the most probable token or one sampled from the distribution), and appends it to the sequence. It then repeats the process, now conditioning on the extended sequence, until it produces an end-of-sequence token or reaches a length limit.
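The generation loop described above can be sketched with a hypothetical toy "model". Here a lookup table stands in for the neural network, and the names `TOY_MODEL` and `next_token_distribution` are illustrative assumptions. A real LLM computes the distribution from the entire sequence, not just the last token.

```python
# Hypothetical toy "model": next-token probabilities keyed by the last token.
TOY_MODEL = {
    "<start>": {"AI": 0.6, "The": 0.4},
    "AI": {"helps": 0.7, "<end>": 0.3},
    "helps": {"teams": 0.8, "<end>": 0.2},
    "teams": {"<end>": 1.0},
    "The": {"<end>": 1.0},
}


def next_token_distribution(tokens):
    # A real LLM conditions on the whole sequence; this toy looks only at the last token.
    return TOY_MODEL.get(tokens[-1], {"<end>": 1.0})


def generate_greedy(max_tokens=10):
    """Autoregressive decoding: repeatedly pick the most probable next token."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        probs = next_token_distribution(tokens)
        best = max(probs, key=probs.get)
        if best == "<end>":  # stop at the end-of-sequence token
            break
        tokens.append(best)
    return tokens[1:]


print(generate_greedy())  # ['AI', 'helps', 'teams']
```

The `max_tokens` cap plays the role of the length limit mentioned above.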

Notably, the model doesn’t “decide” on an entire sentence at once. It's like autocomplete on steroids, guided by extensive statistical knowledge. Because it is probabilistic, responses can vary with each run (there isn’t a single fixed answer to any prompt), though the overall accuracy and coherence are remarkably high with enough training. For example, GPT-4 has demonstrated superior performance on a range of knowledge and reasoning benchmarks compared to its predecessor, GPT-3.5.
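The run-to-run variation comes from sampling the next token rather than always taking the top one. A common control for this is temperature, sketched below under the assumption that the model exposes raw scores (logits) for each candidate token. The function name and the logits are hypothetical.

```python
import math
import random


def sample_with_temperature(logits, temperature=1.0, rng=random):
    """Sample a token index from raw model scores (logits).

    Lower temperature sharpens the distribution toward the top token;
    higher temperature flattens it, producing more varied output.
    """
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: always the top-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(x - m) for x in scaled]  # unnormalized softmax weights
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed for a reproducible demo
logits = [2.0, 1.0, 0.1]
print([sample_with_temperature(logits, 1.0, rng) for _ in range(10)])
```

Setting the temperature close to zero makes outputs nearly deterministic, which is often preferred for factual or structured tasks.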

In summary, large generative models learn language and knowledge structures from big data and then generate new content by predicting contextual tokens.

GPT-4's fluent, almost human-like writing ability stems from the transformer architecture and its enormous scale. However, GPT-4 does not truly "understand" in a human sense. It lacks true reasoning or awareness and instead relies on pattern recognition. This is why these models sometimes hallucinate, producing plausible-sounding but incorrect statements despite their impressive overall performance. With thoughtful fine-tuning and human feedback, developers can harness the power of LLMs while mitigating their weaknesses.

Real-World Applications

Generative AI is not just a lab curiosity – it’s being applied across industries to boost productivity and unlock new capabilities. Here are some major real-world applications of generative AI and how they add value:

Software Development - AI Coding Assistants: Developers use tools like GitHub Copilot (originally powered by OpenAI Codex) to write code more quickly. Copilot suggests code snippets or entire functions within the IDE. Studies have shown that programmers complete tasks much faster with AI assistance: in one GitHub experiment, developers using Copilot finished a coding task 55% faster than those who did not use it. These gains translate into significant efficiency in software projects. Beyond coding, generative AI can generate software documentation, write unit tests, and convert legacy code from one language to another, thereby accelerating the development lifecycle.

Customer Support - AI Chatbots and Agents: Generative AI tools like ChatGPT are transforming customer service by handling routine inquiries through chat or voice. Companies deploy these chatbots on their websites, on messaging apps, and in their call centers to provide instant answers and troubleshoot common issues. This improves response times and lets human agents focus on more complex cases. Modern generative AI chatbots can resolve a large portion of customer requests without human intervention. For example, Tidio reports that its AI assistant, Lyro, can automate up to 70% of customer requests. By deflecting repetitive queries, businesses reduce support costs, and customers receive 24/7 assistance. Gartner has predicted that by 2025, 80% of customer service organizations will be applying generative AI in some form, whether for front-line bots or agent support (like summarizing customer history during a call).

Marketing & Content Creation - Generating Copy and Media: Generative AI is a game-changer for producing written and visual content at scale. Tools like Jasper and Copy.ai can generate marketing copy, product descriptions, blog posts, and social media content from a brief prompt. These tools help content teams overcome writer's block and produce more material tailored to each audience segment. Brands also use image generators (e.g., DALL·E or Stable Diffusion) to create custom visuals from text prompts, which is useful for ads, design prototypes, and creative campaigns. The ROI can be significant: Jasper reports that its platform has helped companies create content 6 to 10 times faster while maintaining their brand voice. For example, a marketing agency used Jasper to write over 40 client blog posts in six months, a feat that "wouldn't have been possible" without generative AI. Automating content generation allows businesses to execute campaigns faster and engage customers with personalized messaging that would be impractical to create manually at scale.

Education - Personalized Learning and Tutoring: Educators and ed-tech firms are exploring the use of generative AI to provide students with personalized tutoring and content. ChatGPT and similar AIs can act as virtual tutors, explaining concepts in different ways until students understand them, or creating practice questions and flashcards tailored to each student's progress. For instance, Khan Academy introduced "Khanmigo," a GPT-4-powered assistant that helps students with math problems by asking guided questions instead of providing answers. Generative AI can also simplify complex texts for different reading levels, generate examples and analogies, and role-play as an examiner to help students prepare for exams. Although AI tutors cannot replace human teachers, they can provide on-demand assistance and individualized attention to every learner. Early pilots suggest that students appreciate the immediate feedback and the ability to ask AI "dumb questions" without fear, which could improve learning outcomes. In higher education, generative AI is used to summarize research papers and translate materials, improving accessibility.

Healthcare - Medical Documentation and Insights: Generative AI assists with healthcare tasks such as summarizing patient records, drafting clinical reports, and suggesting treatment plans (with human oversight). AI scribes can transcribe and organize doctor-patient conversations into structured notes, saving doctors significant time spent on documentation. Models like GPT-4 have been tested for suggesting differential diagnoses and answering medical questions, essentially functioning as medical references that physicians can consult. Microsoft and Epic Systems, for instance, are integrating GPT-based tools into electronic health record systems to help draft responses to patient messages and prepare chart notes for review. Additionally, generative AI is used in medical research to generate hypotheses and analyze scientific literature. For example, Elicit.org uses an LLM to summarize academic papers. Caution is paramount in this field due to accuracy and liability concerns, but early applications show promise in reducing administrative burdens and providing clinicians with quick insights. There are even patient-facing applications: some mental health apps use generative AI for cognitive behavioral therapy exercises or health chatbots (with clear disclaimers that they are not human professionals). As trust and regulation evolve over time, we will likely see more AI copilots assisting healthcare workers, improving efficiency, and enabling a greater focus on patient care.

Security & Compliance Concerns

Although generative AI creates value, it also introduces security, ethical, and compliance risks that companies must address. Some key concerns include:

Hallucinations (Incorrect Outputs)

Generative models can confidently produce information that is factually incorrect or entirely fabricated. For example, the AI might cite nonexistent sources or laws, such as a seemingly legitimate medical citation that is actually made up, or provide dangerous misinformation, all while sounding plausible.

These hallucinations occur because the AI is a probability engine without a built-in truth gauge - it fills in gaps with whatever seems statistically likely. In critical domains such as law and medicine, these errors can have serious consequences. Mitigation strategies include human review of AI outputs, fact-verification tools, and constraining the model to a knowledge base via techniques like retrieval-augmented generation.

Model providers are working to reduce hallucinations. For instance, OpenAI reports that GPT-4 hallucinates less than GPT-3.5 on its internal factuality evaluations, partly as a result of post-training with human feedback. Anthropic trains Claude with a "constitutional AI" approach intended to minimize false or harmful statements.

Bias and Fairness

Generative AI systems learn from vast amounts of internet data, which unfortunately includes societal biases, stereotypes, and unfair representations. Consequently, these models may exhibit bias in their outputs. For instance, they may generate responses of differing quality for different demographic groups or use biased language. There have been cases of AI image generators producing sexist or racist images because of skewed training data. Without careful tuning, an AI might reinforce stereotypes (e.g., making gendered assumptions about occupations or describing certain groups unfavorably).

Businesses must be aware of these biases because deploying a biased AI could lead to public relations problems or even legal liability. Techniques to address this include curating training data, detecting and correcting algorithmic bias, and including guidelines in prompts to promote fairness. Some providers, like Anthropic, explicitly incorporate ethical principles into the model's training to mitigate bias. Furthermore, AI ethics reviews and testing are recommended before launching any customer-facing AI: just as employees and published content are vetted for bias, AI systems should be audited.

Data Privacy and Leakage

A significant compliance concern is how generative AI handles sensitive data. If users input proprietary or personal data into an AI service, that data could be exposed or used in unintended ways. There are two angles here: outbound leakage and inbound data usage.

- Outbound: There’s a risk the model could inadvertently reveal confidential information from its training set or from data provided earlier. For example, an AI trained on internal code might output some of that code to an unrelated user. This is why many firms prohibit pasting secret source code into ChatGPT.

- Inbound: If you send customer data to a third-party AI API, it must be handled in accordance with privacy laws such as GDPR. This issue came to a head when Italy’s data regulator temporarily banned ChatGPT for privacy compliance issues and accused OpenAI of unlawfully processing personal data without consent. OpenAI adjusted its policies, allowing data opt-outs and adding age controls, to get reinstated. However, this highlighted the regulatory scrutiny on AI.

There have also been real instances of data leaks. For example, a ChatGPT bug in March 2023 briefly allowed some users to see the titles of other users' conversations. Separately, some Samsung employees reportedly pasted confidential material, including source code, into ChatGPT, which led the company to restrict internal use.

To mitigate privacy risks, organizations adopt clear AI usage policies (e.g., prohibiting the posting of confidential information in public chatbots), select providers with robust privacy policies (many of which offer the option to opt out of data retention), and implement encryption. Some organizations turn to self-hosted models, such as Meta's openly available LLaMA, to maintain the highest level of control by keeping all data in-house. On the provider side, leaders like OpenAI and Anthropic have introduced business plans that do not involve training on client data. They also offer data deletion options to comply with regulations.

Model Security and Abuse

Generative AI can be misused by malicious actors, which is a security concern for society and companies. For example, attackers could use AI to generate convincing phishing emails, malware code, or disinformation on a large scale.

There is also a risk of prompt-based attacks on the model itself, such as prompt injection, where a user provides input that causes the AI to ignore its safety instructions. If a company's internal chatbot is not configured securely, an outside user might manipulate it into revealing information or behaving maliciously.

Mitigating these risks requires implementing AI-specific security measures, such as robust prompt filtering, user authentication, rate limiting for AI endpoints, and monitoring AI outputs for potential misuse. Companies deploying generative AI should stay updated on emerging threats. For example, research shows that you can sometimes recover parts of the training data by systematically querying an AI. Privacy researchers are concerned about extracting memorized personal data. However, differential privacy techniques and careful training can reduce that leakage.
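Rate limiting for AI endpoints is often implemented as a token bucket. The sketch below is a minimal single-process version under stated assumptions. The class name and parameters are illustrative, and a production deployment would typically keep one bucket per user or API key in shared storage.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: bursts up to `capacity`, refilled at `rate` per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Simulated clock so the example is deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=2, rate=1.0, clock=lambda: fake_now[0])
print(bucket.allow(), bucket.allow(), bucket.allow())  # True True False
fake_now[0] = 1.0
print(bucket.allow())  # True
```

Requests for which `allow()` returns `False` would typically be rejected with an HTTP 429 response, which also slows automated abuse such as bulk phishing generation or training-data extraction attempts.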

Intellectual Property (IP) and Plagiarism

Since generative models are trained on vast amounts of data, much of which is scraped without explicit permission, there are legal questions about the outputs.

For example, does using an image generated by a model trained on millions of copyrighted images violate those copyrights? Some artists and authors argue that it does. There are ongoing lawsuits claiming that AI models infringe on intellectual property (IP) rights by reproducing styles or code from their training sets. Similarly, if an AI writes a song "in the style of" a famous artist, who owns the copyright to the output?

Currently, purely AI-created works are not copyrightable in the US because they lack human authorship. However, if there is human creative input, the issue becomes more complex. Companies need to tread carefully, for example, by filtering training data for licensing and honoring content owner requests. OpenAI and others have started allowing artists to opt out of training. Companies may also need to restrict commercial use of outputs in certain cases.

Regarding plagiarism: AI can accidentally produce chunks of text from the training data. There have been cases of code generation that reproduced licensed code verbatim. Using such outputs may violate licenses. To be safe, have human reviewers run code or text outputs through similarity checks if intellectual property is a concern.
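A basic similarity check can compare word n-grams between an AI output and known reference texts. The sketch below uses Jaccard overlap of 5-word sequences. The function names, `n=5`, and the `0.5` threshold are illustrative assumptions. Commercial plagiarism and license-scanning tools use fingerprinting over large indexes and are far more robust.

```python
def word_ngrams(text, n=5):
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard_similarity(a, b, n=5):
    """Share of word n-grams two texts have in common (0.0 = none, 1.0 = identical sets)."""
    grams_a, grams_b = word_ngrams(a, n), word_ngrams(b, n)
    if not grams_a or not grams_b:
        return 0.0
    return len(grams_a & grams_b) / len(grams_a | grams_b)


def flag_possible_copies(output, references, threshold=0.5, n=5):
    # Return the reference texts whose overlap with the AI output meets the threshold.
    return [ref for ref in references if jaccard_similarity(output, ref, n) >= threshold]


licensed = "this function computes the checksum of the input buffer using a rolling hash"
generated = "this function computes the checksum of the input buffer using a rolling hash quickly"
print(round(jaccard_similarity(generated, licensed), 2))  # 0.9
print(flag_possible_copies(generated, [licensed]))
```

Flagged outputs would then go to a human reviewer rather than being rejected automatically.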

To tackle these issues, a combination of policy, process, and technology is needed. Frameworks for AI governance and compliance are emerging. Many firms are establishing AI ethics committees or usage guidelines to ensure responsible deployment. Technically, companies implement content filters to detect hate speech and personal data coming from the AI, as well as watermarking and auditing of AI outputs. Improvements are also being made by model providers themselves — for example, OpenAI’s latest models are less likely to produce disallowed content, and Anthropic’s Claude has an embedded “constitution” of rules. Researchers are also developing tools that verify AI outputs by cross-checking facts or tracing the source of a statement. This is a challenging problem, but progress is being made.

In summary, although generative AI offers significant opportunities, a proactive approach to security and compliance is essential for its adoption. Recognizing risks such as hallucinations, bias, data leakage, and legal uncertainties allows organizations to implement controls to mitigate these risks. Thorough testing, a gradual rollout, and oversight are prudent; treat the AI like a junior employee whose work requires review. When implemented correctly, these risks can be managed to an acceptable level, allowing the benefits to outweigh the drawbacks.

Integration Challenges

Beyond safety and ethics concerns, companies face practical challenges when integrating generative AI into production systems. Some of the major hurdles include:

Cost of Implementation

Deploying advanced AI can be expensive. Training GPT-3- or GPT-4-sized models took millions of dollars in computing power. Fortunately, most businesses will use pre-trained models via an API or open-source releases. Even then, usage costs add up: making thousands of GPT-4 API queries per day can produce a significant monthly bill.

Many companies underestimate the ongoing expense of inference, which requires powerful GPUs that must be rented or purchased. There are also engineering costs to integrate the AI and maintain that integration. To control costs, companies should pilot with smaller models or rate limits and carefully calculate ROI - sometimes a slightly less accurate open-source model running on your own servers might be cheaper in the long run than a costly per-call API.
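A back-of-the-envelope ROI comparison can start from simple arithmetic like the sketch below. All prices, volumes, and token counts are illustrative assumptions, not quotes from any vendor. The self-hosted figure covers GPU rental only and ignores engineering and operations effort. It also assumes a single GPU can serve the load, which depends on model size and traffic.

```python
def monthly_api_cost(queries_per_day, input_tokens, output_tokens,
                     price_in_per_1k, price_out_per_1k, days=30):
    """Estimate monthly spend for an LLM API billed per 1,000 tokens."""
    per_query = (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k
    return queries_per_day * days * per_query


def self_hosted_monthly_cost(gpu_hourly_rate, num_gpus=1, hours=24 * 30):
    """GPU rental cost only; excludes engineering, storage, and operations effort."""
    return gpu_hourly_rate * num_gpus * hours


# Illustrative prices and volumes (assumptions); check your provider's current rates.
api = monthly_api_cost(5_000, input_tokens=1_500, output_tokens=500,
                       price_in_per_1k=0.03, price_out_per_1k=0.06)
gpu = self_hosted_monthly_cost(gpu_hourly_rate=2.00)
print(f"API: ${api:,.2f}/month, self-hosted GPU: ${gpu:,.2f}/month")
```

With these assumed inputs, the API comes to about $11,250 per month against $1,440 for one rented GPU. A real comparison must also weigh output quality, throughput, and staff time.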

Additionally, vendors are aware of this challenge, and we are seeing pricing competition. Open-source alternatives are driving down the cost of generative AI. For example, hosting a LLaMA-2 13B model on one cloud GPU might cost only a few dollars per hour. Cloud providers also offer discounts or fixed subscriptions for high-volume AI use. Nevertheless, budgeting for AI is now as important as budgeting for cloud storage or bandwidth.

Infrastructure & Scalability

Implementing generative AI on a large scale may necessitate new infrastructure and skills. These models often require GPU acceleration or specialized AI hardware to run efficiently. Many IT environments are not initially set up for GPU workloads. Companies may need to invest in new servers with GPUs or use cloud GPU instances, both of which complicate deployment.

Additionally, scaling is an issue; supporting thousands of concurrent AI queries with low latency is challenging. Organizations must design load-balancing systems and may need to use model compression or distillation techniques to handle real-time demands.

Furthermore, integrating AI into existing software may require refactoring workflows. For example, customer chats may first be routed to an AI, and then to a human. Infrastructure monitoring is also important, such as tracking model response times and the uptime of the external API. Some opt for a hybrid approach: running smaller models on-premises for fast responses and sending only heavy queries to a cloud API.
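The hybrid approach can be expressed as a small routing function. This sketch is hypothetical throughout: the function names, the 2,000-token cutoff, and the caller-supplied `needs_deep_reasoning` flag are assumptions. The roughly-4-characters-per-token estimate is only a common rule of thumb for English text.

```python
def estimate_tokens(text):
    # Rough rule of thumb (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


def route_query(prompt, needs_deep_reasoning=False, local_token_limit=2000):
    """Pick a backend for a request in a hybrid deployment.

    Short, routine prompts go to a small on-premises model for low latency;
    long or complex ones go to a larger cloud-hosted model.
    """
    if needs_deep_reasoning or estimate_tokens(prompt) > local_token_limit:
        return "cloud"
    return "local"


print(route_query("What are your opening hours?"))  # local
print(route_query("Draft a risk analysis of this contract.", needs_deep_reasoning=True))  # cloud
```

In practice, the routing signal might come from a lightweight classifier or from the product feature making the request.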

Fortunately, cloud platforms are rapidly introducing managed AI services to reduce this friction. Azure, AWS, and GCP all offer managed model endpoints. Nonetheless, the initial setup poses a challenge. In a recent survey, many firms cited a lack of in-house infrastructure and technological integration as barriers to AI adoption.

In short, companies must assess whether their technology stack can handle generative AI and plan for necessary upgrades, from data pipelines to DevOps for AI.

Data Preparation and Integration

“Garbage in, garbage out” applies to AI as well. To obtain useful results, it is often necessary to integrate the AI with company-specific data, such as product documentation for a support bot or proprietary financial data for an AI analyst. Preparing this data can be a big task, including cleaning it, formatting it into prompts, and maintaining updates.

Some integrations use a technique called RAG (retrieval-augmented generation), in which the AI is given relevant documents from a knowledge base to ground its answers. Implementing this requires setting up a vector database, indexing documents, and connecting it with the LLM.
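The RAG flow of indexing documents, retrieving the most relevant ones, and placing them in the prompt can be sketched without external services. In the sketch below, a bag-of-words vector stands in for a real embedding model and an in-memory list stands in for a vector database. Both substitutions, along with all class and function names, are illustrative assumptions. The final prompt would then be sent to the LLM.

```python
import math
import re
from collections import Counter


def embed(text):
    # Stand-in for a real embedding model: a bag-of-words term-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryIndex:
    """Toy stand-in for a vector database holding (vector, document) pairs."""

    def __init__(self):
        self.items = []

    def add(self, doc):
        self.items.append((embed(doc), doc))

    def search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [doc for _, doc in ranked[:k]]


def build_grounded_prompt(question, index, k=2):
    # Put the retrieved passages in the prompt and tell the model to stay within them.
    context = "\n".join(f"- {doc}" for doc in index.search(question, k))
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


index = InMemoryIndex()
for doc in [
    "Refunds are available within 30 days of purchase.",
    "Our office is open 9am to 5pm on weekdays.",
    "Premium plans include priority support.",
]:
    index.add(doc)

print(build_grounded_prompt("How many days do I have to request refunds?", index, k=1))
```

Keeping the index up to date as policies or inventory change is the data-engineering work described next.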

Ensuring the AI always has the latest data (e.g., new policies or inventory changes) requires data engineering work. Additionally, if a company wants to fine-tune a model on its data, it needs a dataset of Q&A pairs or examples. This dataset may not exist, so it would have to be created or manually labeled.

All of this adds to the project's timeline and complexity. Companies often underestimate the effort required to make an AI system truly "knowledgeable" about their unique business. It won't magically know internal information unless you provide it. Thus, knowledge integration is a key challenge: bridging the gap between general AI capability and the company’s specific context.

Security and Access Control

Integrating AI into enterprise systems raises access-control questions: who is allowed to use the system, and what can they see and do through it? If an AI system is connected to internal data sources, the integration must ensure that it doesn't reveal data to unauthorized users. This requires building permission layers or filters on the AI's outputs.

This is a new kind of security concern. For instance, it is necessary to prevent an employee in department A from querying the AI about department B’s confidential data. One solution is to pass user IDs to the back-end retrieval system so that it only retrieves documents the user is permitted to access.
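The permission-aware retrieval idea can be sketched as follows. The group directory, document structure, and word-overlap ranking are hypothetical placeholders for an identity provider and a real search backend. The key design choice is that filtering happens before ranking, so restricted text never reaches the model.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    text: str
    allowed_groups: set = field(default_factory=set)


# Hypothetical user-to-group mapping; in practice it would come from your identity provider.
USER_GROUPS = {"alice": {"finance"}, "bob": {"engineering"}}


def retrieve_for_user(user_id, query, documents):
    """Return documents relevant to `query` that `user_id` is allowed to read.

    Permissions are applied before ranking, so text the user may not see
    never reaches the model's prompt.
    """
    groups = USER_GROUPS.get(user_id, set())
    permitted = [d for d in documents if d.allowed_groups & groups]
    query_words = set(query.lower().split())
    # Naive relevance score: number of shared words (stand-in for vector search).
    ranked = sorted(permitted, key=lambda d: len(query_words & set(d.text.lower().split())), reverse=True)
    return [d.text for d in ranked]


docs = [
    Document("Q3 budget forecast for finance", {"finance"}),
    Document("Q3 engineering roadmap", {"engineering"}),
]
print(retrieve_for_user("bob", "Q3 budget", docs))  # ['Q3 engineering roadmap']
```

Unknown users get an empty result, which is a safer default than falling back to unrestricted search.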

Another safeguard is to instruct the model to refuse requests that fall outside its intended scope. Furthermore, integrating with external APIs expands the attack surface: if the external AI service is compromised or communication isn't properly encrypted, data could leak.

Therefore, network security, encryption, and careful API key management are important. Some companies keep the AI entirely within their firewall and use open models to avoid these issues. As previously mentioned, prompt injection attacks are also a risk of integration - someone could trick the AI into revealing secure information by including malicious instructions in the input. Mitigating this risk may require input validation or the use of multiple AI agents, where one monitors the other. In short, standard cybersecurity practices must now extend to cover AI systems as well.

API Limits and Vendor Dependency

Using third-party AI APIs (OpenAI, etc.) means accepting their usage limits and potential changes. Many APIs enforce rate limits, such as a maximum number of requests or tokens per minute, which could slow down your application or require you to negotiate higher tiers. If your usage spikes unexpectedly, you could hit your limit and have requests rejected. Similarly, context length is capped (as discussed, GPT-4 has a maximum token window, so you can't send more than that in one request).

Your integration must be built around these constraints, perhaps by chunking inputs or summarizing on the fly. There’s also the issue of model updates and versioning. AI providers frequently update models. For example, OpenAI deprecated some older models and required users to switch endpoints within a few months. While model improvements are generally positive, they can introduce changes to the output that affect your application’s behavior. If you’ve built a lot of prompt-based logic, a model update could render the prompt ineffective or necessitate re-testing.
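Chunking an oversized input to fit a context window can be sketched as a sliding window with overlap. The function name and parameters are assumptions. The example uses a plain list of words for simplicity. Accurate limits require counting tokens with the provider's own tokenizer, such as OpenAI's tiktoken library.

```python
def chunk_tokens(tokens, max_tokens, overlap=0):
    """Split a token list into windows of at most `max_tokens`, overlapping by `overlap` tokens.

    The overlap carries a little context across chunk boundaries so that
    sentences split between chunks are not lost entirely.
    """
    if max_tokens <= 0 or not 0 <= overlap < max_tokens:
        raise ValueError("need max_tokens > 0 and 0 <= overlap < max_tokens")
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_tokens])
        if start + max_tokens >= len(tokens):
            break  # the last window already reaches the end
    return chunks


words = [f"w{i}" for i in range(10)]
for chunk in chunk_tokens(words, max_tokens=4, overlap=1):
    print(chunk)
```

Each chunk can then be summarized separately, and the partial summaries can be combined in a final request.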

Essentially, you're tied to the vendor's roadmap, which creates uncertainty for planning and maintenance. For example, imagine you tuned your customer support prompts for GPT-4, but then GPT-5 behaves slightly differently, requiring you to adjust them. Some vendors offer version locking or extended deprecation timelines to ensure stability, but not all do.

Dependence on an external service also means designing for downtime or outages, e.g., having a fallback if the API is unreachable. Enterprise architects must plan for these considerations (just as they do for any critical SaaS dependency).
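A common resilience pattern combines retries with exponential backoff and a fallback provider. The sketch below is vendor-neutral and relies on stated assumptions. `ProviderUnavailable` is a hypothetical exception that you would map your SDK's rate-limit and outage errors onto. `primary` and `fallback` can be any callables that take a prompt and return text.

```python
import random
import time


class ProviderUnavailable(Exception):
    """Hypothetical error type: map your SDK's rate-limit/outage errors to this."""


def call_with_fallback(primary, fallback, prompt, max_retries=3, base_delay=0.5, sleep=time.sleep):
    """Call the primary AI provider with exponential backoff, then fall back."""
    for attempt in range(max_retries):
        try:
            return primary(prompt)
        except ProviderUnavailable:
            if attempt < max_retries - 1:
                # Wait roughly 0.5s, 1s, 2s, ... plus random jitter to avoid synchronized retries.
                sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
    # Primary exhausted its retries: degrade gracefully instead of failing the request.
    return fallback(prompt)


def flaky_primary(prompt):
    raise ProviderUnavailable("rate limited")


def local_fallback(prompt):
    return f"[fallback model] {prompt}"


print(call_with_fallback(flaky_primary, local_fallback, "Hello", sleep=lambda s: None))
```

The fallback could be a smaller self-hosted model, a cached answer, or a handoff to a human agent, depending on the use case.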

Organizational and Skill Challenges

Lastly, integrating AI requires more than just technical expertise; it also requires the right talent and mindset. Many organizations lack the necessary AI/ML expertise to lead the integration process. Often, it’s necessary to upskill developers or hire ML engineers who understand prompt engineering, fine-tuning, and model constraints.

Business stakeholders also need training to understand AI’s limitations (so customer service teams, for example, don't blindly trust every AI response). There can be internal resistance as well - teams might worry that AI will replace jobs or disrupt workflows. Change management and clear communication about the role of AI (“augments staff, doesn’t replace them”) are important to gain buy-in.

A pilot phase with champion users can demonstrate value and address issues before a broader rollout. C-suite support is also crucial; without it, projects can stall. In essence, integrating generative AI affects technical, human, and process domains simultaneously.

Despite the challenges involved, adopting generative AI can be immensely rewarding. Companies can learn and iterate by anticipating issues and starting with small, manageable projects. As technology advances, many integration hurdles, such as cost, speed, and security, are gradually becoming less significant (hardware is becoming cheaper, and new tools are making processes more straightforward). Careful planning, partnering with experienced AI development teams, and focusing on iterative development (proof of concept -> minimum viable product -> scaling) will greatly increase the odds of smooth integration.

ROI Potential

Investing in generative AI can yield a substantial return on investment (ROI) through increased efficiency, reduced costs, and new revenue opportunities. Tech decision-makers and CTOs want to see tangible benefits, and early adopters are reporting positive outcomes. Below are some ways companies are realizing ROI with generative AI, supported by examples:

Increased Productivity and Efficiency

Generative AI often acts as a force multiplier for employees. For example, in GitHub's experiment, developers using Copilot completed a coding task 55% faster. This productivity boost means more features can be delivered in the same amount of time, or smaller teams can accomplish what used to require more people. In content creation, Jasper's clients report producing content anywhere from 50% faster to 10 times faster, compressing writing processes from days to hours.

These efficiency gains directly lower labor costs per unit of output. For instance, if a marketing team can produce blogs in half the time, they can double their output without hiring more people or reassign staff to more strategic work, improving quality and creativity. Routine tasks across many functions, such as drafting emails, summarizing reports, and scheduling, can be offloaded to AI "assistants," freeing up highly paid professionals to focus on higher-value activities.

One concrete case: Morgan Stanley implemented a GPT-4-powered assistant that lets its financial advisors query the firm’s extensive research library in natural language. Document searches that used to take hours can now be done in seconds. With less time spent on search and preparation, advisors can spend more time with clients, which boosts client satisfaction and can increase revenue through better advice or more client meetings. Internally, this means a more efficient use of each advisor’s working hours, a clear productivity ROI.

Cost Savings and Process Automation

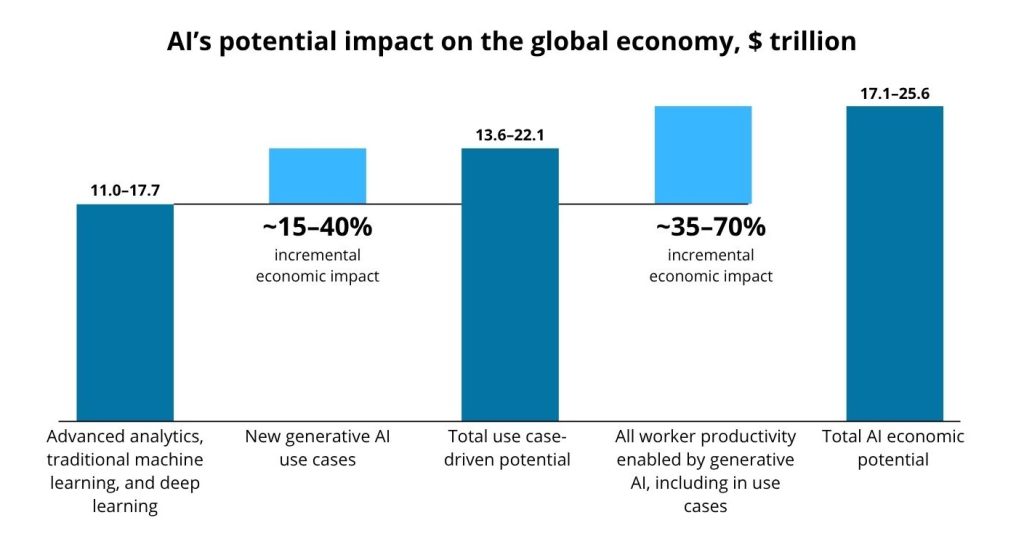

Generative AI can automate manual, time-intensive tasks, leading to cost savings. Customer service is a prime example: AI chatbots can handle frequently asked questions (FAQs) and level-one support at a fraction of the cost of human agents. This can save companies millions in support center expenses. Even if the bot only resolves 50% of inquiries, that’s 50% fewer calls to pay for. According to a McKinsey analysis, generative AI could save hundreds of billions of work hours across sectors by automating customer operations, software engineering, and more, contributing to trillions of dollars in economic value.
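The deflection arithmetic is worth making explicit, because a bot that resolves half of all inquiries does not quite halve support spend: every conversation still incurs the bot’s own cost. The sketch below assumes a simple model in which all inquiries reach the bot first and unresolved ones escalate to a human agent. The function name and all dollar figures are hypothetical placeholders, not benchmarks.

```python
def monthly_support_savings(
    inquiries: int,
    deflection_rate: float,
    human_cost_per_contact: float,
    bot_cost_per_contact: float,
) -> float:
    """Estimate monthly savings from a support chatbot (hypothetical model).

    Assumes every inquiry is first handled by the bot, and the share it
    cannot resolve (1 - deflection_rate) escalates to a human agent.
    """
    if not 0.0 <= deflection_rate <= 1.0:
        raise ValueError("deflection_rate must be between 0 and 1")
    baseline = inquiries * human_cost_per_contact  # humans handle everything
    with_bot = (
        inquiries * bot_cost_per_contact  # the bot sees every inquiry
        + inquiries * (1.0 - deflection_rate) * human_cost_per_contact  # escalations
    )
    return baseline - with_bot


# Hypothetical inputs: 100,000 inquiries/month, 50% resolved by the bot,
# $6.00 per human-handled contact, $0.50 per bot conversation.
savings = monthly_support_savings(100_000, 0.5, 6.00, 0.50)
print(f"Estimated monthly savings: ${savings:,.0f}")  # $250,000
```

Under these assumed numbers, resolving 50% of inquiries cuts support spend by about 42% (250,000 out of a 600,000 baseline), not a full 50%, because the bot’s per-conversation cost applies to every inquiry.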

In a Tidio survey, 28% of business leaders said their main reason for using AI is to cut costs. For example, they might streamline supply chain planning or automate report writing instead of outsourcing it. Generative AI can also lower error rates by catching mistakes in code or marketing copy that would otherwise lead to costly rework or compliance fines.

Another cost-saving dimension is training and knowledge transfer. AI assistants can onboard new employees faster by answering their questions and generating training materials, which can shorten learning curves.

Over time, these operational efficiencies compound. Initial implementation may involve costs, such as consulting and training the AI on your data, but the marginal cost of an AI handling an additional task is low once in production. The key is to identify use cases in which AI can reliably match or exceed human output. When successful, you’re essentially redeploying labor from mundane tasks to more valuable ones or avoiding the need to hire extra staff as the company grows. Many firms see AI as a way to achieve scalability without proportional increases in headcount, which fundamentally improves the cost structure of certain business processes.
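The point about low marginal cost can be framed as a break-even calculation: a one-time implementation cost is recovered once enough tasks have shifted from people to the AI. This is a minimal sketch assuming a fixed up-front cost and constant per-task costs; the function names and figures are hypothetical.

```python
import math


def break_even_tasks(
    upfront_cost: float, human_cost_per_task: float, ai_cost_per_task: float
) -> int:
    """Number of tasks the AI must handle before it pays back its upfront cost."""
    saving_per_task = human_cost_per_task - ai_cost_per_task
    if saving_per_task <= 0:
        raise ValueError("AI must be cheaper per task than the human baseline")
    # Round up: payback is only complete after a whole task is handled.
    return math.ceil(upfront_cost / saving_per_task)


def break_even_months(tasks_needed: int, tasks_per_month: int) -> int:
    """Months of production use needed to reach break-even."""
    return math.ceil(tasks_needed / tasks_per_month)


# Hypothetical: $120,000 to implement, $4.00 per task by hand, $0.40 by AI.
tasks = break_even_tasks(120_000, 4.00, 0.40)
print(tasks)                            # 33334
print(break_even_months(tasks, 5_000))  # 7
```

Every task past the break-even point is close to pure saving, which is why task volume weighs so heavily in the ROI case.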

Improved Customer Experience and Revenue Uplift

Generative AI can drive top-line growth by improving customer engagement and offering new products. For example, personalized AI recommendations and marketing can increase conversion rates. E-commerce companies that use AI to generate tailored product descriptions or marketing emails have seen higher click-through rates and sales.

According to a BCG study, personalization done right can lead to revenue increases of 10% or more. Customers appreciate fast, 24/7 service. AI-powered chat and self-service can increase customer satisfaction, which improves retention and lifetime value.

According to Zendesk, 57% of customers expect AI to improve service speed in the coming years, and companies that deliver on this expectation will earn customer loyalty. Companies known for excellent customer experience tend to outperform their competitors in revenue growth — by some estimates, by 4–8%.

Generative AI is becoming a key tool for delivering standout experiences at scale, such as providing instant multilingual support or proactively reaching out to customers with personalized suggestions.

On the product side, entirely new revenue streams are emerging, from AI-generated content services to mass customization of products, such as custom AI-generated apparel designs or AI-driven game content that keeps players engaged and spending.

There are also examples in media and entertainment. For instance, companies are exploring AI-generated scripts and marketing materials. This reduces content production costs and time to market for new campaigns, indirectly boosting revenue by accelerating cycles.

In B2B sales, some firms use AI to draft more effective sales proposals and responses to RFPs, thereby improving their win rates. Marketing shows a similar pattern: an insurance company’s marketing team integrated an AI writing assistant and saw a 40% increase in web traffic to its blog (attributed to more frequent, quality content), which generated more sales leads. The ROI there was not just the cost saved on writing, but actual new business gained. In short, better and faster output leads to happier customers, and happy customers drive revenue.

Innovation and Competitive Advantage

There is an important, albeit intangible, ROI in terms of innovation potential. Generative AI can enable companies to achieve feats they couldn't previously, creating competitive differentiation. For instance, a startup could use AI to make itself appear larger than it is by providing high-quality customer chat support and extensive knowledge base articles with a small team. Similarly, a manufacturing firm could use generative AI to optimize designs through AI-generated prototypes, leading to better products and more market share. Early adopters of AI can capture market attention; being seen as cutting-edge can attract customers and talent.

Companies that develop an AI strategy early stand to capture a disproportionate share of the value, whereas those that lag behind might struggle to catch up. We also see ROI in knowledge management. Internal generative AI tools can prevent knowledge loss and silos by making institutional information accessible on demand.

The value of faster, smarter decisions facilitated by AI is immense, especially in fast-moving industries, although it is hard to quantify. For many executives, the real return on AI investment will be maintaining competitiveness in an AI-enabled world. If your competitors use AI to deliver in one day what takes you one week, they will outpace you. Thus, adopting generative AI is as much about offense (creating value) as defense (not falling behind). In many boardrooms, this is how the ROI discussion is framed: beyond immediate dollar returns, AI capability is critical to business survival and long-term growth.

Examples of Business ROI

To illustrate, consider Morgan Stanley’s use of GPT-4 to assist its wealth management advisors, as mentioned earlier. The immediate ROI was increased efficiency — advisors saved time. In the long term, however, it will likely improve client retention (advisors can answer client questions faster and more accurately with the help of AI) and potentially allow each advisor to handle more clients, thus increasing revenue per advisor.

Another example: CarMax, a used-car retailer, used generative AI (GPT-3) to automatically generate thousands of customer review summaries for its website, a task that would have required an enormous effort from its content team. The summaries improved the site’s SEO and user experience, contributing to higher conversion and online sales. CarMax estimated substantial savings in content creation costs and a better customer research experience, a clear ROI win.

In the gaming industry, some companies use generative AI to create dialogue or entire quests and NPC interactions, reducing development time and costs while providing players with a more dynamic experience that can increase engagement (and revenue through retention or in-game purchases).

When building a business case for generative AI, it’s wise to define specific key performance indicators (KPIs) for each use case, such as faster resolution times in customer support, higher lead conversion in marketing, or fewer hours spent on a process. Pilot results can then be measured against those KPIs. Many organizations start with a small pilot to confirm the AI performs as expected in their environment, then expand investment once ROI is demonstrated. Fortunately, generative AI often provides quick wins: employees are generally eager to offload tedious tasks to AI and use it to be more creative, and initial productivity boosts can appear within weeks of adoption.
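Measuring a pilot against its KPIs mostly comes down to comparing pilot numbers with a baseline, while remembering that some metrics (like resolution time) improve when they go down. The following is a minimal sketch; the `Kpi` class, the KPI names, and all baselines and targets are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    baseline: float
    pilot: float
    target_improvement: float  # e.g. 0.15 means "15% better than baseline"
    lower_is_better: bool = False

    def improvement(self) -> float:
        """Relative improvement over baseline; positive always means better."""
        change = (self.pilot - self.baseline) / self.baseline
        return -change if self.lower_is_better else change

    def met_target(self) -> bool:
        return self.improvement() >= self.target_improvement


def pilot_report(kpis: list[Kpi]) -> dict[str, tuple[float, bool]]:
    """Map each KPI name to (rounded improvement, whether the target was met)."""
    return {k.name: (round(k.improvement(), 3), k.met_target()) for k in kpis}


# Hypothetical pilot results for three use cases.
report = pilot_report([
    Kpi("support_resolution_minutes", baseline=30, pilot=24,
        target_improvement=0.15, lower_is_better=True),
    Kpi("lead_conversion_rate", baseline=0.040, pilot=0.046,
        target_improvement=0.10),
    Kpi("report_prep_hours_per_week", baseline=120, pilot=100,
        target_improvement=0.25, lower_is_better=True),
])
for name, (improvement, met) in report.items():
    print(f"{name}: {improvement:+.1%} ({'met' if met else 'missed'} target)")
```

Normalizing every KPI so that "positive means better" keeps a mixed report readable; in this made-up example, the third use case improved but still missed its target, which is exactly the signal a pilot review needs before scaling.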

In summary, the ROI potential of generative AI is multifaceted: direct cost savings, efficiency gains, revenue increases, and strategic advantages. Conservative estimates (like those of McKinsey) indicate a significant impact on economic output. At the company level, even a small improvement in productivity or sales can translate to millions of dollars. As with any investment, results will vary based on execution, but companies that effectively leverage GenAI are already reaping rewards, from higher margins to faster growth. The key is to align AI initiatives with clear business objectives and track outcomes to build the business case for broader deployment.

Tool Comparison Table

With the proliferation of generative AI systems, tech leaders must decide which model best fits their needs. Below is a comparison of several prominent generative AI models across key criteria: OpenAI’s ChatGPT (GPT-4), Anthropic’s Claude, Google’s Gemini, the open-source Mistral 7B, and Meta’s LLaMA 2.

| Tool | Accuracy & Capabilities | Context Length | Pricing | API Integration | Data Privacy & Control |
|---|---|---|---|---|---|
| ChatGPT (GPT-4) | Excellent overall accuracy and reasoning; top-tier performance on many benchmarks (passes professional exams and coding tests). Handles complex queries and multi-turn dialogue reliably. The latest version supports multimodal input (image + text). | 8K tokens standard context (about 6,000 words); a 32K-token extended model is available for larger inputs. | Free basic access via the web (with usage limits). ChatGPT Plus costs $20/month for individuals. API usage is billed per token (e.g., about $0.03 per 1K input tokens for GPT-4). | Robust REST/JSON API from OpenAI, widely supported in SDKs and third-party integrations. Can be fine-tuned on custom data (GPT-3.5; GPT-4 fine-tuning in beta). | Consumer ChatGPT conversations may be used to train future models unless you opt out; data sent through the API or ChatGPT Enterprise is not used for training by default. Enterprise/commercial plans offer data isolation. GDPR compliance is evolving (OpenAI has been fined over privacy issues). |
| Claude (Anthropic) | Highly capable LLM comparable to GPT-4 on many tasks. Often writes in a slightly more verbose, "friendly" style. Excels at analyzing long documents and giving detailed, context-rich answers. Designed to be helpful and harmless (aligned with Anthropic’s "Constitutional AI" method). | 100K tokens in Claude 2 (around 75,000 words), one of the largest context windows at its launch and well suited to lengthy files or conversations. Claude Enterprise extends this to 500K tokens. | Limited free access. API pricing at Claude 2’s launch was usage-based, roughly $11 per million input tokens and $32 per million output tokens. Various pricing tiers for business usage. | Yes: Anthropic provides a cloud API with a similar JSON interface. Claude is integrated into platforms like Slack and Zoom. No fine-tuning yet, but you can customize behavior via system prompts. | Stronger privacy stance by default: Anthropic does not use customer API data to train models. Claude Enterprise offers features like encrypted data storage and data retention controls (including the option not to log content). Suitable for companies concerned about sensitive data. |
| Google Gemini | A model family from Google DeepMind, first released in December 2023 in Ultra, Pro, and Nano sizes. Google reports performance on par with or above GPT-4 on many benchmarks, with strong coding, Q&A, and reasoning. Natively multimodal: accepts text, images, audio, and video. | Gemini 1.5 Pro supports up to 1 million tokens, far beyond the other models here (Google’s earlier PaLM 2 offered 32K). Grounding with Google Search can bring live information into the context. | Usage-based (pay-as-you-go) pricing through the Gemini API and Google Cloud Vertex AI; a free tier is available in Google AI Studio. | Yes: accessible via Google Cloud’s Vertex AI and the Gemini API. Google is integrating Gemini into Workspace apps, Search, and other products, so integration within Google’s ecosystem is seamless. | Google states that data enterprise customers submit through its Cloud AI services isn’t used to train models without permission. Expect GDPR compliance, data residency options, and strong security audits. (Using Gemini via consumer Google services may mean data is processed under different terms.) |
| Mistral (7B open model) | An open-source generative model (7 billion parameters) released by the startup Mistral AI in 2023. Surprisingly capable for its size: it generates coherent text and code, but is not as knowledgeable or fluent as larger models like GPT-4. Best suited for specific tasks on smaller hardware. Released under the Apache 2.0 license, so it can be adapted freely. | Around 4K tokens context length (typical for smaller models). Adequate for short prompts and answers, but not for very long documents in one pass. (Users can fine-tune or modify the model to handle longer context, at the cost of some performance.) | Free: the model weights are openly downloadable, with no usage fees. The main cost is your own compute if you run it yourself (e.g., cloud GPU hours). Mistral AI and some third-party ML hubs also offer paid API access for convenience. | Typically self-hosted: you run it on your own servers or through a hosting service. Integration requires more ML engineering effort, but you get full control (no external API limits). Open-source libraries such as Hugging Face Transformers support deploying Mistral in applications. | Because you deploy it in-house, you retain full data control: no data leaves your environment. Ideal for high privacy needs. You can fine-tune the model on proprietary data without sharing it. Note that open models come without warranties: you must handle compliance (e.g., filtering outputs if needed) yourself. |
| LLaMA 2 (Meta) | An openly licensed family of foundation models (7B, 13B, and 70B parameter variants) released by Meta in 2023. LLaMA 2 70B is quite powerful, close to GPT-3.5 on many tasks, but generally less accurate than GPT-4 or Claude on complex reasoning. Fine-tuned derivatives (Meta’s own Code Llama for coding, plus many community fine-tunes) improve performance in specific domains. A good base for custom model builds. | 4K tokens for the base models. Some fine-tuned versions reach 8K or more using techniques like RoPE scaling, with some trade-offs. Useful for moderate-length inputs; may struggle with very long documents compared to Claude’s 100K. | Free to use under Meta’s community license, which permits commercial use subject to its terms. No API costs: you download the model. Running the 70B model requires a high-end GPU server, an infrastructure cost to factor in. Alternatively, several ML service providers host LLaMA 2 models cheaply. | No official API from Meta, but the model is widely available on cloud platforms such as Azure (Meta partnered with Microsoft) and AWS. Integration means either calling a third-party API that hosts LLaMA or deploying it on your own cloud or on-premises. It’s not as plug-and-play as ChatGPT, but many toolkits exist for integration. | Very high control: you can deploy on-premises, so sensitive data never leaves your servers, which suits businesses with strict data governance. Meta does not collect usage data from your deployments. One consideration: you are responsible for implementing safeguards. The base models are not tuned with human feedback (the LLaMA 2-Chat variants are), and any open model may produce biased or unsafe content if not moderated. |
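Because API pricing in the table is quoted per token, a rough monthly budget follows from expected request volume and prompt and response sizes. This sketch assumes per-million-token rates (the approximate Claude 2 launch figures from the table serve as example inputs, not current prices) and the common rule of thumb that one token is about 0.75 English words, which matches the table’s "8K tokens ≈ 6,000 words" conversion. The function names are hypothetical.

```python
def approx_tokens(words: int, words_per_token: float = 0.75) -> int:
    """Rough token count for English text (heuristic, not a real tokenizer)."""
    return round(words / words_per_token)


def monthly_api_cost(
    requests_per_month: int,
    input_tokens_per_request: int,
    output_tokens_per_request: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimated monthly spend in dollars for a per-token-billed LLM API."""
    input_tokens = requests_per_month * input_tokens_per_request
    output_tokens = requests_per_month * output_tokens_per_request
    return (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000


# Example: 10,000 requests/month, ~1,500-word prompts and ~375-word answers,
# priced at roughly $11 (input) and $32 (output) per million tokens.
prompt_tokens = approx_tokens(1_500)   # 2000
answer_tokens = approx_tokens(375)     # 500
cost = monthly_api_cost(10_000, prompt_tokens, answer_tokens, 11.0, 32.0)
print(f"${cost:,.2f} per month")       # $380.00 per month
```

Swapping in a different model’s rates (for example, GPT-4’s quoted $0.03 per 1K input tokens, i.e. $30 per million) shows how strongly model choice drives the budget; check each provider’s current price list before relying on the numbers.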

Future Outlook

As generative AI continues advancing at breakneck speed, we can expect transformative trends in the near future. Here are some developments on the horizon that tech leaders should watch, heading into 2025 and beyond:

- Personalization and Custom AI for Everyone: One-size-fits-all AI will give way to personalized assistants and domain-specific models that adapt to each user or organization. For example, companies might deploy custom fine-tuned LLMs that speak in their brand’s voice and deeply understand their proprietary data. Individuals could have personal AI agents that know their preferences, schedule, and work style, effectively an AI secretary or tutor tailored to each person. This trend is enabled by techniques like long-term memory in models and federated or on-device learning. Generative AI is expected to retain more context over time: your AI assistant might remember conversations from weeks ago and proactively use that context. Major players are working on memory-augmented models. The result will be more context-aware, continuously learning AI that feels less like a generic tool and more like a personalized colleague. In enterprise settings, this means AI agents could maintain profiles of customers to personalize service, or of employees to personalize decision support. Gartner predicts that by 2026, 50% of knowledge workers will regularly use an AI assistant in their daily workflow (up from just 5% in 2023), making AI assistants as common as email. Companies that leverage this for personalization (while respecting privacy) can greatly enhance user experiences.

- Multimodal and Versatile Generative Models: The next generation of models, such as OpenAI’s rumored GPT-5 or Google’s Gemini, are multimodal, meaning they can handle text, images, audio, and potentially video and actions (e.g., tool use). Early versions of this already exist: AI can take in a complex task and output a variety of content types. For example, you could ask an AI to "create a marketing campaign for product X" and get ad copy, a slogan, a few banner designs, and a video script all at once. Rather than relying on separate systems for each modality, models will unify them. This unlocks new possibilities: an architect could sketch a diagram and have the AI produce a written project plan plus a 3D model, and a developer could describe an app and receive UI designs, code, and an explanatory video. Text-to-video generation will advance, enabling on-demand video content from simple prompts, though quality is still improving. Multimodal AI will also improve understanding; for example, it can read a chart image together with the accompanying text to give a better answer. In essence, we’re moving toward AI that can see, hear, and speak within a unified model. This will make AI more applicable in fields such as medicine, where an AI could look at an X-ray and the doctor’s notes to draft a report, and retail, where an AI could generate an entire e-commerce product page, including the title, description, images, and video demo. It also raises the bar for competitors: the AI that can "do it all" may become the preferred platform.

- Rise of Autonomous AI Agents and Workflows: Perhaps the most exciting (and disruptive) trend is the emergence of agentic AI – systems that don’t just respond to single prompts, but can take initiative, chain together tasks, and interact with other systems to accomplish goals. We’ve seen prototypes like AutoGPT and BabyAGI in 2023: give an AI an objective and it generates sub-tasks and tries to execute them one by one. In 2025, this concept is more refined and reliable. Future AI agents could function like junior employees or autonomous consultants. For example, an AI agent might be told “handle our social media presence.” It could then generate content, post it, analyze engagement, learn and adjust the strategy – all with minimal human input. Or an IT support agent could troubleshoot routine incidents by checking system logs, searching documentation, and only escalating to humans when it’s truly necessary. These agents will have tool use abilities: e.g. call APIs, run database queries, open web browsers – effectively integrating with software and services to act on the world (with proper sandboxing and permissions, of course). The benefit is handling multi-step operations hands-free. Enterprise software vendors are already embedding this: Microsoft’s Copilot vision involves AI agents in Office that can, say, draft an email, then schedule a meeting based on the response, then update a CRM record, all following from one user instruction. Internally, businesses will orchestrate processes with AI: imagine a procurement workflow where an AI agent automatically drafts purchase orders when inventory is low, then another AI in finance approves by cross-checking budget, etc., with humans only overseeing. This could dramatically improve operational efficiency, potentially by an order of magnitude in some areas. However, to reach this, a lot of trust and validation needs to be in place (no one wants fully unchecked autonomy). 
Initially, we’ll see AI agents working in tandem with humans – perhaps doing 3-4 steps then asking for confirmation. Over time, as confidence grows, more steps get automated. The future vision is AI-powered autonomous workflows that run 24/7, executing business processes end-to-end (from customer request to fulfillment, or from data analysis to decision implementation). Companies effectively gain a digital workforce of bots. This could reshape job roles – routine coordination work might fade, while strategic and creative roles flourish. It’s a bit early, but the trajectory is clear: AI will move from assistant to agent.

- Better AI Reasoning and Reliability: While current models are impressive, they still make basic mistakes, such as simple math errors or logical lapses. Ongoing research addresses this through techniques like chain-of-thought prompting (having the model work through intermediate reasoning steps before answering) and by integrating symbolic logic or external tools such as calculators. Future LLMs are likely to be more accurate and less prone to glitches in domains like math, coding, and factual QA. OpenAI, DeepMind, and others are working on models that combine neural nets with logic engines or knowledge graphs to ground their outputs. Continuous improvement via user feedback will also make production models more reliable. Models that check their own work with tools, for example by running a web search to verify an answer before responding, are already starting to appear. All this means businesses will get more dependable results, increasing trust and expanding use cases (eventually, perhaps, an AI-drafted legal brief that a lawyer only lightly edits, once hallucinations are sufficiently minimized).

- Regulation and Standards: On the non-technical side, the future will bring more clarity on AI regulation. Governments in the EU, US, and elsewhere are formulating rules for AI transparency, bias mitigation, and data usage. We expect requirements like disclosures for AI-generated content (to prevent deepfake misuse), audits for high-risk AI systems (similar to financial audits), and privacy standards for training data (perhaps even new intellectual property frameworks). For enterprises, this likely means establishing compliance documentation for AI – e.g. being able to explain how a model makes decisions (at least at a high level) and proving you’ve tested it for bias or errors. An optimistic view is that industry will converge on best practices and certifications (there might be “AI safety certified” stamps akin to ISO certifications). All of this will make it easier for companies to adopt AI without stepping into legal grey areas. On the flip side, if regulation is too heavy-handed, it could slow innovation in some jurisdictions. Tech decision-makers should stay abreast of AI laws to ensure their implementations are future-proof (for instance, if you plan to use AI for HR recruiting, be aware of emerging laws about algorithmic bias in hiring).

- AI in the Enterprise Stack & Agents for IT: Enterprises will increasingly weave AI through their tech stack, not just as user-facing tools but in the backend of systems. Databases might auto-optimize using AI, and logs might be reviewed by AI for anomalies (already happening in cybersecurity). Software development will change too: we might see AI-driven automatic updates or refactoring in codebases, with human code reviews focusing on higher-level issues. Enterprise knowledge management will be transformed: instead of static intranet pages, employees will query a company-wide AI that knows everything they are permitted to access within the organization (from past project documents to expert knowledge). In this sense, the organization itself becomes more of an "intelligent entity," with an always-updated memory available to every employee via AI. This could break down silos and speed up cross-department collaboration. For example, today an employee might not know that someone in another office has already solved a problem; future AI could surface that solution as soon as the problem arises, regardless of organizational boundaries.

- Human-AI Collaboration and New Roles: New job roles are likely to emerge, such as AI strategy officer, prompt engineer, AI auditor, and AI ethicist, institutionalizing the collaboration between humans and AI. Every knowledge worker may need "AI literacy," much as spreadsheet skills became essential in the past, and companies might routinely train staff to use the company’s AI tools effectively to amplify their work. This raises an interesting scenario: the competitive edge may go to the teams that work best with AI, not just to those with the best AI. In other words, culture and skills around AI use could matter as much as the technology itself. Organizations that foster an AI-friendly culture (encouraging experimentation, sharing AI success stories internally, continuously updating processes) will benefit more from the technology.
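The human-in-the-loop pattern from the autonomous-agents trend (an agent doing a few steps, then asking for confirmation) can be sketched as a loop that pauses for approval at fixed intervals. This is an illustrative skeleton, not any vendor’s agent framework: the step functions stand in for real tool calls such as API requests or database queries, and every name here is hypothetical.

```python
from typing import Callable

# A step is a human-readable description plus the action that performs it.
Step = tuple[str, Callable[[], str]]


def run_with_checkpoints(
    steps: list[Step],
    approve: Callable[[list[tuple[str, str]]], bool],
    checkpoint_every: int = 3,
) -> list[tuple[str, str]]:
    """Run steps in order, asking a human to approve every few steps.

    Returns the log of completed (description, result) pairs. Execution stops
    early if the approver declines at a checkpoint.
    """
    log: list[tuple[str, str]] = []
    for i, (description, action) in enumerate(steps, start=1):
        log.append((description, action()))
        more_steps_remain = i < len(steps)
        if i % checkpoint_every == 0 and more_steps_remain and not approve(log):
            break  # the human said stop; remaining steps are not executed
    return log


# Hypothetical procurement workflow; each lambda stands in for a tool call.
workflow: list[Step] = [
    ("check inventory", lambda: "widgets below threshold"),
    ("draft purchase order", lambda: "PO draft for 500 widgets"),
    ("check budget", lambda: "within quarterly budget"),
    ("submit purchase order", lambda: "PO submitted"),
    ("update CRM record", lambda: "vendor record updated"),
]


# Stand-in for a human reviewer (in practice, a UI prompt or ticket approval)
# who declines to let the agent submit the order on its own.
def reviewer(log: list[tuple[str, str]]) -> bool:
    print("Checkpoint:", [description for description, _ in log])
    return False


completed = run_with_checkpoints(workflow, reviewer)
print(len(completed))  # 3
```

Raising `checkpoint_every` as confidence grows mirrors the gradual shift the trend describes, from frequent confirmation toward longer autonomous runs.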

In essence, the future outlook for generative AI is one of deeper integration, greater capability, and broader accessibility. AI will transition from a novelty to an everyday utility across white-collar (and some blue-collar) work. Much like the internet and mobile did, generative AI is poised to spawn entirely new business models and possibly even new industries (who would have imagined “virtual influencer” or “AI-powered fashion designer” jobs a few years ago?).

For startups, the field is ripe with opportunities to build specialized generative AI solutions (we already see AI legal tech, AI content platforms, etc.). For established companies, there’s the chance now to reinvent operations with AI at the core – those who do so successfully could achieve leapfrog improvements in productivity.

Enterprise decision-makers should prepare for an AI-driven future where AI is not just a tool but a collaborator. Focus on upskilling teams, upgrading infrastructure, and identifying strategic areas to apply AI. Keep an eye on breakthroughs in multimodal models and autonomous agents, which could dramatically expand what AI can handle. The organizations that embrace these trends responsibly and proactively will be the ones leading their industries in the coming decade. As Satya Nadella (Microsoft’s CEO) put it, we are moving from a world where every person has a computer to one where every person has an AI assistant, and soon every business process will have an AI workflow. The future of generative AI is incredibly exciting, and it’s closer than many realize.

How a Company Like Intersog Can Help

Adopting generative AI can be complex – from selecting the right model to integrating it securely into your products. This is where an experienced AI development company like Intersog becomes an invaluable partner.

First, you gain access to specialized AI knowledge without having to hire a full in-house team – our experts stay on top of the latest in AI research and industry best practices. Second, we emphasize a business-centric approach: we start with your business objectives and ensure the AI project delivers tangible value, not just cool tech for its own sake. Third, Intersog’s experience in enterprise consulting means we place a strong focus on reliability, scalability, and security (critical for enterprise AI deployments) – we build with production in mind, not just a demo. Finally, we pride ourselves on transparency and collaboration: you’ll always know the progress of your project, and we work closely with your internal teams so that the solution fits naturally in your organization.

Intersog offers a range of AI development services – from initial strategy consulting to full product development. Whether you need a quick AI workshop to spark ideas or a dedicated team to build a complex AI-driven platform, we can tailor our services to your needs. We’ve helped clients implement AI across various domains, including finance, healthcare, retail, and manufacturing. For example, we built a custom NLP solution for a healthcare provider to analyze patient feedback (improving their service quality), and we developed a predictive analytics engine for a fintech firm to personalize loan offers. Our cross-domain experience means we bring fresh ideas and proven solutions to new challenges.
