AI’s footprint is expanding across healthcare, from diagnostics and treatment planning to patient monitoring and drug discovery. Advanced algorithms can now analyze medical images, electronic health records, and genomic data with remarkable speed and accuracy, revealing patterns that might escape human clinicians.

This transformation is fueled by breakthroughs in machine learning, an explosion of medical data, and a supportive tech ecosystem. Hospitals and research institutions are increasingly collaborating with AI software companies that offer development services tailored to healthcare needs. These partnerships enable the integration of cutting-edge AI solutions into real-world clinical workflows, facilitating the translation of algorithms from the laboratory to the bedside. In short, AI is moving from hype to reality in medicine, demonstrating its potential to transform how we diagnose diseases, care for patients, and develop new therapies.

Applications of AI in Medicine

AI in Diagnostics (Radiology, Pathology, Ophthalmology)

One of the most mature applications of AI in medicine is diagnostics, particularly in fields such as radiology, pathology, and ophthalmology, which involve interpreting images. AI systems can scan X-rays, CT scans, and MRIs for anomalies with a level of detail and speed that is difficult for humans to match. For instance, the “CHIEF” model, developed at Harvard, analyzes digital pathology slides and has achieved an accuracy rate of about 94% in identifying cancers across 11 different tumor types - a performance comparable to that of expert pathologists.

In ophthalmology, Google DeepMind created an AI that examines retinal OCT scans and can recognize over 50 eye diseases as accurately as specialist doctors. In testing, the AI's treatment recommendations agreed with a panel of ophthalmologists more than 94% of the time.

These real-world results demonstrate that AI can equal or even surpass human clinicians in specific tasks like image analysis. AI diagnostic tools are already helping to flag suspicious lesions on scans, prioritize critical cases, and provide doctors with decision support, suggesting a future where diagnoses are faster and perhaps more accurate than ever before.

Predictive Analytics and Early Disease Detection

Beyond image diagnostics, AI is proving invaluable in predictive analytics, combing through health data to predict diseases or complications before they fully manifest. Modern hospitals generate massive amounts of patient information, such as lab test results, vital signs, and medical histories. AI can analyze these datasets to uncover subtle warning signs. Early indications of illness often hide in routine data. For example, over 70% of medical decisions involve lab tests, and these results can contain early signs of conditions such as cancer or liver disease. By applying machine learning to this data, predictive models can alert physicians to high-risk patients before noticeable symptoms appear.

In a Siemens initiative, for example, an AI model using routine blood tests identified patients at risk for severe liver disease. This enabled earlier intervention to prevent progression to liver cancer.

Similarly, researchers have used AI to forecast events such as sepsis, heart failure, and stroke hours or even days in advance by recognizing patterns in electronic health records. These early detection capabilities enable clinicians to take preventive actions, such as adjusting treatment or monitoring more closely, which can significantly improve outcomes. In short, AI-powered predictive analytics are moving healthcare toward a proactive model by catching diseases in their nascent stages, when they are easier to manage and treat.
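
To make the idea concrete, here is a minimal sketch of how a predictive model might turn routine lab values into an alert. It is an illustration only: the feature names, weights, bias, and threshold are hypothetical placeholders rather than values from any real or validated model, and the two patients are synthetic.

```python
import math

# Hypothetical weights for illustration only; not derived from any real model.
WEIGHTS = {"alt_u_per_l": 0.02, "platelets_k_per_ul": -0.01, "age_years": 0.03}
BIAS = -2.0
ALERT_THRESHOLD = 0.5  # assumed cut-off; a real one would be set during validation


def risk_score(labs: dict[str, float]) -> float:
    """Logistic model: squash a weighted sum of lab values into a 0-1 risk."""
    z = BIAS + sum(WEIGHTS[name] * labs[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def flag_high_risk(patients: dict[str, dict[str, float]]) -> list[str]:
    """Return the IDs of patients whose score meets the alert threshold."""
    return [
        pid for pid, labs in patients.items()
        if risk_score(labs) >= ALERT_THRESHOLD
    ]


# Synthetic patients, not real data.
patients = {
    "patient-a": {"alt_u_per_l": 30, "platelets_k_per_ul": 250, "age_years": 40},
    "patient-b": {"alt_u_per_l": 120, "platelets_k_per_ul": 90, "age_years": 62},
}
print(flag_high_risk(patients))
```

In a real deployment the weights would be learned from historical records, and the alert would go to a clinician for review rather than trigger any action on its own.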

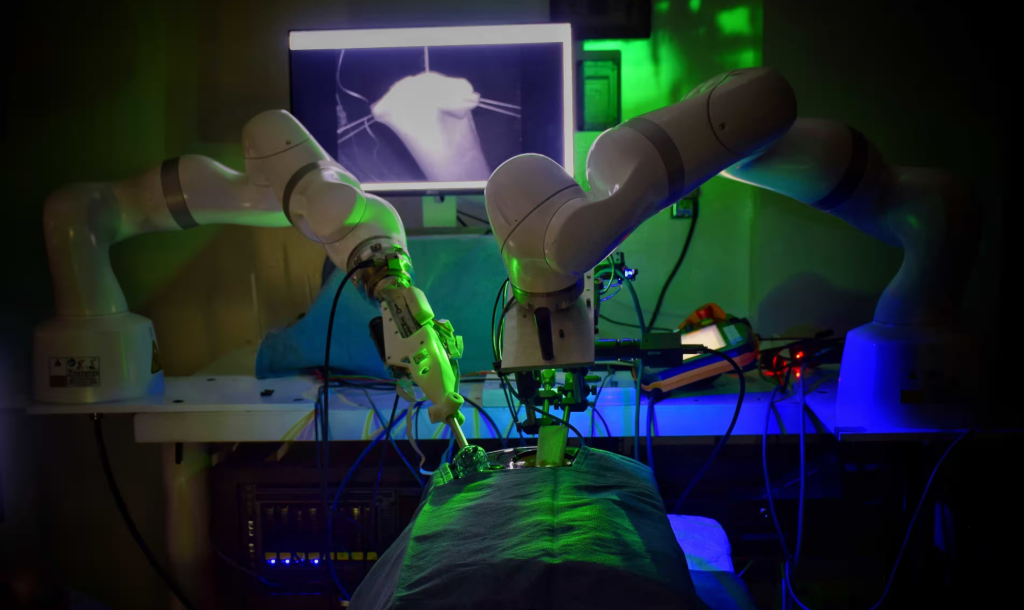

Robotic Surgery and AI-Assisted Treatment

Robotic surgery has already become standard in many operating rooms, and AI is increasingly being used to augment these surgical systems. The da Vinci surgical robot is the best-known example: surgeons use it to perform minimally invasive procedures with enhanced precision. To date, over 10 million procedures have been performed with da Vinci systems worldwide.

Current surgical robots are controlled by human surgeons, but AI assists by stabilizing instruments, filtering tremors, and providing real-time guidance, such as highlighting anatomy or safe cutting zones.

Researchers are also advancing the field with semi-autonomous surgical robots. In 2022, the Smart Tissue Autonomous Robot (STAR) successfully performed laparoscopic surgery to reconnect two ends of the intestine in pigs without active human guidance. Notably, it did so "significantly better" than experienced surgeons who performed the same procedure. The experiment, published in Science Robotics, demonstrated that AI-driven robots can perform delicate tasks such as suturing with submillimeter accuracy and consistency, which could reduce human error in the future.

Outside the operating room, AI assists with treatment planning and delivery. In oncology, for instance, AI algorithms help oncologists design radiation therapy plans by calculating the optimal dose distribution to target tumors while protecting healthy tissue. In personalized medicine, which will be discussed more below, AI can suggest treatment options tailored to individual patients based on predicted responses. Pharmacological AI systems can recommend ideal drug dosages or combinations by analyzing patient data, such as for insulin dosing in diabetes management. While fully autonomous treatment remains on the horizon, these AI-assisted tools act as advanced "co-pilots," enhancing the precision, safety, and efficacy of treatments while leaving final decisions in human hands.

Personalized Medicine and Genomics

In the era of personalized medicine, AI plays a pivotal role in tailoring treatments to an individual’s genetic makeup and unique clinical profile. Analyzing a patient’s genome or other -omics data, such as proteomics or metabolomics, is extremely data-intensive, an area in which machine learning excels. AI algorithms can sift through genetic data to identify clinically important mutations or biomarkers, helping to diagnose genetic disorders and determine how patients will respond to therapy.

In oncology, for example, companies like Tempus use AI-driven platforms with next-generation sequencing to generate comprehensive genomic profiles of cancer patients and match them with the most effective targeted treatments. These systems can cross-reference a tumor’s molecular characteristics against vast databases of clinical trials and research to suggest precision therapies, essentially providing a “personalized” treatment roadmap for each patient.

AI goes beyond genomics to enable pharmacogenomics, which predicts how patients will metabolize or react to certain drugs based on their genes. This information can be used to make better medication choices and dosages, resulting in greater efficacy and fewer side effects. In the case of rare diseases, where diagnosis often involves searching for a needle in a genomic haystack, AI has successfully helped identify the gene variants responsible for the disease, shortening a diagnostic odyssey that would otherwise take years. By analyzing data from genetics, medical history, lifestyle factors, and more, AI facilitates truly personalized care plans. Patients benefit from treatments that are more precisely aligned to their condition, such as selecting a cancer therapy that targets a specific mutation found in their tumor, which improves outcomes. As datasets grow, we can expect AI to become even better at tailoring medical decisions to the individual level - the core promise of personalized medicine.

Virtual Health Assistants and Chatbots

The healthcare industry has seen an increase in the use of AI-powered virtual assistants and chatbots that aim to provide patients with care and support in a scalable way. These range from simple, rule-based chatbots that answer frequently asked questions, to more sophisticated conversational agents that can triage symptoms, or even provide counseling.

For instance, AI chatbots are being used to handle after-hours patient inquiries. They can ask patients about their symptoms and advise them on whether to try home care, schedule a doctor visit, or seek emergency care.
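
A rule-based version of this triage step can be sketched in a few lines. The symptom lists below are hypothetical examples, not clinical guidance, and a production system would rely on clinically reviewed content and usually a language model or intent classifier in front of the rules. Note that anything the rules do not recognize is handed off to a person rather than answered.

```python
# Hypothetical symptom lists for illustration; real triage content
# must be written and reviewed by clinicians.
EMERGENCY = {"chest pain", "difficulty breathing", "severe bleeding"}
SEE_DOCTOR = {"persistent fever", "ear pain", "rash"}
HOME_CARE = {"mild cough", "runny nose"}


def triage(symptoms: list[str]) -> str:
    """Return the most urgent disposition matched by the reported symptoms."""
    reported = {s.strip().lower() for s in symptoms}
    if reported & EMERGENCY:
        return "seek emergency care"
    if reported & SEE_DOCTOR:
        return "schedule a doctor visit"
    # Advise home care only when every reported symptom is a known mild one.
    if reported and reported <= HOME_CARE:
        return "try home care"
    # Unknown or missing input is never guessed at.
    return "hand off to a human clinician"


print(triage(["Chest pain", "rash"]))
print(triage(["blurry vision"]))
```

Checking the most urgent category first means a patient who reports both a rash and chest pain is routed to emergency care, not to a routine appointment.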

During the pandemic, many organizations deployed chatbots to guide people through screening protocols. Virtual health assistants are also used for routine tasks such as scheduling appointments, providing medication reminders, and offering basic health education. These assistants effectively act as a “digital front door” to the clinic, providing 24/7 access.

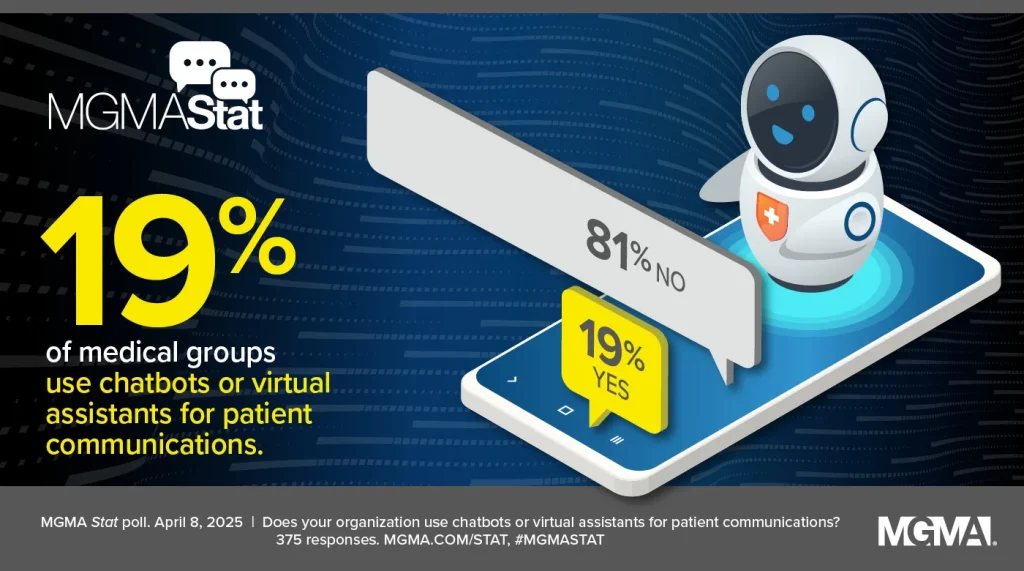

Adoption of these tools is growing steadily. As of 2025, approximately 19% of medical practices reported using chatbots or virtual assistants for patient communication. While this indicates that the majority have yet to implement such tools, the global healthcare chatbot market is expanding rapidly, having already exceeded $1 billion in 2025 and projected to reach approximately $10 billion within the next decade.

The appeal is clear. These AI assistants can reduce staff workload by automatically handling common questions and administrative tasks, and they provide patients with quick answers, eliminating the need to wait on hold. For example, a clinic can use a chatbot to manage appointment requests or remind patients of follow-ups, thereby reducing no-show rates and phone call volume. Some health systems have integrated virtual nurse assistants that use friendly chat to check in with patients with chronic conditions and monitor symptoms or adherence between appointments.

That said, there are limitations and concerns that must be addressed. Chatbots must be carefully designed to provide safe advice and hand off complex or urgent queries to human professionals. They must also maintain patient privacy and data security. When implemented correctly, virtual health assistants can greatly enhance patient engagement and access to information. They essentially act as an always-available support system between formal healthcare encounters.

AI in Drug Development and Clinical Trials

One of the most consequential applications of AI is in drug discovery and development - an area traditionally known for being extremely costly and time-consuming. By analyzing chemical and biological data at speeds impossible for humans, AI helps identify new therapeutic compounds much faster.

A landmark moment occurred in early 2020 when the first AI-designed drug candidate, developed by the UK startup Exscientia, entered human clinical trials. The molecule, which treats obsessive-compulsive disorder, was discovered and optimized using AI algorithms, providing pivotal proof of concept. Since then, the pipeline of AI-discovered drugs has grown. As of 2022, at least 15 AI-designed molecules were reportedly in clinical development across various companies, and this number is rising rapidly.

AI accelerates drug discovery in several ways. Machine learning models can screen millions of chemical structures to predict which are most likely to bind to a disease-related target protein, dramatically narrowing down candidates for synthesis and testing. AI systems like DeepMind’s AlphaFold, which predicts protein structures, open new possibilities for drug targeting. Generative AI models, such as GANs and transformer-based models in chemistry, can invent novel molecular structures with desired properties. These models suggest new drug leads that have never been synthesized before.

The result is a much shorter research and development (R&D) cycle. For example, Insilico Medicine used generative AI to discover a new drug for pulmonary fibrosis and advance it to Phase I trials in under 30 months - about half the typical time and at a fraction of the cost of traditional discovery. AI handled tasks ranging from identifying a new biological target to designing potential drug molecules, significantly speeding up the project. This kind of efficiency gain is a game-changer in the pharmaceutical industry, where it can take over 10 years and billions of dollars to bring a single drug to market.

AI is also optimizing clinical trials. AI algorithms can scan medical records to quickly find eligible participants, ensuring that trials aren’t delayed by a lack of patients. AI can also assist with trial design by identifying the best patient stratification or predicting which sites might have higher enrollment success. This reduces the risk of costly trial failures. During trials, AI-driven analytics can monitor incoming data in real time, predicting efficacy or safety signals faster than traditional interim analyses. These contributions mean that new treatments could reach patients sooner and at a lower cost. In the long run, this could usher in more innovative therapies and democratize drug development. AI could level the playing field, allowing smaller biotech companies to compete with pharmaceutical giants.
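
The participant-matching step described above can be sketched as a filter over structured records. This is a simplified illustration: the record fields and every inclusion and exclusion criterion are hypothetical, and in practice much of the AI effort goes into extracting such fields from free-text notes before a filter like this can run.

```python
from dataclasses import dataclass


@dataclass
class PatientRecord:
    patient_id: str
    age: int
    diagnoses: set[str]
    egfr: float  # kidney function estimate, mL/min/1.73 m^2


# Hypothetical criteria for illustration; each trial protocol defines its own.
MIN_AGE, MAX_AGE = 18, 75
REQUIRED_DIAGNOSIS = "type 2 diabetes"
EXCLUDED_DIAGNOSES = {"pregnancy", "active cancer"}
MIN_EGFR = 45.0


def is_eligible(record: PatientRecord) -> bool:
    """Apply every inclusion and exclusion criterion to one record."""
    return (
        MIN_AGE <= record.age <= MAX_AGE
        and REQUIRED_DIAGNOSIS in record.diagnoses
        and not (record.diagnoses & EXCLUDED_DIAGNOSES)
        and record.egfr >= MIN_EGFR
    )


def screen(records: list[PatientRecord]) -> list[str]:
    """Return the IDs of candidates to pass to the study team for review."""
    return [r.patient_id for r in records if is_eligible(r)]


# Synthetic records, not real patients.
records = [
    PatientRecord("p1", 54, {"type 2 diabetes"}, 80.0),
    PatientRecord("p2", 81, {"type 2 diabetes"}, 70.0),
    PatientRecord("p3", 40, {"type 2 diabetes", "pregnancy"}, 90.0),
    PatientRecord("p4", 60, {"type 2 diabetes"}, 30.0),
    PatientRecord("p5", 50, {"hypertension"}, 85.0),
]
print(screen(records))
```

The output is a shortlist for human review, not an enrollment decision.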

The Most Discussed Issues and Concerns Around AI in Medicine

Can AI truly outperform clinicians in complex diagnostics?

This question lies at the heart of the debate surrounding AI in medicine. AI has shown striking success in controlled tasks. However, these AIs are often specialized for one narrow task and lack the general reasoning and contextual understanding of human clinicians.

Real-world diagnostics can be very complex. Patients may have multiple conditions or present symptoms that don't fit the patterns the AI was trained on. Human doctors bring common sense, intuition, and the ability to integrate diverse information sources, including conversations with patients. These are areas in which AI remains weak.

Most experts do not believe that AI will replace doctors outright, but rather augment them. The saying "AI won't replace physicians, but physicians who use AI will replace those who don't" has grown in popularity. In practice, the best outcomes often occur when doctors use AI as a tool. For example, the AI might pre-screen images or data and highlight findings, and then the physician makes the final call after considering the patient’s full clinical picture.

It’s also worth noting that AI systems can make mistakes that a person might catch, such as misidentifying an artifact on an X-ray as a disease, especially if the input data is flawed.

For complex diagnostic dilemmas, human oversight remains crucial. While AI outperforms clinicians in certain narrow benchmarks, such as detecting specific cancers on scans, it works best as an assistant. The goal is a collaborative model. AI handles repetitive pattern recognition tasks with superhuman consistency, while clinicians apply their broader expertise to verify and act on those findings. This synergy can improve care. However, AI alone isn't ready to safely manage the full complexity of real clinical diagnostics without a human in the loop.

Who is accountable for AI mistakes in patient care?

When an AI system suggests an incorrect diagnosis or medication dosage, who is responsible if something goes wrong?

This is an urgent concern as AI tools become more autonomous. In traditional healthcare, if a doctor makes a mistake, they (and their institution) can be held accountable for malpractice. However, with AI, the situation is murkier. The accountability could lie with the physician using the AI, the hospital deploying it, the software developers, or even the device manufacturer.

For example, if a radiology AI misses a cancer on a scan that a competent radiologist would have detected, is the radiologist negligent for relying on the AI, or is the fault in the software? If a fully autonomous diagnostic AI falsely assures a patient that they are healthy when they are not, could the patient sue the AI’s creator?

Currently, in most jurisdictions, AI tools in healthcare are considered assistive, and licensed clinicians are expected to verify AI outputs. They are ultimately responsible for care decisions. However, as AI becomes more complex and possibly more opaque due to the nature of machine learning, this expectation becomes problematic. There is active debate and a need for policy clarity here. Regulators and professional bodies are working on guidelines. Some proposals suggest certifying AI in high-stakes roles similarly to medical devices. If an AI acts as an autonomous agent, perhaps a new legal framework (or insurance scheme) is needed to assign liability. Until these frameworks are established, most hospitals mitigate risk by keeping a human in the loop and treating AI recommendations as advisory.

Nonetheless, the ethical dilemma remains: if an AI contributes to an error, is the creator, the user, or the AI itself responsible? Clear answers will be crucial for building trust in medical AI - clinicians need to know they won’t be hung out to dry for an AI’s mistake, and patients need assurance that someone is accountable for their safety.

How do we balance patient privacy and data sharing?

AI systems, especially those used in healthcare, require large amounts of data to perform well. However, this conflicts with strict patient privacy regulations, such as HIPAA in the U.S. and GDPR in Europe, which protect sensitive health information. The challenge lies in balancing the need to share data for AI development with the obligation to keep patient details confidential. Past incidents have shown that de-identified medical data can sometimes be traced back to individuals if not handled carefully, raising concerns about consent and misuse.

Healthcare AI developers are exploring solutions to this dilemma. One approach is anonymization, which involves stripping datasets of personal identifiers and using techniques to prevent re-identification. Another promising strategy is federated learning, in which an AI model is trained using data from multiple hospitals without any of the data ever leaving the hospitals - only the learned model parameters are shared, not the raw data. Thus, an AI can benefit from the collective wisdom of 20 hospitals' records without a central repository of all patient data. Encryption techniques and secure data enclaves are also being employed to enable AI analysis of data in a protected environment.
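
The federated learning idea can be illustrated with a toy example in the style of federated averaging. Everything here is an assumption made for illustration: the model is a one-variable linear regression, the two "hospitals" hold synthetic points generated from y = 2x + 1, and the learning rate and round counts are arbitrary. The point is only that `local_update` sees raw data while `federated_average` sees parameters and sample counts.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Run gradient descent at one hospital on its own (x, y) pairs.

    Only the updated parameters and the sample count are returned;
    the raw data never leaves this function.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates):
    """Server step: average parameters, weighted by each site's sample count."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return (w, b)


# Synthetic data following y = 2x + 1, split across two sites.
hospital_data = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (0.5, 2.0)],
]

global_weights = (0.0, 0.0)
for _ in range(50):  # communication rounds
    updates = [local_update(global_weights, data) for data in hospital_data]
    global_weights = federated_average(updates)

print(global_weights)
```

Real systems add further safeguards on top of this, such as secure aggregation, because shared parameters can still leak some information about the underlying data.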

Despite these measures, legitimate concerns remain. AI requires vast datasets, which increases the “attack surface” for hackers. A breach in an AI system could potentially leak millions of records if proper safeguards aren’t in place. Incorporating AI doesn't change the fundamentals. Strong data security, including network security, encryption, and audit trails, is essential, as is compliance with privacy laws at every step. Transparency with patients is also critical. Patients should be informed if their data will be used to train AI and, ideally, be asked to provide informed consent for such secondary use.

In summary, we must foster AI innovation without sacrificing privacy. Techniques such as federated learning and robust de-identification, coupled with strict governance, offer a way to have powerful AI models while respecting patient confidentiality.

What runway do healthcare systems have to adopt AI?

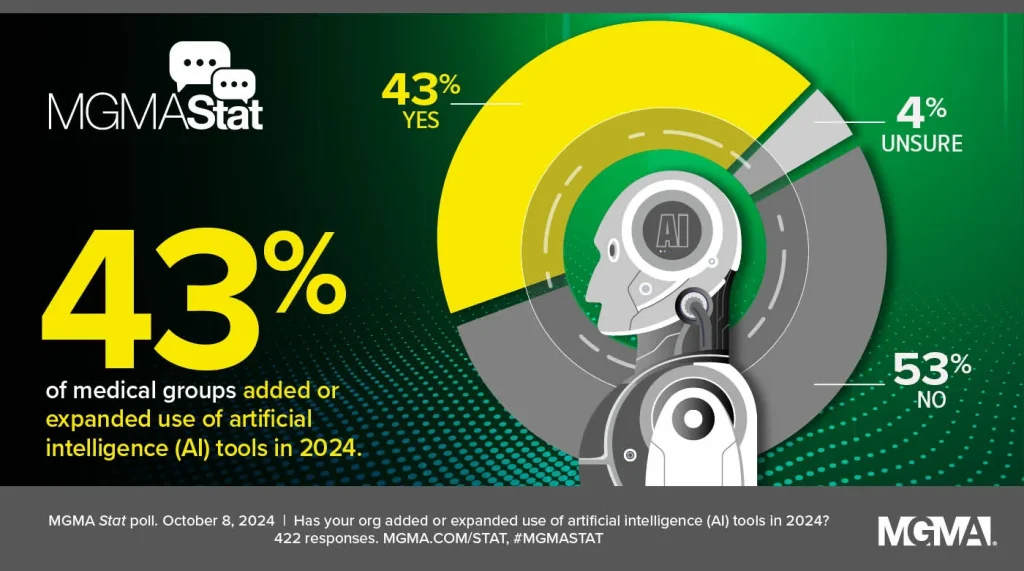

Historically, healthcare is not the fastest-moving industry when it comes to adopting new technology - and for good reason, since lives are at stake. Today, many health systems are cautiously exploring AI by running pilot programs or limited deployments. The question of "runway" essentially asks: How long do hospitals and clinics have before AI becomes standard care, and are they ready for it? Based on current trends, the answer seems to be that the runway is short and getting shorter. However, many organizations are not yet fully prepared.

Recent surveys indicate that adoption is accelerating. In 2024, for example, over 43% of medical groups reported adding or expanding AI tools that year (up from 21% the previous year). This shows a quickening pace - essentially, many providers went from experimenting in 2023 to actively scaling up in 2024. If this trend continues, a majority of practices may have some form of AI assisting in care or operations within a few years.

However, there’s still a significant portion (over half in that survey) that had not introduced new AI tools as of 2024. Reasons given include limited IT budgets, the need for more time to plan and integrate systems, and uncertainty among clinicians about the benefits and workflow impact. Many healthcare IT infrastructures also remain outdated - some hospitals are still struggling with basic electronic records and interoperability, which is a prerequisite to effective AI. Furthermore, cultural readiness varies; some clinicians are enthusiastic early adopters, while others remain skeptical or uncomfortable with AI.

Given these factors, healthcare systems likely have a window of a few years to get “AI-ready” - upgrading their data systems, training staff, and establishing governance for AI - before falling behind the curve. The runway is the next 5-10 years during which AI will shift from optional pilot to standard practice in leading centers. Hospitals that invest in the groundwork now (data quality, IT infrastructure, clinician education) will be in a better position to safely and effectively implement AI as it becomes mainstream. Those that wait too long may find themselves scrambling to catch up as AI-driven care becomes a competitive necessity for quality outcomes and operational efficiency. In essence, the time to start preparing for AI is now, even if full deployment for some organizations might still be a few years out.

What real-world value, training, and policy are needed for wide AI adoption?

For AI to be widely adopted in healthcare, several things must fall into place.

- Demonstrated real-world value: Healthcare providers and payers will only embrace AI at scale when there is clear evidence of its benefits, such as improved patient outcomes, greater efficiency, and cost savings. Current AI pilot projects must publish results demonstrating that an AI diagnostic tool reduces misdiagnoses or that an AI workflow tool saves nurses time without compromising care, for instance. As more studies emerge proving value, confidence in AI will grow. Healthcare is rightly evidence-driven, so rigorous clinical validation of AI systems in diverse settings is essential to drive broader adoption.

- Training and education: Introducing AI into clinical settings requires training at multiple levels. For example, clinicians need to know how to interpret AI outputs, such as understanding what a risk score means, what an algorithm’s limitations are, and how to integrate those insights into decision-making. Currently, many clinicians have limited knowledge of how AI works, which leads to skepticism or misuse. Developing curricula in medical schools and offering continuous professional development on AI in medicine will help bridge this gap. Non-clinical staff, such as medical administrators and IT personnel, also require training to manage AI tools and workflows. Additionally, institutions may need to create new roles, such as clinical data scientists or AI specialists, who can act as liaisons between technical and clinical teams.

- Policy and governance: Thoughtful policies are necessary to guide the integration of AI in a way that ensures safety, ethics, and equity. This includes regulatory guidelines from bodies like the FDA on approving AI-based medical devices and software. Current frameworks are evolving - for example, the FDA is developing an adaptive algorithm policy because traditional approval processes do not account for AI systems that learn and update. Professional associations, such as the American Medical Association (AMA), have outlined principles focusing on AI transparency, oversight, and responsibility. Key policy considerations include requiring AI systems to undergo thorough clinical testing, mandating a level of explainability so that decisions can be understood and trusted, and setting standards for data quality and bias mitigation. Policies regarding liability and reimbursement are also crucial. For example, if an AI system improves care but isn’t reimbursed, its adoption will lag.

In terms of governance, hospitals will need internal AI oversight committees or frameworks. Such bodies can evaluate AI tools for safety and effectiveness, monitor outcomes, and periodically review algorithms for issues such as drift or emerging biases. They should include diverse stakeholders, such as clinicians, data scientists, ethicists, and patient representatives, to ensure a comprehensive view of AI’s impact.
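
One way an oversight committee might check for drift is to compare the distribution of a model's recent outputs with a baseline period. The sketch below uses the Population Stability Index (PSI), a common drift statistic. The bin edges and scores are synthetic, and the 0.2 alert level is a widely quoted rule of thumb assumed here for illustration, not a clinical standard.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples of model scores.

    `edges` are the inner bin boundaries; `eps` keeps empty bins from
    producing a log of zero.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of boundaries the value has reached.
            counts[sum(v >= e for e in edges)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


PSI_ALERT = 0.2  # assumed rule-of-thumb threshold

# Synthetic risk scores: validation period vs. a recent month.
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
recent = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.7, 0.85]
edges = [0.25, 0.5, 0.75]

value = psi(baseline, recent, edges)
print(f"PSI = {value:.2f}, review needed: {value > PSI_ALERT}")
```

A high PSI does not say whether the model has become less accurate, only that its inputs or outputs look different from the validation period, which is a signal for the committee to investigate.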

When strong evidence of real-world value, a well-trained workforce comfortable with AI, and robust policy and ethical guardrails come together, we will likely see AI transition from promising experiments to indispensable components of healthcare. This process has already begun, but continuing to build trust through evidence and education is essential to reaching the tipping point at which AI in medicine becomes as commonplace as stethoscopes and MRIs.

Benefits and Challenges of AI in Healthcare

Benefits of AI in Healthcare

- Improved speed and accuracy: AI systems can analyze data and images at lightning speed with high accuracy. An AI system can review hundreds of radiology images in the time it would take a human radiologist to examine just a few, and it doesn't experience fatigue. This enables faster diagnoses, which is critical in emergencies. For instance, Stanford’s CheXNet, a deep learning model, can analyze a chest X-ray for 14 types of pathology in under 90 seconds, whereas a human would take much longer to interpret complex cases. By quickly flagging potential issues, such as a tumor on a scan or an abnormal lab pattern, AI enables earlier intervention and treatment, which can significantly improve patient outcomes.

- Cost and operational efficiency: AI has the potential to make healthcare more cost-effective by automating routine tasks and optimizing workflows. Administrative tasks such as scheduling, billing, and documentation can be streamlined with AI (e.g., AI scribes that automatically transcribe and organize clinic notes). In clinical care, AI-driven predictive models can reduce unnecessary hospital admissions by identifying patients who can be managed in outpatient settings with proper support. As AI scales up, the savings could be even greater, potentially amounting to billions of dollars, by reducing duplicate tests, shortening hospital stays through better preventive care, and avoiding costly complications. This economic benefit is crucial as healthcare systems everywhere grapple with rising costs and resource constraints.

- 24/7 monitoring and support: Unlike human staff, AI doesn't sleep. AI-powered monitoring systems can watch patients around the clock without missing a thing. In intensive care units, "smart" monitors can continuously analyze vital signs and alert caregivers at the first sign of trouble, such as subtle changes that precede patient deterioration. At home, wearable sensors paired with AI can monitor chronically ill patients and send alerts if something deviates from the norm. For example, an AI system monitoring a heart failure patient’s weight and blood pressure could warn of decompensation before the patient realizes they are experiencing symptoms. This constant vigilance helps catch problems early and can prompt timely interventions. It also provides patients with a safety net, potentially reducing anxiety because they know their health is being actively monitored.

- Personalized and precision medicine: AI excels at finding patterns in complex datasets, which is a cornerstone of personalized medicine. By analyzing an individual’s unique combination of data, including genetics, medical history, lifestyle, and even data from smartphone apps, AI can help tailor prevention and treatment strategies for that person specifically. For example, it can identify which patients will respond to a new cancer drug, sparing others from experiencing its side effects if it won't be effective for them. It can also suggest the lifestyle interventions that are most likely to benefit a particular patient based on their habits and risk factors. AI can also facilitate precision surgery. For example, augmented reality with AI can guide a surgeon to a tumor with sub-millimeter precision based on the patient’s scans. Overall, this personalization results in more effective care, avoiding the trial-and-error approach - patients receive treatments more likely to work for them from the beginning rather than after multiple attempts.

- Augmenting clinical decision-making: AI can serve as a "second brain" for clinicians by providing data-driven insights that support, rather than replace, their judgment. For instance, clinical decision support systems use AI to synthesize medical literature, guidelines, and patient-specific factors, suggesting diagnostic possibilities or treatment options that a doctor might have overlooked. In complex cases, AI can provide probabilities, such as, "Given the data, there's an 85% chance this patient's chest pain is cardiac-related," which physicians can use in discussions with patients and in developing management plans. By performing computational tasks such as calculating risk scores or simulating outcomes, AI frees clinicians to focus on the human side of medicine, such as communicating with patients. Clinicians armed with AI insights can work smarter and faster, potentially improving the quality of care. As one health tech expert noted, AI won’t replace doctors, but it can improve their performance by equipping them with advanced tools and information.
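
The home-monitoring benefit described in the list above (watching a heart failure patient's weight for early signs of decompensation) can be sketched as a simple alert rule. The three-day window and 2 kg threshold are assumptions chosen for illustration, not clinical recommendations, and an AI-based system would typically replace this fixed rule with a model learned from many patients and several signals.

```python
def weight_gain_alert(daily_weights_kg, window_days=3, threshold_kg=2.0):
    """Return True if the latest weight exceeds the lowest reading from the
    previous `window_days` days by at least `threshold_kg`.

    Both defaults are hypothetical values for illustration.
    """
    if len(daily_weights_kg) < 2:
        return False  # not enough history to compare against
    latest = daily_weights_kg[-1]
    recent = daily_weights_kg[-(window_days + 1):-1]
    return latest - min(recent) >= threshold_kg


# Synthetic readings, one per day, oldest first.
print(weight_gain_alert([80.0, 80.2, 80.1, 82.3]))
print(weight_gain_alert([80.0, 80.2, 80.1, 80.4]))
```

An alert like this would notify a care team to follow up with the patient; it is a prompt for human attention, not a diagnosis.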

Challenges of AI in Healthcare

- Bias and equity concerns: AI systems are only as good as the data they are trained on. If that data contains biases or gaps, the AI can perpetuate or even amplify those biases. This is a well-documented concern in healthcare. For example, an algorithm used in some health systems to prioritize patients for additional care was found to underestimate the needs of Black patients due to the cost-based proxy used for health. As a result, Black patients were less likely to be identified for care programs than equally sick white patients. Similarly, AI models trained mostly on data from one demographic may not perform well for others. For example, an AI model that reads skin lesion images might be highly accurate for lighter skin tones but less accurate for darker skin tones if the training dataset lacks diversity. These biases can lead to disparities in care or even dangerous errors. Ensuring representative training data and incorporating fairness checks are critical steps. The industry is becoming more aware of this issue, and many developers are now testing their algorithms for bias and collaborating with institutions to collect more diverse data sets. Nevertheless, vigilance is needed to prevent "encoded" biases from harming patient care. This challenge is not just technical, but moral as well - AI should enhance health equity, not undermine it.

- Data privacy and security: As discussed earlier, AI requires large amounts of data, which increases the importance of privacy. Patients’ medical information is some of the most sensitive data. A major challenge is harnessing big data for AI without compromising confidentiality. Even with de-identified data, there’s a risk of re-identification, albeit small, especially if AI models are later attacked or if their outputs inadvertently reveal information about individuals through complex inference. Furthermore, healthcare data breaches are already a serious problem, and adding more data flows for AI could increase security vulnerabilities. A leak of health data violates privacy and can erode public trust in healthcare providers and AI technologies. Thus, strict data governance is essential, including encryption, access controls, and transparency about data use. Techniques such as federated learning, which keeps raw data at its source, and homomorphic encryption, which allows data to be analyzed in encrypted form, are promising ways to enable AI model training while keeping raw data private. Any AI project must adhere to regulatory compliance standards such as HIPAA. In summary, maintaining patient trust through robust privacy protection is an ongoing challenge. Any suggestion that AI is handling data carelessly could significantly hinder adoption.

- Black-box algorithms and lack of explainability: Many powerful AI algorithms, such as deep neural networks, are black boxes. They do not explain how they arrive at a given prediction or recommendation in a way that humans can easily understand. In medicine, this is problematic. Doctors and patients are accustomed to receiving rational explanations for decisions. For example, if an AI flags a CT scan as showing early signs of cancer, the radiologist would want to know what features the AI identified - was it a tiny nodule in the upper lobe? If the AI cannot provide that rationale, a doctor may hesitate to trust it. Furthermore, from a medico-legal standpoint, clinicians must justify their decisions, and "the computer said so" is not an acceptable justification. A lack of transparency also makes it difficult to detect when the AI might be incorrect due to an unusual quirk in the data. The challenge, then, is to develop explainable AI (XAI) - models that are inherently more interpretable or can produce explanations, such as heat maps on an image showing where the AI focused its attention. Some progress has been made; for example, there are AIs that highlight the pixels influencing their decisions on X-rays. However, interpretability often comes at the cost of accuracy. Finding the right balance between high performance and explainability is an active area of research. For AI to be fully embraced in healthcare, it must either provide reasons that clinicians can evaluate or restrict itself to roles where a lack of explanation is acceptable. Building trust will require revealing the inner workings of the AI so that human experts can validate and understand its logic.

- Skills gap and workforce training: Healthcare organizations face a skills gap on two fronts. First, clinicians need training in how to use AI tools - not just how to click buttons, but also how to understand the output in a clinical context. This is new territory for many providers. For instance, if an AI provides a doctor with a risk score indicating how likely a patient is to develop complications, the doctor needs to know how reliable the score is, how to interpret it, and how to discuss it with the patient. Currently, relatively few clinicians have formal training in data science or AI. Incorporating basic AI literacy into medical education and ongoing training is vital so clinicians feel comfortable and competent working with these tools. Second, there is a need for more data specialists in healthcare - people who can manage AI integration, validate models using the hospital's own data, and monitor performance over time. These specialists may be new hires, such as data scientists or machine learning engineers, or current IT staff who have been upskilled. Many smaller hospitals lack this expertise, creating a barrier to adoption. If an AI tool requires significant local customization or maintenance, some organizations may struggle. Although vendors are trying to simplify deployment, the human capital element is key. In short, to really leverage AI’s potential, healthcare needs to invest in people as much as technology, training the existing workforce and bringing in new talent.

- Regulatory and legal uncertainty: Navigating the regulatory landscape is challenging. The rules for AI in healthcare are still evolving. For instance, getting FDA approval for an AI diagnostic algorithm can be a lengthy process. Currently, the FDA typically treats these algorithms as medical devices. However, AI can change over time as it learns from new data. How can you regulate a non-static algorithm? The FDA has been working on a framework for "adaptive" AI that requires procedures for updates. In Europe, the forthcoming AI Act will impose stringent requirements on "high-risk" AI systems, which medical AIs certainly are, including transparency and human oversight. Compliance with these emerging regulations will be challenging and could impede innovation if not managed properly. Similarly, from a legal perspective, liability issues remain unsettled, which makes some institutions wary of widespread deployment. Until laws clearly delineate responsibilities, hospitals may limit AI assistance to an advisory role. Over time, as standards are codified and specialized insurance products for AI-related risks emerge, this barrier will ease. In the meantime, however, the uncertainty of the regulatory environment is a challenge that every healthcare AI project must contend with.
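
The fairness checks mentioned under bias and equity above can be made concrete with a simple subgroup audit: compare how often a model catches truly sick patients in each demographic group. The following is a minimal Python sketch, not a method taken from any study cited here. It assumes each record is a hypothetical (group, true label, predicted label) tuple; the function names, the group labels "A" and "B", and the toy data are all illustrative assumptions.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: true-positive rate} for (group, y_true, y_pred) records."""
    positives = defaultdict(int)  # truly sick patients seen per group
    detected = defaultdict(int)   # truly sick patients the model flagged
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                detected[group] += 1
    return {g: detected[g] / positives[g] for g in positives}


def max_sensitivity_gap(rates):
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())


# Toy, made-up predictions: (group, true label, model prediction)
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 0), ("B", 0, 0),
]

rates = sensitivity_by_group(records)
gap = max_sensitivity_gap(rates)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

In this toy example the model misses far more sick patients in group B than in group A. A gap of that size would be a signal to revisit the training data before deployment. Real audits typically look at several metrics (false-positive rate, calibration) and at confidence intervals, which this sketch leaves out.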

Future Outlook

Regulatory Trends

As AI becomes more prevalent in healthcare, regulators are working to keep up and develop guidelines that ensure safety without hindering innovation. In the United States, the Food and Drug Administration (FDA) is at the forefront of overseeing medical AI. The FDA has historically treated software that performs medical functions as "Software as a Medical Device" (SaMD), which requires evaluation and clearance. However, AI, especially machine learning models that are updated as they learn from new data, does not fit neatly into the traditional model of one-time approval. The FDA is exploring a new regulatory framework that would involve approving not only the algorithm, but also the process by which it will continually be improved and tested (a "predetermined change control plan"). We can expect more formal guidance on this soon. In the meantime, the FDA has approved over 300 AI/ML medical devices, primarily for imaging and diagnostics, and is refining how to evaluate their performance and monitor them in the real world.

In Europe, the proposed EU AI Act is poised to classify most healthcare AI systems as "high-risk," meaning they will face strict requirements for risk assessment, documentation, transparency, and human oversight. This could become law by 2025–2026. Additionally, the existing EU Medical Device Regulation (MDR) covers software, including AI-based apps, and requires evidence of clinical efficacy and safety. Regulators in other jurisdictions, such as Health Canada and agencies in Asia, are developing similar AI-specific guidelines or adapting existing medical device regulations.

Another important regulatory trend involves data governance and privacy. Recognizing that AI requires data, regulators are clarifying rules for sharing data for research and AI development. For example, HIPAA in the U.S. permits certain uses of data for healthcare operations and research with de-identification or consent. However, there is a push to update laws to better address modern data uses. Some countries are establishing frameworks for health data partnerships that allow AI models to be trained on large national datasets in a privacy-preserving manner.

Transparency mandates are also on the horizon. For example, regulators might require that AI systems provide some level of explainability, or that patients be informed when AI is involved in their care. The EU AI Act draft includes a provision about informing users when they are interacting with AI. As previously mentioned, clarifying liability is also on the agenda. For example, the UK is considering updates to its liability laws to account for decisions made by AI systems.

Overall, regulatory bodies are shifting from a hands-off approach to active oversight as AI’s influence on healthcare grows. In the near future, we will likely have a standardized approval process for medical AI, clear guidelines for monitoring AI performance after deployment, and international collaborations to harmonize standards. These changes should mitigate risks and build public trust that medical AI is held to the same high standards as drugs and traditional devices. For developers and healthcare providers, staying informed about these evolving regulations will be essential - compliance will be a core part of any AI implementation project.

Emerging Innovations: Generative AI and Federated Learning

The next wave of AI in medicine is being driven by two of the most exciting new technological innovations: generative AI and federated learning.

Generative AI, as demonstrated by large language models such as GPT-4 and image generators, has captivated the world with its capacity to produce human-like text and realistic images. In healthcare, generative AI opens up new possibilities. One immediate application is in medical documentation and data synthesis. For example, generative AI can draft clinical summaries or discharge instructions in natural language after being provided with the key points, thereby saving doctors time on paperwork. Companies are developing AI assistants for physicians that can converse with them and provide evidence-based answers to clinical questions. Essentially, these are advanced medical chatbots that can summarize the latest research or explain a condition to a patient in layman’s terms. Indeed, some large language models have scored around or above the passing threshold on medical licensing exams, highlighting how much medical knowledge they can encode. However, they can still make factual errors or "hallucinate," so caution is warranted.

Generative AI can also be applied to diagnostics. For instance, there are models that generate synthetic medical images, which could augment training data for other AIs. One could create thousands of realistic, labeled MRI images to train a radiology algorithm, including rare findings that a hospital might not have many examples of. Another diagnostic application is using generative AI to suggest diagnoses or treatment plans for complex cases, almost like an AI "consultant." Early research is exploring generative models that can generate a differential diagnosis list based on a patient’s symptoms and history or simulate how a patient’s condition might progress under different treatments.

A fascinating area is drug discovery using generative AI, which involves algorithms that can invent new molecular structures from scratch. AI is already designing novel drug candidates, but generative models take it further by exploring the chemical space for molecules that meet desired criteria. This significantly expands the range of potential drugs to test.

Federated learning, in turn, tackles the data hurdle in a practical way. As previously mentioned, federated learning enables the training of AI models across multiple datasets (e.g., from different hospitals) without centralizing the data. Each hospital computes updates to the model on its own data, and only those updates (numerical model parameters, not patient records) are sent to a central coordinator that aggregates them into a global model. No raw patient data leaves the hospital. This approach has already been piloted. For example, a federated learning project connected hospitals in different countries to train an AI model to segment brain tumors on MRI scans. This model achieved performance similar to one trained on pooled data, while keeping each hospital’s data secure. We will see more of this: collaborative networks of hospitals and research labs will train joint AI models for applications ranging from cancer detection to predicting outcomes for patients with COVID-19, all while preserving privacy. Combined with techniques like differential privacy, which adds noise to data or model updates to make re-identification mathematically improbable, federated learning will likely become standard practice in medical AI development.
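
The train-locally, aggregate-centrally loop described above can be illustrated with a small sketch. The source does not say which aggregation rule the pilot projects used; this example assumes federated averaging (a sample-size-weighted mean of the locally trained weights), one common choice. The tiny linear model, the three "hospital" datasets, and all function names are hypothetical, and the sketch leaves out differential-privacy noise and secure communication.

```python
def local_update(global_weights, local_data, lr=0.01, epochs=20):
    """Train y = w*x + b on one hospital's private data.

    Returns the new weights and the sample count; the raw data stays local.
    """
    w, b = global_weights
    n = len(local_data)
    for _ in range(epochs):
        # Gradients of the mean squared error over the local dataset
        grad_w = sum(2 * (w * x + b - y) * x for x, y in local_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in local_data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates):
    """Combine (weights, sample_count) pairs into one global model."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


# Made-up datasets held by three hospitals; every point lies on y = 2x + 1
hospitals = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(3.0, 7.0), (4.0, 9.0)],
    [(1.0, 3.0), (5.0, 11.0), (6.0, 13.0), (2.0, 5.0)],
]

global_weights = (0.0, 0.0)
for _ in range(200):  # communication rounds
    # Each hospital trains on its own data; only weights are shared
    updates = [local_update(global_weights, data) for data in hospitals]
    global_weights = federated_average(updates)

print(global_weights)  # approaches w = 2, b = 1
```

The coordinator only ever sees the pairs returned by `local_update`, never the (x, y) records themselves. In practice the shared weights can still leak information about the training data, which is why the text pairs federated learning with differential privacy.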

Another notable innovation is multimodal AI, which refers to models that can process multiple types of data simultaneously, such as images, text, lab results, and genomic sequences, to provide a more comprehensive picture. This mirrors how a clinician draws on several kinds of information when making a diagnosis (e.g., observing a patient, listening to them, and reviewing lab results). Future AI could do the same by analyzing a patient’s medical notes, radiology images, and genetic data together to provide a nuanced assessment or prediction. Early versions of these multimodal models are in development and represent an exciting frontier where AI’s pattern recognition could discover connections between different data sources that humans might overlook.

In summary, the future looks bright. Generative AI will make AI more interactive, creative, and useful for tasks such as documentation, decision support, and discovery. Federated learning and other privacy-preserving techniques will provide access to much larger training datasets by connecting institutions around the world while maintaining privacy. Combined with advances in model architectures and computing power, the next generation of medical AI will be more powerful, capable of handling multiple tasks, and trustworthy. The key will be to thoughtfully integrate these innovations into clinical practice, ensuring that they solve real problems and undergo rigorous evaluation.

The Role of AI Software Development Companies in Shaping Healthcare

While much of the focus is on algorithms and data, one often-overlooked aspect is who actually builds and implements these AI solutions. Specialized technology partners — essentially, healthcare software companies with AI expertise — play a pivotal role here. Most hospitals and healthcare organizations lack large in-house teams of AI researchers and developers, so they collaborate with external companies to develop and integrate custom solutions into existing systems. As AI becomes more central to healthcare, these partnerships become increasingly crucial.

Many healthcare providers are turning to AI software development solutions offered by tech firms to assist with their digital transformation. These companies provide a combination of software engineering expertise, AI/ML proficiency, and knowledge of the healthcare industry, including familiarity with regulations such as HIPAA and interoperability standards. They work closely with clinicians to identify issues that AI can address, then create customized applications to solve them. For example, a hospital could work with an AI software company to develop an AI-powered radiology workflow system that integrates with its PACS (picture archiving and communication system). The company would handle the complex tasks of model development, user interface design, integration, testing, and maintenance.

An AI app development company, such as Intersog, can provide end-to-end artificial intelligence development services for a healthcare client. This could involve consulting on strategy, building and training models, and deploying the final product as a user-friendly app or platform for doctors and patients. These development companies often employ data scientists, software engineers, UI/UX designers, and compliance experts who ensure that the AI solutions are effective and meet the stringent requirements of the healthcare industry. They also stay on top of the latest AI research so they can incorporate state-of-the-art techniques, such as a new natural language processing (NLP) model for analyzing clinical text, into the product they're building for a client.

Another role of these companies is adapting AI tools to each organization's specific workflows. A solution that works out of the box for one hospital may require adjustments for another due to different IT environments or user preferences. By developing customizable solutions, these companies ensure that AI tools are adopted and used rather than gathering dust on a shelf. They often provide on-site training sessions and ongoing support, acting as long-term innovation partners for healthcare organizations.

Furthermore, as new innovations like generative AI and federated learning emerge, these development partners will bring them to market in practical forms. They serve as the bridge between cutting-edge AI research and real-world healthcare implementation. Many startups and established tech firms in the medical AI sector follow this model — they develop a specific AI solution, such as an AI diagnostics platform, and then collaborate with various healthcare providers to integrate it.

These collaborations give frontline healthcare workers a say in how AI tools evolve, because development companies gather requirements and iterate based on user feedback. As AI proliferates, best practices and successful solutions can also spread more quickly through these companies, which deploy similar solutions across dozens of hospitals and refine the generalizable features each time.

Essentially, AI provides the intelligence, and AI development companies provide the capability to implement that intelligence in the complex environment of healthcare. Their role is likely to expand, with some acting as full-service partners that handle everything from data infrastructure to AI analytics for hospitals. The most successful outcomes appear to occur when healthcare institutions form strong, trust-based partnerships with tech experts, combining medical domain knowledge with technological prowess. As we move into the next phase of AI in medicine, these teams are ensuring that the theoretical benefits of AI turn into tangible improvements in care delivery.

Conclusion

Artificial intelligence in medicine has evolved from a speculative concept to a tangible reality, offering significant opportunities and critical challenges. We’ve seen AI technologies augment doctors in various ways, including algorithms that detect diseases on scans with superhuman accuracy, predictive models that identify early warning signs of illness, and virtual assistants that expand healthcare access beyond clinic walls. These innovations offer a glimpse into a future of healthcare that is faster, more precise, and more personalized. The potential benefits are profound: earlier diagnoses, treatments tailored to individuals, streamlined workflows that give clinicians more time with patients, and accelerated drug discoveries for conditions once deemed intractable.

However, realizing these benefits widely and safely will require significant effort. It requires addressing the challenges we’ve discussed head-on. AI systems must be developed and deployed with rigorous attention to ethics and fairness, minimizing biases, protecting patient privacy, and maintaining transparency so clinicians and patients can trust AI-driven insights. Proper governance and oversight are essential. As one expert aptly said, "In healthcare, change moves at the speed of trust." Building that trust requires thoroughly validating AIs in real clinical settings, keeping humans involved in critical decision-making processes, and establishing clear accountability and regulatory frameworks for when things go wrong.

Clearly, AI is not a magic wand that can solve all problems. Successful integration of AI into medicine requires substantial human effort, including training clinicians to work effectively with new tools, updating processes, and ensuring technology supports the patient-doctor relationship. Interdisciplinary collaboration will be essential. Data scientists, software engineers, ethicists, and healthcare professionals must continue to work closely together (often through partnerships with specialized tech firms) to develop AI solutions that improve care quality and patient outcomes.

Looking ahead, there is reason for cautious optimism about the future of AI in medicine. We can expect regulatory clarity to improve, providing guardrails that encourage responsible innovation. Emerging techniques like generative AI and federated learning will likely make AI systems more powerful and, by safeguarding privacy, more appealing. Most heartening of all, the narrative is shifting from "Will AI replace doctors?" to "How can AI help clinicians and patients?" The focus is moving from replacement to augmentation and to solving real-world problems. If we continue on this path, balancing innovation with ethics and governance, AI will become an invaluable ally in the medical field. AI’s journey in healthcare is just beginning. The road ahead will involve ongoing learning and adaptation, but the destination - a smarter, more efficient, and more personalized healthcare system - promises to be well worth the effort. By embracing opportunities, proactively managing challenges, and keeping patient well-being at the center of every development, we can ensure this AI-driven evolution in medicine fulfills its potential to save lives and improve health for all.
