In 2025, almost every major breach has one thing in common - human error. Cyber incidents are making headlines worldwide, and a stunning statistic underpins them: roughly 95% of cybersecurity breaches are caused by human mistakes. This means that, despite all the firewalls, encryption and AI-based defences, it is often a simple slip-up by an employee or user that is the root cause of the breach. Why does this happen? People click on convincing phishing emails, use weak passwords, misconfigure cloud storage or forget to apply critical patches, thereby inadvertently opening the door to attackers.

Human fallibility has always been a factor in security, but the stakes are higher than ever before. One careless click or oversight can render millions of dollars' worth of security technology ineffective and lead to major data leaks or ransomware shutdowns. For instance, an employee falling for a phishing scam or an IT administrator leaving a cloud database unsecured could result in personal records being exposed or servers being encrypted. The good news is that, since human errors are predictable and preventable, they can be addressed. By identifying the common errors that lead to breaches and implementing robust training, processes and technologies, organisations can significantly reduce their risk.

In this article, we’ll explore why humans are “the weakest link” in cybersecurity and how to turn that around. We’ll examine the data behind the 95% figure, the most frequent errors (from phishing emails to misconfigured servers), and real-world breach examples that cost companies millions. Crucially, we’ll discuss practical strategies to prevent these mistakes - from better security awareness training and Zero Trust architectures to multi-factor authentication (MFA) and automated security tools. The goal is to help business leaders and IT managers foster a culture and infrastructure of security that balances cutting-edge tech with informed, vigilant people.

By the end, it will be clear that while people cause most breaches, people - empowered with the right knowledge and tools - are also the key to preventing them. Cybersecurity in 2025 is a shared responsibility, and with the right approach, your organization can greatly strengthen its human defenses.

The Numbers Behind the “95%”

It’s not an exaggeration: the vast majority of breaches truly stem from human lapses. This was first quantified by IBM in 2014, when an intelligence report found that 95% of all security incidents involved human error. In other words, only 1 in 20 breaches was caused purely by technology failures or zero-day attacks - the rest had a preventable human element. Fast forward to today, and multiple studies confirm the same pattern. The World Economic Forum notes that 95% of cybersecurity issues can be traced to human mistakes. A 2025 Mimecast report likewise found 95% of breaches involved human error - underscoring that human mistakes surpassed technological flaws as the top cause of breaches.

It’s important to clarify what “human error” means in this context. Generally, it refers to unintentional actions or omissions by users that enable a breach. This includes mistakes like clicking on a malicious link, misconfiguring a server, using a weak password, or sending sensitive data to the wrong recipient. These are accidental insider threats - the employee isn’t trying to cause harm, but their slip-up creates the vulnerability. This is distinct from malicious insider threats, where a disgruntled staff member or contractor intentionally steals data or sabotages systems. Those do happen, but they are far less common than inadvertent mistakes. (In fact, research shows 63% of “insider” incidents are due to employee negligence, not malice.) In short, when we say human error causes 95% of breaches, we mean everyday people unwittingly doing something that lets attackers in - rather than rogue employees or purely technical exploits.

Why do human errors dominate so heavily? Put simply, attackers find it easier to exploit people than to overcome cutting-edge technology. Tricking a user with a convincing phishing email or persuading someone to reuse a stolen password is often the easiest option for hackers. This bypasses the need for expensive security hardware and software, as it targets the one thing that cannot be patched with a software update: human judgement. As IBM’s 2025 Security Report emphasises, even as cyber defences improve, the human factor remains a critical vulnerability. This is why CISOs around the globe identify human error as their greatest risk and why security strategies now focus as much on user behaviour as on networks and devices.

To tackle this, organizations need to recognize that cybersecurity isn’t just a tech issue - it’s a human issue. The following sections will break down the most frequent human errors leading to breaches and how we can mitigate each one. By addressing the human element head-on, companies can close the gap that’s responsible for the lion’s share of cyber incidents.

The Most Common Human Errors

Not all mistakes are equal - a handful of specific human errors cause the bulk of breaches. Here are the top culprits to watch for (with recent stats and examples for each):

1. Phishing & Social Engineering

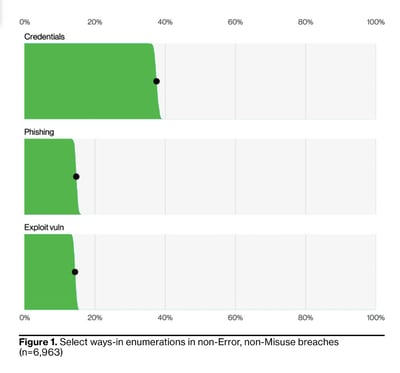

Phishing - fraudulent messages that trick people into divulging credentials or downloading malware - remains the number-one path to breaches. In the 2020s, cybercriminals have perfected the art of phishing, using psychology and even AI to create convincing lures. The result is a record number of phishing attacks and many victims. By 2024, 96% of organisations had reportedly experienced at least one phishing attack, with phishing implicated in a significant proportion of breaches. Verizon's data indicates that phishing and pretexting (e.g. business email compromise scams) are the primary cause of incidents, accounting for 73% of breaches in certain sectors. Overall, Verizon noted in 2023 that 74% of breaches included a social engineering component such as phishing. In short, if an attack involves humans, it often begins with phishing.

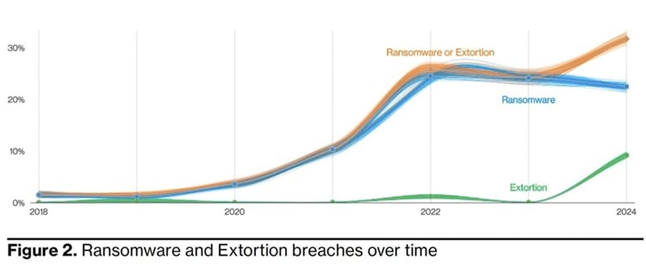

Modern phishing isn’t limited to “Nigerian prince” emails. It includes spear phishing (targeted, personalized emails), vishing (voice calls), smishing (text messages), and more. Attackers impersonate trusted brands, colleagues or authorities in order to lower users' guard. For instance, in 2023, a campaign spoofed Microsoft Teams messages to steal passwords. Another high-profile case was the MGM Resorts breach, also in 2023, where hackers called the IT help desk impersonating an employee. That single social engineering call gave them access to the company's systems, leading to a $100 million ransomware attack on the casino giant. This demonstrates how phishing can extend beyond email and the potentially devastating consequences of a successful attack.

Why do people fall for it? Attackers exploit curiosity, fear, a sense of urgency, and authority. They might masquerade as an urgent request from the CEO, an account security alert or a lucrative job offer - anything to prompt a quick, unthinking click. New tools make phishing emails more convincing than ever. In fact, it is estimated that 80% of phishing emails are now AI-generated in order to refine language and evade detection. With deepfake voice calls and AI-written emails, social engineering is becoming increasingly sophisticated. In short, phishing is the main cause of breaches resulting from human error, so anti-phishing training and technical controls are absolutely critical (more on that in the prevention section).
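One technical control that complements training is flagging sender domains that closely imitate a trusted one. The following is a minimal sketch of that idea, not a production filter: the allow-list, the 0.85 similarity threshold and the function names are all illustrative assumptions, and it only handles bare addresses of the form user@host.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical allow-list: domains this organisation genuinely corresponds with.
TRUSTED_DOMAINS = {"microsoft.com", "example-bank.com"}


def sender_domain(address: str) -> str:
    """Extract the lower-cased domain from a bare address like user@host."""
    return address.rsplit("@", 1)[-1].strip().lower()


def lookalike_of(domain: str, threshold: float = 0.85) -> Optional[str]:
    """Return the trusted domain that `domain` closely imitates, else None."""
    if domain in TRUSTED_DOMAINS:
        return None  # exact match: genuinely trusted, nothing to flag
    for trusted in sorted(TRUSTED_DOMAINS):
        similarity = SequenceMatcher(None, domain, trusted).ratio()
        if similarity >= threshold:
            return trusted  # close but not identical: likely impersonation
    return None


if __name__ == "__main__":
    for sender in ("alerts@microsoft.com", "alerts@rnicrosoft.com"):
        hit = lookalike_of(sender_domain(sender))
        print(sender, "->", f"looks like {hit}" if hit else "no lookalike found")
```

A check like this would typically add a warning banner rather than block the message outright, since string similarity alone produces false positives and misses lures sent from entirely unrelated domains.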

2. Weak or Reused Passwords

Despite years of warnings, poor password practices continue to compromise security. Many breaches occur when an attacker guesses, cracks or reuses a password set by an authorised user. Stolen credentials are now the number one method of breaching security, even surpassing phishing in some reports. Verizon found that, over the past decade, 31% of breaches involved stolen or weak passwords. Why is this figure so high? Users often choose passwords that are easy to remember (and easy to hack) or reuse the same password across multiple accounts. If one site is breached, the stolen passwords are sold on the dark web, where hackers can use them to access other accounts. In 2024 alone, more than 2.8 billion compromised passwords were posted on criminal forums, ready and waiting for attackers to use.

It only takes one reused password to undermine an organization’s defenses. Consider the 2019 Capital One breach: a hacker exploited a cloud misconfiguration to obtain credentials and used them to access 100 million credit applications. Or the 2021 Colonial Pipeline incident, where attackers used a leaked password (with no MFA protection) to log in remotely and deploy ransomware, forcing a fuel pipeline shutdown. These incidents highlight how weak authentication is a root cause of many high-profile hacks. In fact, a recent analysis noted that compromised credentials featured in more breaches than exploited software vulnerabilities. Attackers either phish the password, buy it, or use techniques like password spraying (trying a few common passwords against many accounts) to break in.

The problem of bad passwords is enormous. Surveys reveal that 23% of small-to-medium business (SMB) employees use passwords that are easy to guess, such as a pet’s name or simple number patterns. Even worse, industry estimates suggest that 65% of people reuse passwords across sites, meaning that a breach of one service can lead to breaches of others. Default and blank passwords in IT systems also persist. These weak credentials are an easy target for attackers and account for countless 'no-hack-required' breaches. For example, in 2023, a series of attacks occurred on Microsoft Exchange servers simply because thousands of administrators had not changed the default passwords or applied patches.

Although password management may seem basic, its impact on security is huge. Verizon's 2024 report revealed that breaches involving stolen credentials had increased by 180% compared to the previous year. Conversely, proper password hygiene and multi-factor authentication can prevent this entire type of attack. Microsoft found that MFA (a second authentication factor) defeats 99% of bulk credential-stuffing attacks. Similarly, Google reported that requiring physical security keys for logins stopped phishing from succeeding against its workforce: after switching 85,000 employees to hardware MFA, it reported no account takeovers due to phishing. These statistics emphasise that weak or stolen passwords are a common cause of human-error breaches, but that the problem can be solved with better practices and tools.
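To make the "second factor" concrete, here is a minimal sketch of how a time-based one-time password (TOTP, the scheme behind most authenticator apps) can be generated and checked using only the Python standard library. It follows the published RFC 6238 algorithm with HMAC-SHA1; the function names and the one-step drift window are illustrative choices, and a real deployment would rely on a vetted library plus rate limiting rather than this sketch.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a TOTP code (RFC 6238, HMAC-SHA1) for the given moment."""
    moment = time.time() if at is None else at
    counter = int(moment // step)  # number of elapsed time steps
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset (RFC 4226)
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           window: int = 1, step: int = 30) -> bool:
    """Accept the code for the current step or `window` steps either side."""
    moment = time.time() if at is None else at
    for drift in range(-window, window + 1):
        expected = totp(secret, moment + drift * step, step)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False


if __name__ == "__main__":
    shared_secret = b"12345678901234567890"  # RFC 6238 test secret, not for real use
    print(totp(shared_secret, at=59, digits=8))  # RFC test vector: 94287082
```

Because the code changes every 30 seconds and depends on a secret that never leaves the user's device, a phished or reused password is not enough on its own to log in. Note that TOTP codes can still be relayed by a real-time phishing page, which is why the hardware keys mentioned above offer stronger protection.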

3. Misconfigured Cloud Services

As companies have transitioned to cloud-based systems, a new form of human error has emerged: misconfiguration of cloud resources. Although cloud providers such as AWS, Azure and Google Cloud offer powerful features, if IT staff or developers set them up incorrectly, sensitive data can be left exposed to anyone on the internet. Misconfigured storage buckets, databases or access controls are one of the main causes of data breaches in recent years. One analysis found that over half of all cloud breaches were partly due to human error in configuration. Gartner famously predicted that, by 2025, 99% of cloud security failures would be the customer’s fault, primarily due to misconfiguration. Unfortunately, this prediction has proven to be accurate.

There have been numerous real-world examples of this mistake.

In each case, no hacker 'broke in' - the data was left unlocked due to setup errors. The result is a total loss of confidentiality, often affecting thousands or even millions of records. Regulators can impose hefty fines for such breaches (under GDPR, for example), and organisations suffer reputational damage for failing to safeguard data.

Cloud misconfigurations extend beyond storage buckets. Examples include not enabling authentication on a database, using the default 'open' settings on virtual machines (VMs) or containers, setting up improper network access control lists (ACLs), and mismanaging encryption keys. Even a minor configuration error can have significant consequences. For example, Pegasus Airlines, a Turkish airline, had an open test environment in 2022 that exposed 6.5 million records. The massive Capital One breach in 2019 was partly due to a misconfigured web application firewall in their AWS environment, which an attacker exploited to access S3 data, resulting in $150 million in remediation costs and fines.

The data shows this is widespread: 44% of companies report having suffered a cloud data breach due to human error (misconfigured settings). Similarly, IBM's 2025 report states that cloud misconfigurations have been a primary cloud security concern for over a decade. This is easy to understand: cloud consoles and policy languages can be complex, and IT teams may lack the necessary visibility or expertise. Under the shared responsibility model, the cloud provider secures the infrastructure, but customers must securely configure their own applications and data - a task with which many struggle. As cloud footprints grow, so does the risk of critical information being exposed by an overlooked setting.
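Many of these exposures can be caught by automatically reviewing access policies before they go live. The sketch below assumes a policy document in the general shape of an AWS-style JSON bucket policy (a `Statement` list with `Effect`, `Principal` and an optional `Condition`) and flags statements that allow access to everyone. The bucket names and the rule that any `Condition` makes a statement acceptable are simplifying assumptions for illustration; it makes no cloud API calls.

```python
from typing import Any, Dict, List


def _as_list(value: Any) -> List[Any]:
    """Policies allow a single item or a list; normalise to a list."""
    return value if isinstance(value, list) else [value]


def is_wildcard_principal(principal: Any) -> bool:
    """True if the principal means 'anyone', e.g. "*" or {"AWS": "*"}."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return any("*" in _as_list(v) for v in principal.values())
    return False


def public_statements(policy: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return Allow statements open to everyone with no restricting Condition."""
    findings = []
    for statement in _as_list(policy.get("Statement", [])):
        if (statement.get("Effect") == "Allow"
                and is_wildcard_principal(statement.get("Principal"))
                and not statement.get("Condition")):
            findings.append(statement)
    return findings


if __name__ == "__main__":
    # Hypothetical policy: the first statement exposes every object publicly.
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
             "Action": "s3:GetObject",
             "Resource": "arn:aws:s3:::example-bucket/*"},
            {"Sid": "TeamOnly", "Effect": "Allow",
             "Principal": {"AWS": "arn:aws:iam::111122223333:role/analytics"},
             "Action": "s3:GetObject",
             "Resource": "arn:aws:s3:::example-bucket/*"},
        ],
    }
    for finding in public_statements(policy):
        print("Publicly accessible:", finding["Sid"])
```

Running a check of this kind in a deployment pipeline turns an easy-to-miss console setting into a failed build that someone has to consciously override.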

4. Unpatched Systems & Outdated Software

Another common human error that remains a leading cause of data breaches is failing to apply software updates and security patches promptly. When a vulnerability is disclosed in operating systems, applications or firmware, attackers race to exploit it. If organisations delay patching, they essentially put out a welcome mat for intruders. 'Unpatched vulnerability' breaches are essentially human-caused because the necessary fixes were available but not implemented in time. Unfortunately, this scenario is all too common. A Sophos report found that 32% of cyberattacks in 2024 started with an unpatched security vulnerability being exploited. Another study by Automox revealed that unpatched software was directly responsible for around 60% of all data breaches. In other words, more than half of all breaches could potentially be prevented by applying updates in a timely manner.

High-profile attacks demonstrate the consequences of neglecting security updates.

These cases highlight a painful truth: failing to apply patches promptly is a serious mistake that hackers exploit ruthlessly.

Even well-publicised critical bugs often remain in systems long after fixes are released. A striking example of this is Log4j (Log4Shell), a severe vulnerability that was revealed in late 2021. Even over a year later, scans showed that 38% of Log4j users were still running vulnerable versions, meaning that they had not applied the updated library. Similarly, months after the issue was raised, over 40% of all Log4j downloads were of outdated, vulnerable releases. This highlights the persistence of patching inertia. Reasons for this vary: fear of breaking systems, concerns about downtime, or simply poor asset management leading to missed updates. However, attackers exploit these delays. In 2023, 'old' vulnerabilities continued to be exploited - there was a 10% increase in the exploitation of CVEs from several years ago, even as new bugs emerged.

The bottom line: not keeping software up-to-date is a critical human-driven risk. This effectively invites threat actors to exploit known vulnerabilities. Ransomware groups, for example, often target unpatched servers (e.g. VPN appliances or database servers) by scanning for them. The 2023 DBIR noted that the exploitation of known vulnerabilities had increased significantly, accounting for 14% of breaches that year - a clear sign that delays in applying patches are having an impact.
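Poor asset management is one of the reasons patches get missed, so even a simple inventory comparison helps. The sketch below assumes you can export, per host, the installed version of each package, and that you maintain a table of the minimum version containing the relevant fix. The host names, package names and version numbers are hypothetical placeholders, and the parser only handles plain dotted numeric versions.

```python
from typing import Dict, List, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '2.17.1' into (2, 17, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical table: minimum version of each package that contains the fix.
MIN_FIXED = {"logging-lib": "2.17.1", "vpn-appliance": "9.1.4"}


def outdated(inventory: Dict[str, Dict[str, str]]) -> List[Tuple[str, str, str, str]]:
    """List (host, package, installed, required) for every unpatched install."""
    findings = []
    for host, packages in inventory.items():
        for name, installed in packages.items():
            required = MIN_FIXED.get(name)
            if required and parse_version(installed) < parse_version(required):
                findings.append((host, name, installed, required))
    return sorted(findings)


if __name__ == "__main__":
    # Hypothetical inventory export.
    inventory = {
        "web-01": {"logging-lib": "2.9.0"},
        "web-02": {"logging-lib": "2.17.1", "vpn-appliance": "9.0.12"},
    }
    for host, name, installed, required in outdated(inventory):
        print(f"{host}: {name} {installed} is older than {required}")
```

Comparing versions as tuples of integers matters: as plain strings, "2.9.0" would sort after "2.17.1" and the vulnerable host would be missed.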

5. Accidental Data Sharing and Mishandling

Not all data breaches are the result of hackers breaking in. A significant proportion stem from employees accidentally exposing or sharing data with unauthorised parties. These 'oops' moments include emailing a spreadsheet to the wrong person, accidentally sending a file to a public Slack channel, losing an unencrypted USB drive or misplacing a laptop. Such incidents are classified as human error breaches (often labelled as 'miscellaneous errors' in reports) and occur with alarming frequency. In fact, misdirected emails are the most commonly reported data security incident to regulators in many regions. One study found that 60% of organisations experienced data loss or exfiltration caused by an employee’s email error in a single year. In other words, more than half of companies experienced an incident where someone sent sensitive information they shouldn’t have.

Consider the following scenarios. An employee accidentally attaches the wrong file (containing client PII) when emailing a vendor. Another uses a personal cloud drive to transfer work documents and forgets to set the correct permissions, leaving the files publicly accessible. A salesperson CCs all customers in an email instead of BCCing them, exposing everyone’s contact information. Such unintentional data leaks can violate privacy laws and lead to breaches that are just as damaging as an external hack. For example, the UK’s ICO reported in 2020 that 90% of cyber data breaches in the UK were caused by human error, with mis-sent emails being a particular issue. More recently, the use of collaboration tools has become a growing area of concern, as employees sharing files over platforms such as Teams, OneDrive or Dropbox increase the risk of accidentally sharing a link publicly or with the wrong group. Mimecast's 2025 survey revealed that 43% of companies experienced an increase in internal data leaks due to employee errors, particularly with the increased use of collaboration apps.

Accidents involving insiders can also facilitate external attacks. A classic example is leaving a sensitive document on a public website or in an open AWS bucket, which overlaps with the misconfiguration issue - attackers regularly scan for such exposed data. Similarly, an employee forwarding company data to a personal email account or copying it to a personal device (in violation of company policy) could inadvertently expose it to less secure environments where attackers can access it. According to research by the Ponemon Institute, 65% of IT professionals say that email is the riskiest channel for data loss, followed by cloud file sharing (62%) and messaging (57%). This aligns with the fact that employees often do not realise how sensitive the data they handle is: 73% of organisations are concerned that their staff do not understand what data they can or cannot share via email.

The consequences of accidental data leaks can range from embarrassment and loss of trust to hefty fines. For example, misdirected emails have resulted in hospitals revealing patient identities and law firms leaking client strategies, resulting in regulatory penalties. One notable case involved a bank employee who accidentally emailed a file containing the account details of thousands of customers to a random Gmail address, resulting in a breach that had to be reported. These examples demonstrate that not all data breaches are sophisticated cyberattacks; many are simple human errors. Preventing such breaches requires better user awareness to ensure that recipients are checked and files are encrypted, as well as technical safeguards such as DLP (data loss prevention) tools that can detect and block mistakes.
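As a rough illustration of what a DLP-style safeguard does, the sketch below inspects an outgoing message before it is sent and returns warnings for two of the mistakes described above: many external recipients left visible in To/CC, and payment-card-like numbers going to an external address. The internal domain, the recipient limit and the function names are assumptions made for this example; commercial DLP tools use far richer detection.

```python
import re
from typing import List

INTERNAL_DOMAIN = "example.com"   # hypothetical company domain
MAX_VISIBLE_EXTERNAL = 5          # illustrative limit before suggesting BCC
CARD_LIKE = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum: filters out most digit runs that are not card numbers."""
    digits = [int(ch) for ch in candidate if ch.isdigit()]
    if not 13 <= len(digits) <= 16:
        return False
    total = 0
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:      # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def check_outgoing(to: List[str], cc: List[str], body: str) -> List[str]:
    """Return human-readable warnings; an empty list means nothing was flagged."""
    warnings = []
    suffix = "@" + INTERNAL_DOMAIN
    external = [addr for addr in to + cc if not addr.lower().endswith(suffix)]
    if len(external) > MAX_VISIBLE_EXTERNAL:
        warnings.append(
            f"{len(external)} external recipients are visible to each other; use BCC?"
        )
    if external and any(luhn_valid(match) for match in CARD_LIKE.findall(body)):
        warnings.append("Message to an external address appears to contain a card number")
    return warnings


if __name__ == "__main__":
    customers = [f"customer{i}@customer.test" for i in range(6)]
    for warning in check_outgoing(customers, [], "Our spring newsletter is out."):
        print("WARNING:", warning)
```

Prompting the sender to confirm, rather than silently blocking, keeps the check usable while still interrupting the "send without thinking" moment that causes most misdirected emails.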

Why Human Error Is So Prevalent

Given the stakes, why do human errors continue to abound in cybersecurity? There are several underlying reasons why well-intentioned people so often slip up:

1. Information Overload, Stress & Decision Fatigue: Modern workers are bombarded with emails, messages and alerts all day long. When under pressure or facing tight deadlines, they may click 'approve' or 'open' without exercising their usual caution. Attackers exploit this. Studies show that employees under time pressure are three times more likely to fall for phishing. Fatigued or distracted users tend to prioritise convenience, such as reusing a password or ignoring a security prompt, just to get the job done. After hours of constant notifications, security warnings simply become more noise. This cognitive overload erodes the diligence needed to spot scams or follow security procedures. For example, late on a Friday, a tired employee might bypass a policy and email a document to their personal account so they can work from home, failing to consider the risk. Alternatively, they might click on a phishing link about an 'urgent invoice' while multitasking on a busy morning. Attackers count on these moments of human weakness. They often send phishing emails at lunchtime or towards the end of the day, when people's vigilance tends to wane. In short, work-related stress and decision fatigue increase the likelihood of errors.

2. Lack of Training and Overconfidence in Technology: Security awareness training for staff is often inadequate or infrequent. Many organisations still use a slide deck once a year and consider the matter closed. Without regular, engaging education, employees may not recognise the latest phishing tactics or understand how serious a single mistake can be. Compounding this issue is a certain degree of overconfidence, both on the part of employees and with regard to the protective tools available to them. A recent KnowBe4 survey found that 86% of employees were confident that they could spot a phishing email, yet nearly half of them had fallen for one. This 'I won't be fooled' attitude is dangerous as it leads to complacency. Similarly, some companies rely too heavily on security technology and assume that users don't need to consider security ("our spam filter will catch it" or "our IT team will handle patches"). This mindset can lead to negligence. For example, an employee might think that they don't need a complex password because they use a VPN, which they believe to be secure. In reality, that weak password could be cracked and used to breach the VPN. Only around 37% of businesses evaluate the security of new AI tools before deploying them, which indicates that users often trust these tools blindly. The combination of insufficient training and misplaced confidence in technology can leave organisations with a false sense of security until an error triggers an incident.

3. Remote Work and Expanding Attack Surfaces: The shift to remote and hybrid working, which was accelerated by the pandemic, has fundamentally changed the security equation. When employees are working from home, they are more likely to blur the line between personal and professional activities. Home networks and personal devices are not as secure as corporate ones, yet remote staff may still use them for work (a 'Bring Your Own Device' - BYOD - culture). They may save work files on a home PC or use personal email or messaging apps to send information, especially if it is more convenient. This greatly increases the likelihood of errors and data leaks. Indeed, during the surge in working from home, many companies experienced spikes in incidents: 60% of firms reported that employee negligence via email had caused data loss in the past year, with usage of personal accounts being a particular concern. Furthermore, the increasing use of cloud collaboration tools means that employees are sharing data in many ways beyond the traditional monitored corporate email. Examples include Slack, Google Drive and Zoom chat. Each of these tools is a potential avenue for error or social engineering if not properly secured. Meanwhile, attackers exploit remote working by crafting lures related to WFH logistics or by targeting home IT setups. For example, a phishing email may appear to come from 'IT Support' and ask a remote worker to install a VPN update (which is actually malware). Remote working also makes it more difficult for IT departments to enforce updates and policies, resulting in more unpatched machines outside the firewall. Overall, the expanded digital workspace has multiplied opportunities for human error.

4. Attackers Exploit Human Psychology: Hackers are essentially social engineers, adept at manipulating human emotions and biases. They leverage a range of psychological tricks: fear (e.g. “Your account will be disabled, act now!”), urgency (“Invoice overdue, pay immediately!”), authority (“This is your CEO, wire $50k now.”), curiosity (“See attached bonus schedule”), or sympathy (charity scams). They also prey on cognitive biases - for instance, people’s tendency to trust messages that look professional or to click links from senders they think they know. As one security expert noted, cybercriminals exploit more than 30 different susceptibility factors in humans - from cognitive biases to situational awareness gaps. A simple example: an employee might be less vigilant when using a smartphone versus a PC, so attackers send mobile-friendly phishing texts knowing a smaller screen and on-the-go context make scrutiny harder. Moreover, new technologies like deepfakes are emerging to dupe people - e.g. an AI-generated voice message that sounds like your boss instructing you to take an action. In a world of information overload, humans rely on mental shortcuts, and attackers take advantage of those shortcuts. This is why even well-trained users can slip up; a cleverly crafted phishing email might bypass their analytical thinking and trigger a quick, emotional response. Attackers study human behavior as much as software code, constantly adjusting their tactics to find the chinks in our psychological armor. Until we address the human factors with equal sophistication, errors will continue.

In summary, human error remains prevalent due to human nature and modern working conditions. People are busy and fallible, and they are also targets of professional manipulators. They often lack sufficient guidance and may underestimate the risks they face. Understanding these root causes is half the battle - it helps us to identify where to focus our solutions. Next, we’ll look at how businesses and individuals can counteract these tendencies specifically and significantly reduce the likelihood of an error resulting in a breach.

Real-World Examples of Human Error Breaches

To illustrate how human errors play out in practice, let’s look at a few real-world breach examples. These cases show the chain reaction from a simple mistake to a major incident, reinforcing the need for preventative measures:

Misconfigured Cloud Bucket - Capita (2023): Capita, a major outsourcing firm in the UK, suffered a significant data leak due to a misconfigured AWS S3 storage bucket. The bucket, which was used to store internal data, was left publicly accessible without proper authentication. As a result, sensitive information belonging to local government councils and their residents was exposed to anyone who knew the URL. Although security researchers discovered the leak before cybercriminals did, a trove of personal data had already been exposed. The incident forced Capita to notify clients and regulators, and the company is reportedly facing potential fines. This breach exemplifies how a cloud configuration error can lead to serious data exposure. The solution was straightforward (securing the bucket), but the human error went unnoticed until the data had already been left open. Capita's experience highlights the importance of rigorous cloud configuration reviews and the high cost of a single misstep in cloud settings. It has also prompted other organisations to double-check their S3 and Azure Blob permissions - nobody wants to be the next headline for an 'open database' leak.

Phishing & Social Engineering - MGM Resorts (2023): In September 2023, MGM Resorts (the company behind famous Las Vegas casinos such as the MGM Grand and the Bellagio) fell victim to a crippling cyberattack that began with a seemingly innocuous phone call. A hacking group called 'Scattered Spider' found an MGM IT employee's details on LinkedIn, then called the MGM help desk, impersonating that employee. Using some personal details and social engineering finesse, the attackers convinced the support staff to reset the employee’s account credentials, giving the hackers a foothold in the system. They then escalated their privileges within MGM’s network and eventually deployed ransomware that shut down hotel operations for days - slot machines stopped working, digital room keys failed and the reservation and payment systems went offline. Guests stood in long lines while staff reverted to using pen and paper. MGM had to notify regulators and law enforcement. In an SEC filing, MGM disclosed that this incident resulted in an estimated loss of $100 million in Q3 2023 due to remediation costs and business downtime. The company is also now facing lawsuits over the breach. Astonishingly, all that damage can be traced back to a single help desk employee being duped by a phone scam. This example vividly demonstrates how a social engineering attack - essentially a phishing attack via voice (vishing) - can exploit human trust to bypass technical controls. It also highlights the importance of employee verification procedures, even for internal support. Around the same time, Caesars Entertainment, another casino giant, was hit by a similar attack and chose to pay a ransom of around $15 million to avoid an MGM-style fallout. These casino breaches show that even large enterprises with state-of-the-art security systems can be brought down by targeted human deception.

Ransomware via a Single User Action - CNA Financial (2021): CNA Financial, one of the largest insurance companies in the U.S., provides a cautionary tale of how one wrong click can cost millions. In March 2021, the company fell victim to a ransomware attack dubbed 'Phoenix Cryptolocker'. The initial intrusion appears to have occurred when an employee opened a malicious email attachment or link (reports suggest that a fake browser update was used as bait). Once inside the system, the attackers escalated their access and deployed ransomware that encrypted CNA’s entire network, taking the business offline. The situation was so dire that CNA ultimately paid a ransom of $40 million in the hope of quickly restoring operations, one of the largest ransomware payments known to date. Although paying is not recommended (and is often ineffective), CNA evidently felt it had no other way to protect its data and customers. The incident highlights how executing a single file can unleash a company-wide crisis. In CNA’s case, an employee appears to have fallen for a lure carrying malware, leading to a major financial and reputational hit. Even employees who have received phishing training can click on the wrong thing in a distracted moment, which is why constant awareness matters. The CNA attack also illustrates the rising stakes of ransomware and the direct contribution of human error: the 'accidental insider' who triggers the ransomware is a necessary link in the kill chain.

These examples - and sadly, many more like them - drive home a few points. First, small mistakes can have outsize consequences: one mis-set checkbox in a cloud console, one gullible moment on a phone call, or one click on the wrong email can snowball into multi-million-dollar breaches. Second, attackers will target the human element from every angle - via email, phone, or by simply taking advantage of neglected security hygiene. Third, organizations that respond quickly (like Caesars, which paid the ransom, or others that promptly fix misconfigurations) may limit the damage, but responding after the fact is always costlier than prevention. Each of these cases could likely have been thwarted by better practices (e.g., strong internal verification at MGM, aggressive patching and zero trust at CNA, cloud security posture management at Capita).

For every publicly known breach, there are hundreds of quieter incidents stemming from human mistakes - from the hospital fined because a nurse’s email typo exposed patient data, to the small business crippled by ransomware after an employee opened “invoice.pdf.exe”. The lesson is clear: we must address the human factors to prevent these stories from repeating.

How to Minimize Human Error

Given that people are both the biggest risk and the first line of defense, how can businesses and individuals significantly reduce human-error breaches? The solution is a layered approach combining training, culture, and technology. Here are key strategies:

For Businesses: Building a Human-Centric Security Program

Organizations should institute policies and tools that mitigate mistakes and teach employees to act securely. Some effective measures include:

- Security Awareness Training & Phishing Simulations: Regular, engaging training helps employees recognize threats and practice safe behavior. Move beyond annual check-the-box sessions - run frequent, bite-sized training sessions and simulated phishing tests. This builds a security mindset over time. Companies that implement ongoing phishing simulations see dramatic improvements (one study found 76% fewer clicks on real phishing emails after such training). For example, a mid-sized bank ran monthly gamified phishing drills and reduced click-through rates from 18% of staff to just 4% within a year. The key is to reinforce lessons continuously so that caution becomes second nature. Also, include training on handling data (e.g. proper document sharing) and incident reporting - employees should know how to react if they make a mistake (report it, don’t hide it). Modern “human risk management” programs even tailor training to the riskiest users, an approach that can pay off because, according to Mimecast, just 8% of employees cause 80% of incidents.

- Adopt a Zero Trust Architecture: Zero Trust is a security model that assumes no user or device is inherently trustworthy and enforces least privilege access everywhere. In practice, this means segmenting networks and cloud resources so that a single compromised account can’t access everything, and continuously verifying user identity and context for access requests. By implementing Zero Trust principles, businesses can contain the blast radius of human mistakes. For instance, if phishing steals an employee’s password, multifactor authentication and device checks can still block the attacker from logging in. Or if an employee’s account is abused, micro-segmentation limits what that account can reach. Many breaches (like the MGM case) spread widely because once the hacker got in, the network was flat. Zero Trust would challenge that lateral movement. Embracing Zero Trust is a cultural shift as well - “trust but verify” becomes “verify every time.” Companies like Google pioneered this with their BeyondCorp framework, which helped them mitigate phishing and device compromise internally. While Zero Trust can be complex to implement, even incremental steps (network segmentation, strict identity verification) dramatically reduce the harm from any one error.

- Patch and Update Automation: To tackle unpatched systems, organizations should take the human element out of the equation as much as possible. Automate software updates and patch management using tools that can deploy patches enterprise-wide rapidly, and use policies that force reboot/installation by certain deadlines. Where possible, enable auto-update features for applications and use modern endpoint management that monitors patch compliance. Additionally, keep an inventory of all software and firmware so nothing falls through the cracks. Many IT teams delay patches due to fear of disrupting operations - address this by scheduling regular maintenance windows and testing updates in small pilot groups before wide release. Some companies have turned to cloud-based virtual desktops or mobile device management which ensure all user environments are up-to-date centrally. The difference can be night and day: Organizations using automated, AI-assisted patching fixed vulnerabilities 3-4 times faster in studies, greatly shrinking the window of exposure. IBM’s data shows that using AI and automation for security cut the average breach containment time by 108 days, saving $1.76M per breach on average. Those time savings often come from quickly plugging known holes. In short, make it hard for human forgetfulness or procrastination to leave systems outdated. A strong patch management process, supplemented by vulnerability scanning to catch any missed updates, will eliminate the majority of “easy target” flaws that attackers prey on.

- Enforce Multi-Factor Authentication (MFA) and Strong Access Controls: MFA adds an extra login step (such as a one-time code, mobile app approval, or hardware token) on top of passwords. It’s one of the most effective tools to prevent breaches resulting from phishing or stolen passwords. As noted earlier, Microsoft observed that basic MFA (like SMS codes) can block 99% of automated account takeover attempts. Every business should require MFA for all remote access, email accounts, and especially administrator accounts. This ensures that even if an employee’s password is compromised, the attacker likely cannot get in without the second factor. It’s critical to choose MFA methods wisely - app or key-based MFA is stronger than SMS, and phishing-resistant authenticators (like FIDO2 security keys or platform biometrics) are best for high-risk users. Google’s success is instructive: after giving every employee a physical security key, they have had zero successful phishing-induced account breaches. In addition to MFA, implement least privilege access controls: employees should have access only to the data and systems necessary for their role. Regularly review and revoke unnecessary privileges. This way, if one account is compromised or misused, the potential damage is limited. Privileged access management (PAM) solutions can help manage and monitor admin accounts. The principle is simple - by hardening authentication and narrowing access rights, you greatly reduce the chances that a single human error opens the floodgates.

- Foster a Security-Conscious Culture: Technology alone isn’t enough; the organization’s culture must encourage vigilance and accountability. Leadership should communicate that cybersecurity is a shared responsibility of every employee, not just the IT department. Encourage employees to speak up if they spot suspicious activity or even if they make a mistake - a no-blame approach to reporting incidents helps issues be fixed faster. Many companies now conduct fun security awareness weeks, internal phishing contests, and reward employees who detect phishing tests or improve their security behaviors. Cultivating this engagement turns employees from liabilities into assets (“human firewalls”). It’s also wise to integrate security into onboarding and ongoing HR processes - make it clear from day one that security is part of job performance. Some firms include security objectives in performance reviews or offer incentives for completing extra training. Creating a positive security culture reduces negligence born of apathy. When workers understand why certain rules exist (e.g. the cost of breaches, the importance of protecting client data) and feel personally invested, they are far less likely to take risky shortcuts. Lastly, ensure executives and managers lead by example - if leadership uses password managers, adheres to policies, and takes training seriously, the rest of the staff will follow suit.
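
The Zero Trust and least-privilege measures in the list above can be pictured as a check that runs on every access request. The following is a minimal sketch under stated assumptions, not any vendor's policy engine: the `AccessRequest` fields, the `ROLE_PERMISSIONS` table and the `decide` function are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass

# Hypothetical least-privilege table: each role lists only the resources it needs.
ROLE_PERMISSIONS = {
    "support_agent": {"ticketing"},
    "finance_analyst": {"ticketing", "invoices"},
    "it_admin": {"ticketing", "admin_console"},
}


@dataclass(frozen=True)
class AccessRequest:
    user_role: str
    resource: str
    mfa_passed: bool        # second factor verified for this session
    device_compliant: bool  # e.g. a managed, patched device


def decide(request: AccessRequest) -> str:
    """Return 'allow' or a 'deny: <reason>' string for a single request."""
    # Verify every time: a correct password alone is never enough.
    if not request.mfa_passed:
        return "deny: MFA required"
    if not request.device_compliant:
        return "deny: device not compliant"
    # Least privilege: unknown roles get an empty permission set.
    allowed = ROLE_PERMISSIONS.get(request.user_role, set())
    if request.resource not in allowed:
        return "deny: resource outside role"
    return "allow"


if __name__ == "__main__":
    # A phished password without the second factor is rejected.
    print(decide(AccessRequest("it_admin", "admin_console", False, True)))
    # A support account cannot reach finance data, which limits the blast radius.
    print(decide(AccessRequest("support_agent", "invoices", True, True)))
    print(decide(AccessRequest("finance_analyst", "invoices", True, True)))
```

The point of the design is that each check is independent: a stolen password fails the MFA check, and even a fully authenticated account only reaches the resources its role lists.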

Implementing the above measures can dramatically decrease the probability and impact of human errors. For instance, one tech company, after rolling out mandatory MFA, continuous phishing simulations, and weekly patch cycles, saw its measured “phish risk click rate” drop to under 2% and has avoided any major incidents in recent years. Another organization adopted a strict “verify before trust” policy at their IT help desk after seeing the MGM fiasco - now, even if someone calls claiming to be the CEO, the service desk must call back on a known number and get managerial approval before password resets. These kinds of procedural tweaks, born of a security-aware culture, can stop attackers in their tracks.
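
The help desk rule described above (call back on a known number and get managerial approval before any password reset) can be written down as an explicit gate. This is only an illustrative sketch; `ResetRequest`, `missing_checks` and `may_reset_password` are hypothetical names, and a real service desk tool would record these steps in its own workflow.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ResetRequest:
    claimed_identity: str
    callback_confirmed: bool  # agent called back on the number already on file
    manager_approved: bool    # a manager signed off on the reset


def missing_checks(request: ResetRequest) -> List[str]:
    """List the verification steps that still block this password reset."""
    missing = []
    if not request.callback_confirmed:
        missing.append("call back on known number")
    if not request.manager_approved:
        missing.append("managerial approval")
    return missing


def may_reset_password(request: ResetRequest) -> bool:
    # The seniority of the claimed identity is deliberately ignored.
    return not missing_checks(request)


if __name__ == "__main__":
    caller = ResetRequest("CEO", callback_confirmed=False, manager_approved=False)
    print(may_reset_password(caller), missing_checks(caller))
```

Because the function never looks at who the caller claims to be, an urgent call "from the CEO" is handled exactly like any other request.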

For Individuals: Staying Safe Online (and at Work)

Whether you’re a non-technical business leader, a startup founder, or an employee handling daily digital tasks, there are personal steps you can take to prevent being the cause of a breach. Here are some key tips for individuals:

- Think Before You Click (Safe Browsing & Email Habits): Always exercise caution when receiving unsolicited emails, messages or calls. If something feels 'off', unusually urgent or too good to be true, it could be a phishing attempt. Check sender addresses carefully and avoid clicking on links or downloading attachments from unknown sources. Verify any requests involving money or sensitive information through a second channel. For example, if you receive an email from 'IT' asking you to reset your password, call the IT department on a number you already know to confirm it. Get into the habit of hovering over links to see where they actually go. When browsing, be wary of pop-ups or download prompts - many 'Your computer is infected!' alerts are fake malware lures. By slowing down and verifying information, you can outsmart the scammers. Remember that reputable institutions (banks, Microsoft, etc.) won’t ask for your password via email, and no prince will really send you $5 million. Healthy skepticism is your best defense.

- Use Strong, Unique Passwords (or a Password Manager) and Enable MFA: Weak or reused passwords are a direct line to trouble. Ensure your passwords are long (12+ characters), complex, and unique for each account. Since it’s impractical to memorize dozens of strong passwords, use a password manager application - it will generate and store complex passwords for you, so you only remember one master password. This vastly reduces the chance of credential reuse across services. Additionally, enable multi-factor authentication on any account that offers it (email, social media, banking, work accounts). This means that even if someone steals your password, they’d need your phone or physical token to get in. It’s an extra 5 seconds when you log in, but it makes your accounts far safer: as noted above, Microsoft observed that even basic MFA can block 99% of automated account takeover attempts. Also, consider using modern authentication options like biometrics or security keys for critical accounts - they are more phishing-resistant than SMS codes. Overall, unique passwords + MFA is one of the most effective combos to protect your personal and work accounts.

- Keep Your Software and Devices Updated: Regularly install updates on your computers, smartphones, and apps. Many updates include critical security patches. It’s wise to turn on automatic updates for your operating system and web browser at a minimum. The sooner you patch, the smaller the window for hackers to exploit known vulnerabilities. This applies to everything - not just your laptop and phone, but also IoT devices, routers, and any other connected tech you use. If your workplace issues you equipment, cooperate with IT’s patch schedule and reboot when asked. By using updated antivirus/anti-malware tools and ensuring your systems are current, you greatly reduce the chances that you’ll fall victim to drive-by downloads or known exploits. In short, don’t procrastinate on updates - those pop-ups might be annoying, but a malware infection or ransomware is far worse.

- Be Careful with Sensitive Data - Verify Recipients and Use Encryption: Many data leaks happen because someone sent information to the wrong place. Double-check email recipients, especially when sending sensitive or confidential data. If you’re sending a file with customer info or financial data, confirm that the person on the other end is authorized to receive it. Avoid using personal email or consumer file-sharing apps for work data - your company likely provides secure methods. If you must transfer sensitive files, consider encrypting them (e.g., using password-protected ZIP files or secure file transfer services). Also, be mindful of what you share on collaboration tools or social media. For instance, don’t share screenshots that inadvertently contain private info, and don’t overshare details about work projects publicly (attackers scour LinkedIn and Twitter for info to target you or your company). When in doubt, ask “Could this data cause harm if someone else saw it?” If yes, handle with care - use secure channels and limit who you send it to.

- Back Up Important Data Regularly: Despite precautions, mistakes (or malware) sometimes happen. Having secure backups of your important files means that even if data is accidentally deleted, corrupted by a virus or held hostage by ransomware, you can restore it. If you are an individual or a small business, use an external hard drive or a reputable cloud backup service to automatically back up your documents, photos and other important data. Follow the 3-2-1 rule if possible: three copies of your data on two different media, with one offsite (the cloud counts as offsite). In a corporate setting, save work to servers or cloud drives approved by the IT team, rather than keeping sole copies on your local machine. Backups won’t prevent a breach, but they will significantly improve your ability to recover from one without paying ransoms or losing valuable information. For example, if an employee accidentally triggers ransomware that encrypts files, the company can restore them from backups with minimal impact (as many did during the WannaCry outbreak). Similarly, regular people have avoided disaster by having an extra copy of their thesis or tax archive when their laptop died. It’s part of being resilient to both mistakes and attacks.
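
The 3-2-1 rule from the last tip is easy to check mechanically. The sketch below is illustrative only: `BackupCopy` and `meets_3_2_1` are hypothetical names, and it counts the original file as one of the three copies.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackupCopy:
    label: str
    medium: str    # e.g. "internal_disk", "external_disk", "cloud"
    offsite: bool  # stored away from the primary location


def meets_3_2_1(copies: List[BackupCopy]) -> bool:
    """True with at least 3 copies, on at least 2 media, at least 1 offsite."""
    media = {copy.medium for copy in copies}
    has_offsite = any(copy.offsite for copy in copies)
    return len(copies) >= 3 and len(media) >= 2 and has_offsite


if __name__ == "__main__":
    copies = [
        BackupCopy("laptop", "internal_disk", offsite=False),
        BackupCopy("usb drive", "external_disk", offsite=False),
        BackupCopy("cloud backup", "cloud", offsite=True),
    ]
    print(meets_3_2_1(copies))      # all three conditions hold
    print(meets_3_2_1(copies[:2]))  # only two copies, none offsite
```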

By adopting these personal best practices, individuals can contribute to overall cybersecurity and greatly reduce the likelihood of their own mistake causing a breach. It’s empowering to know that you don’t need to be a security expert to make a significant impact; simple habits such as hovering over links or using a password manager can stop many common attacks. If you suspect that you have fallen for a scam, report it immediately to your IT team or the relevant service - quick reporting can contain incidents before they spread. Many incidents grew into major breaches because someone was too afraid or embarrassed to speak up early. Organisations are increasingly encouraging a 'report even if it might be nothing' philosophy, which is a healthy approach. Remember that cybercriminals are constantly refining their tricks, so staying informed and alert will serve you well (read those security awareness emails!).
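
The habit of hovering over a link boils down to comparing the address a message displays with the address it actually points to. Here is a minimal sketch of that comparison using only Python's standard library; the helper names and the `.example` / `.test` domains are made up for illustration, and a real mail filter would do considerably more.

```python
from urllib.parse import urlparse


def hostname_of(text: str) -> str:
    """Extract a lowercase hostname from a URL or bare domain ('' if none)."""
    candidate = text.strip()
    if "://" not in candidate:
        candidate = "http://" + candidate
    host = (urlparse(candidate).hostname or "").lower()
    # Treat "www.site" and "site" as the same place.
    return host[4:] if host.startswith("www.") else host


def looks_like_address(text: str) -> bool:
    """Rough test: a web address contains a dot and no spaces."""
    stripped = text.strip()
    return "." in stripped and " " not in stripped


def is_suspicious_link(display_text: str, href: str) -> bool:
    """Flag links whose visible address differs from the real destination."""
    if not looks_like_address(display_text):
        return False  # plain labels such as "Click here" cannot be compared
    return hostname_of(display_text) != hostname_of(href)


if __name__ == "__main__":
    print(is_suspicious_link("www.mybank.example", "https://mybank.example/login"))
    print(is_suspicious_link("mybank.example", "http://mybank.example.evil.test/login"))
```

The second call is flagged because the real destination is a host under `evil.test` that merely starts with the bank's name, which is exactly the trick a quick glance tends to miss.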

Looking Ahead: AI & Automation in Cybersecurity

As we move further into 2025 and beyond, both defenders and attackers are increasing their use of artificial intelligence (AI) and automation, which will have a significant impact on the role of human error. Here's what to expect:

AI-Powered Defense: Security teams are increasingly using AI and machine learning tools to detect anomalies that may indicate human error or malicious activity. For instance, AI-driven user behaviour analytics can identify unusual activity on an employee’s account (such as downloading large amounts of data at 2 a.m. or logging in from a different country) and automatically flag or block it. These tools can detect the early signs of an insider breach or hacked account, potentially stopping an incident before it escalates fully. AI is also enhancing threat detection in networks and emails - machine learning models analyse billions of events to identify patterns of phishing emails or malware, and can adapt to new variants in real time. In a recent Mimecast survey, 95% of organisations said they are using AI to defend against cyberattacks and insider threats. Furthermore, companies are automating routine security tasks. For example, if a critical patch is released, automated systems can swiftly test and apply it across the enterprise (reducing the problem of delayed patching), and if an employee clicks on a potentially malicious link, automated isolation can immediately remove that system from the network. Essentially, the aim of automation is to eliminate the delay and inconsistency of human response. IBM found that companies using security AI and automation had a much shorter breach lifecycle: they identified and contained breaches 108 days faster on average, resulting in $1.76 million less cost per breach. This reduction comes from a speed and consistency that manual efforts cannot match.
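
To make the behaviour-analytics idea concrete, here is a deliberately simple, rule-based stand-in. Real products learn baselines statistically; this sketch hard-codes them, and every name in it (`Event`, `Baseline`, `flag_reasons`, the 10x download multiplier) is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Event:
    user: str
    hour: int                   # local hour of day, 0-23
    country: str
    megabytes_downloaded: float


@dataclass(frozen=True)
class Baseline:
    usual_countries: FrozenSet[str]
    work_hours: range           # e.g. range(7, 20) for 07:00-19:59
    typical_max_mb: float


def flag_reasons(event: Event, baseline: Baseline) -> List[str]:
    """Return the reasons (possibly none) why an event looks unusual."""
    reasons = []
    if event.country not in baseline.usual_countries:
        reasons.append("login from unusual country")
    if event.hour not in baseline.work_hours:
        reasons.append("activity outside usual hours")
    # Assumed threshold: ten times the user's typical maximum download.
    if event.megabytes_downloaded > 10 * baseline.typical_max_mb:
        reasons.append("unusually large download")
    return reasons


if __name__ == "__main__":
    baseline = Baseline(frozenset({"DE"}), range(7, 20), typical_max_mb=200.0)
    night_download = Event("alice", hour=2, country="DE", megabytes_downloaded=5000.0)
    print(flag_reasons(night_download, baseline))
```

An empty list means nothing stood out; any non-empty list could feed an alert or an automatic block, depending on how aggressive the organisation wants to be.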

AI-Augmented Training and User Support: We’ll also see AI helping users to avoid errors directly. For example, imagine an intelligent email client that displays a warning banner if it detects suspicious content in an email, such as “This looks similar to known phishing content” or “The sender’s domain is slightly off - be cautious”. Or an AI assistant that appears when you’re about to share a file containing sensitive data and asks, 'Are you sure you want to share this outside the company?' Some advanced DLP solutions are moving towards this kind of smart, context-aware prompting - like a real-time coach riding alongside employees. Additionally, AI could personalise security training by analysing which users might be more susceptible to certain attacks and tailoring education to them. For instance, if Bob frequently almost clicks on phishing links, he could receive extra micro-training on phishing. We might also see AI chatbots helping employees to make secure decisions. For example, if an employee asks, 'Is it safe to use this app for client data?', an AI chatbot trained on company policy could provide a prompt response. Over time, such supportive AI could reduce unintentional violations and reinforce good practices as part of the daily workflow.
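
A warning such as “The sender’s domain is slightly off” does not necessarily need AI; a basic version can be approximated with string similarity. The sketch below uses `difflib.SequenceMatcher` from Python's standard library. The function name, the 0.85 threshold and the example domains are assumptions chosen for illustration, not a tuned detector.

```python
from difflib import SequenceMatcher
from typing import Iterable, Optional


def lookalike_of(sender_domain: str,
                 trusted_domains: Iterable[str],
                 threshold: float = 0.85) -> Optional[str]:
    """Return the trusted domain that the sender imitates, or None.

    An exact match is trusted; a near match is treated as a lookalike.
    """
    sender = sender_domain.lower()
    trusted = {domain.lower() for domain in trusted_domains}
    if sender in trusted:
        return None
    for domain in sorted(trusted):
        similarity = SequenceMatcher(None, sender, domain).ratio()
        if similarity >= threshold:
            return domain
    return None


if __name__ == "__main__":
    trusted = {"examplebank.test"}
    # The digit "1" stands in for the letter "l".
    print(lookalike_of("examp1ebank.test", trusted))
    print(lookalike_of("examplebank.test", trusted))
```

A mail client could show the cautionary banner whenever this function returns a domain, naming the organisation the sender appears to be imitating.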

The Flip Side - AI-Empowered Attackers: Of course, it’s not only the good guys who are leveraging AI. Cybercriminals are already using generative AI to craft more convincing phishing emails and generate malware code. They can even create fake personas and voices at scale. A 2024 survey found that 85% of cybersecurity professionals believe that the increase in cyberattacks is partly due to attackers using generative AI. We have seen AI-written phishing texts that are grammatically flawless and tailored to the recipient's context, making them harder to distinguish as scams. Deepfake technology has enabled fake CEO voices that have duped employees into transferring funds. Going forward, attacks may feature AI capable of having live chat conversations with targets that sound human in order to socially engineer them, or malicious code that can adapt intelligently to avoid detection. Another concern is that AI could be used to identify new vulnerabilities or misconfigurations more quickly than humans can, essentially automating the hacker's reconnaissance. The World Economic Forum’s 2024 report revealed that almost half of organisations rank malicious AI as a top concern, fearing more advanced and widespread attacks facilitated by AI. While AI can help reduce human error, it also raises the stakes by creating more frequent and deceptive attacks that test our defences.

Human Vigilance and Judgment Remain Vital: With all the talk of automation, one might ask whether AI will solve the problem of human error entirely. The reality is that human vigilance will remain the final line of defence. While AI can provide significant assistance by automatically identifying many errors, it is not infallible. It may miss a new type of phishing scam or produce false alarms that only a human can interpret correctly. There is also the issue of trust: if users become overly reliant on AI, for example thinking that an email is safe just because it wasn't flagged by the system, that could introduce a new kind of risk. A better approach is AI-human collaboration: AI handles the heavy lifting and routine protections, while humans focus on critical thinking, oversight and handling ambiguous cases. Imagine a modern aeroplane: it has an autopilot, but the pilot is still there to monitor the situation and take control when necessary. Similarly, employees and security staff will need to understand AI tools and respond appropriately when those tools delegate decisions to them. Cyber awareness training will probably expand to include 'how to work with AI' - for example, learning that an AI email filter letting a message through does not guarantee that it is safe.

Future Outlook: Expect to see more security automation in compliance and incident response in 2025 and beyond. Intelligent software will increasingly handle mundane tasks such as access reviews, log analysis and incident triage. This will reduce the likelihood of human error (such as missing a critical alert) and free up staff to focus on higher-level strategy. Regulations may also require certain types of automation. For example, governments could mandate the prompt patching of critical vulnerabilities, effectively pushing companies to adopt auto-patching. In terms of user authentication, we may see passwordless methods (such as biometrics or cryptographic keys) become mainstream, which would eliminate the issue of weak passwords. Microsoft, Apple and Google are all promoting passkeys as a user-friendly, phishing-resistant method in 2025. If widely adopted, this alone could eliminate a significant proportion of credential-related breaches caused by human error.

In summary, the future will bring more AI-driven defences that can significantly reduce the risk of human error, from anomaly detection to real-time user coaching. However, attackers will also use AI to improve their tactics, so the battle will continue. Organisations should invest in these advanced tools while also investing in their people, training them to work with new technology and maintaining a culture of healthy scepticism and alertness. It is hoped that many of the errors that plague us today, such as phishing clicks and misconfigurations, will be caught by AI safety nets in the near future. Nevertheless, as new technologies and threats emerge, the human element - creativity, intuition and, yes, error - will remain part of the equation. The winning formula for cybersecurity in the AI age is likely to be keeping humans in the loop and empowering them with smart automation.

Conclusion

Cybersecurity breaches in 2025 may involve advanced malware or cunning adversaries, but ultimately, they are mostly people problems. The data is overwhelming: approximately 95% of breaches can be traced back to human error, whether that's an employee falling for a scam, an administrator misconfiguring a server, or someone using a weak password. This doesn’t mean technology is futile; it means that we must prioritise human factors in our approach to security. Even the smartest firewall cannot stop an authorised user from inadvertently leaving the front door open. Therefore, organisations must balance technical defences with robust training, user-friendly security tools and a culture that values cybersecurity. Likewise, individuals should recognise their role in keeping data safe and practise good digital hygiene in both their professional and personal lives.

The encouraging news is that human-driven breaches are largely preventable. By addressing common causes such as phishing, weak passwords, unpatched systems, misconfigurations and mishandling of data, we can significantly reduce incident rates. Many companies have already achieved success by implementing multi-factor authentication, running regular phishing drills and automating their updates, and have seen far fewer day-to-day security issues as a result. Cybersecurity is not a one-time project but an ongoing commitment to reducing risk. Mistakes will still happen, but if we learn from them and implement mitigation strategies, they need not lead to a full-blown breach.

Ultimately, everyone has a stake in this, from the CEO and IT admin to the newest intern and the home user. Cybersecurity is a shared responsibility. Just as public health relies on individuals washing their hands or getting vaccinated to protect the community, cybersecurity relies on each person playing their part (such as using strong passwords and being alert to scams) to keep the whole organisation safe. Executives should champion this ethos, managers should reinforce it, and employees should embrace it. When technology, processes and people work together, the result is a far more resilient defence.

The goal is to turn the '95% caused by human error' statistic on its head, so that human vigilance and preparedness become the reason that 95% of attacks fail. With the right investments in security awareness and smarter infrastructure - and perhaps a little help from AI - we can achieve this. Breaches may never be eliminated entirely, but your organisation does not have to become the next headline.

How Intersog Can Help: At Intersog, we understand that building secure software and a security-first culture is critical to reducing human error risk. We help businesses worldwide develop applications with security built-in from day one, following secure coding practices to minimize vulnerabilities (so your team doesn’t have to worry about avoidable flaws). Whether you need to implement a Zero Trust architecture, improve your cloud security posture, or check your system via penetration testing, Intersog offers the cybersecurity consulting and development support to strengthen your defenses. With a blend of technical expertise and user education, we’ll partner with you to significantly cut down the likelihood of breaches - and protect your company’s valuable data and reputation.

In a world where human error is the biggest threat, Intersog helps you empower your people and technology to be the best defense. For more information on our secure development services and training offerings, feel free to reach out - together, we can build a safer future for your business.
