In Part 1 of this guide, we examined strategies for incorporating domain knowledge into AI systems, comparing RAG, fine-tuning, and hybrid approaches. In Part 2, we focus on the model layer and whether to build on closed-source, open-source, or local (on-premises) models. This choice is vital to the development of AI applications because your foundation model determines everything from data compliance and cost structure to performance ceilings and the speed of iteration. Selecting the right model approach can mean the difference between a scalable enterprise AI solution and an experimental dead end.

Why does this decision matter?

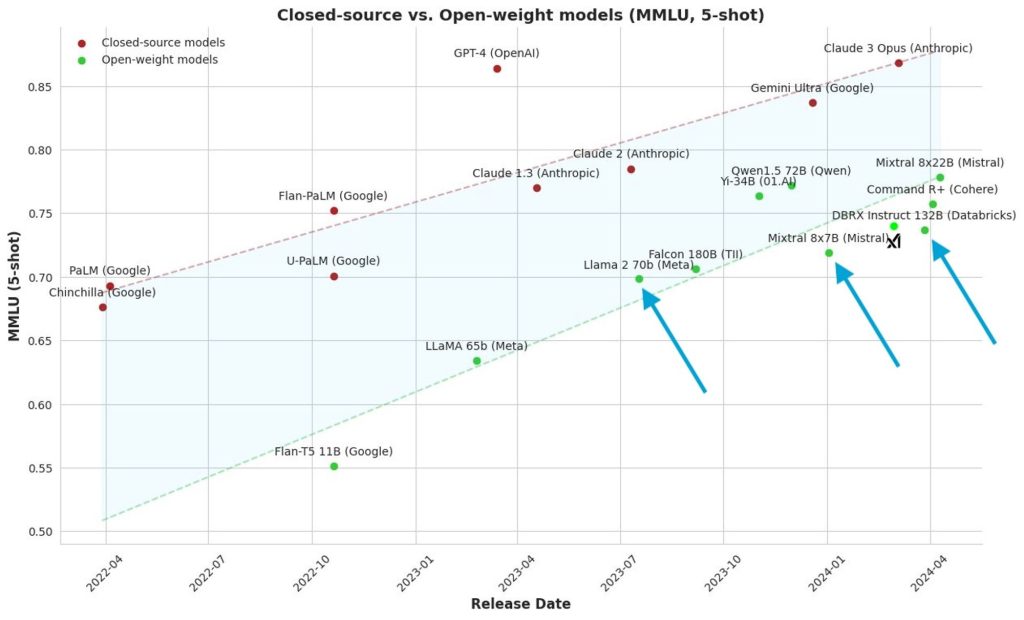

The LLM landscape is evolving at breakneck speed. Proprietary services like OpenAI’s GPT and Anthropic’s Claude remain at the cutting edge, offering best-in-class accuracy and massive 100K+ context windows. However, open-source LLMs, such as Meta’s Llama family, Mistral’s models, and China's DeepSeek series, have quickly narrowed the performance gap with proprietary platforms. In fact, the DeepSeek-V3 open model, released in late 2024, was noted to “outperform every other open-source LLM” and provide strong competition to closed-source models like GPT-4 and Claude 3.5. Meanwhile, organizations are increasingly interested in running models on-premises for data control, even if it means using slightly smaller models. Microsoft’s Phi series, for example, demonstrated that small local models (e.g., Phi-1 to Phi-4) can deliver excellent results on consumer-grade hardware, highlighting the potential of on-premises AI in certain situations.

In this article, we’ll break down closed, open-source, and local models in depth. First, we provide an overview of each model type, explaining what they are and how their architectures differ. Then, we highlight their strengths and weaknesses. Next, we introduce a decision matrix that covers key dimensions such as privacy, cost, performance, customization, and vendor risk to help you evaluate which model best fits your needs. We will discuss use-case fit - when a closed-source API makes sense versus when to opt for an open or local model - with real examples. We will also explore hybrid architectures that combine multiple models for optimal results. Finally, we provide a CTO playbook with step-by-step guidance on making this decision strategically, including whether to build in-house or partner with experts. By the end, you should have a clear framework for choosing the right model layer for your AI stack and enterprise AI solutions.

Model Layer Overview: Closed-Source vs Open-Source vs Local Models

Choosing a foundation model for your AI application essentially comes down to three options:

- Closed-Source Models (Proprietary SaaS LLMs)

- Open-Source Models (Pre-trained LLMs with open weights)

- Local Models (On-Premises or Custom-Deployed LLMs)

Each option represents a different approach to the model layer of your AI stack. Let's define each one and compare their architectures, deployment patterns, and inherent advantages and disadvantages.

Closed-Source Models (Proprietary APIs)

Closed-source models are proprietary AI models that are provided as a service, usually via a cloud API. With these models, you do not have access to the weights or code; you simply send inputs to an endpoint and receive the model’s output. Examples include OpenAI’s GPT-4 family (e.g., GPT-4 Turbo and GPT-4o) and Anthropic’s Claude series (e.g., Claude 3.5 Sonnet). Vendors develop and fine-tune these models using massive compute and data resources, and the models often represent the state of the art in capability:

Performance and Architecture: Closed models tend to be large, highly optimized Transformer architectures with tens or hundreds of billions of parameters (vendors rarely disclose exact sizes), sometimes with proprietary enhancements. These models undergo extensive training on diverse data and rigorous fine-tuning, such as reinforcement learning from human feedback (RLHF), to achieve top-tier performance. For example, GPT-4 has achieved top scores on reasoning and knowledge benchmarks, and Anthropic’s Claude 3.5 excels at lengthy dialogue and coding assistance. Vendors also improve these models continually: OpenAI’s GPT-4 Turbo update (announced in November 2023), for instance, extended the context window to 128K tokens and cut per-token prices compared with the original GPT-4.

Deployment: Using a closed model usually involves calling a cloud API (e.g., via HTTP/JSON). The model runs on the provider’s infrastructure. While this makes integration relatively simple — no ML infrastructure is needed on your end — it also means your data (prompts) are sent off-site to be processed.
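To make the integration pattern concrete, here is a minimal Python sketch that uses only the standard library. It assumes OpenAI’s Chat Completions endpoint and uses `gpt-4o` only as an example model name; other vendors use different URLs, headers, and payload shapes, so check your provider’s API reference before relying on it. The `messages` payload is exactly the data that leaves your environment.

```python
import json
import urllib.request

# Assumed endpoint (OpenAI Chat Completions); other providers differ.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str,
                       model: str = "gpt-4o") -> urllib.request.Request:
    """Build, but do not send, a JSON POST request to a hosted LLM API."""
    payload = {
        "model": model,
        # This prompt text is what gets transmitted to the provider's servers.
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the text of the first completion."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

In practice you would call `send_chat_request(build_chat_request(prompt, api_key))` with the key loaded from a secrets store. Separating request construction from sending makes the outgoing payload easy to log, inspect, or test without a network call.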

Strengths: The main attraction is the cutting-edge capability that comes standard. These models are often the most accurate for general tasks thanks to their training on enormous datasets and expert tuning. They may offer features that are not yet available in open models, such as multimodal input with GPT-4 Vision or 100k+ token context with Claude. Closed models also come with fully managed infrastructure, so you won't need to worry about provisioning GPUs or optimizing inference; the vendor will handle scaling and uptime. Proprietary APIs are very convenient for teams that want quick results with minimal ML operations. Additionally, vendors may provide enterprise support, SLAs, and security certifications, which can provide reassurance for production use.

Weaknesses: The flip side is loss of control. You are trusting a third party with your data and relying on their service. This raises data privacy and compliance concerns: even if providers promise not to use your data for training, sensitive information is leaving your controlled environment. Many regulated industries, such as finance, healthcare, and government, have restrictions that can render pure SaaS AI impractical for core data. There is also vendor lock-in and strategic risk: you depend on the provider’s pricing, which may change, and on its uptime.

You also have limited recourse if the API is discontinued or its terms become unfavorable. Customization is limited: generally, you cannot fine-tune the full model yourself (aside from narrower options such as OpenAI’s hosted fine-tuning for selected models like GPT-3.5 Turbo, which still never gives you the weights). You must accept the model’s built-in behaviors and biases to a large extent. Finally, cost can be a major downside at scale. With pay-per-token pricing, costs grow linearly with usage, so for high-volume enterprise applications, API fees can far exceed the cost of running your own model. In short, closed models trade openness and cost control for convenience and performance.
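The linear cost claim is easy to see with a back-of-the-envelope model. The sketch below assumes separate per-1K-token prices for input and output tokens, as many vendors charge; the prices in the usage note are hypothetical placeholders, not any vendor’s actual rates.

```python
def monthly_api_cost(requests_per_day: float,
                     input_tokens_per_request: int,
                     output_tokens_per_request: int,
                     input_price_per_1k: float,
                     output_price_per_1k: float,
                     days: int = 30) -> float:
    """Estimate a monthly bill under per-token API pricing.

    Prices are per 1,000 tokens; input and output tokens are assumed
    to be billed at different rates.
    """
    cost_per_request = (
        input_tokens_per_request / 1000 * input_price_per_1k
        + output_tokens_per_request / 1000 * output_price_per_1k
    )
    # Every request adds the same increment, so the bill scales linearly.
    return requests_per_day * days * cost_per_request
```

With hypothetical prices of $0.01 per 1K input tokens and $0.03 per 1K output tokens, 500 input and 250 output tokens per request cost about $375 per month at 1,000 requests per day and about $37,500 per month at 100,000 requests per day: 100 times the traffic, 100 times the bill.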

Open-Source Models (Foundation Models with Open Weights)

Open-source models are large language models (LLMs) whose weights are publicly available, sometimes under permissive licenses and sometimes with certain use restrictions. Examples include Meta’s Llama 2 and Llama 3 series, Mistral AI’s Mistral 7B and Mixtral models, TII’s Falcon 40B, LMSYS’s Vicuna, and EleutherAI’s GPT-NeoX. Anyone can obtain and run these models on their own hardware or in the cloud. Some key points:

Architecture and Performance: Open LLMs vary greatly in size and capabilities. They range from small models with 7-13 billion parameters that can run on a single GPU to models with 65 billion or more parameters and mixture-of-experts (MoE) models that rival the performance of closed models. The quality gap between open and closed models has narrowed quickly. By 2024, Meta’s Llama 3 models (and the Llama 3.1 update) had become widely used, with training improvements that let an 8B open model outperform older 13B models such as Llama 2 13B. Alibaba’s Qwen1.5-72B (early 2024) and DeepSeek-R1 (January 2025, from the Chinese lab DeepSeek) matched or surpassed GPT-4-class models on some benchmarks. Additionally, Mistral’s Mixtral 8×22B MoE model achieved one of the highest scores for an open model at its release.

In February 2025, a McKinsey report noted that "the performance of more open foundation models is closing the gap to proprietary AI platforms," citing Meta's Llama family, Google's open Gemma models, DeepSeek, and Qwen as examples. In other words, the best open models in 2025 are close to the performance of GPT-4 on many tasks, and they can even outperform it in certain niche domains or benchmarks. However, it’s worth noting that closed models like GPT-4 and Claude are typically more thoroughly fine-tuned and evaluated for safety, reliability, etc. As one review put it, OpenAI’s GPT-4 and Anthropic's Claude 3.5 were the "best LLMs of 2024" because they have been tested across many tasks, even though newer models have surpassed them on certain benchmarks.

Deployment: With open models, you have the freedom to deploy them however you want. You can run the model on your own servers, in a private cloud, or even on edge devices if the model is small enough. Many open LLMs are available through platforms like Hugging Face, and cloud providers like AWS, Azure, and GCP offer them via managed services or marketplaces. For example, Amazon Bedrock hosts Llama 3 and Mistral models as managed endpoints. You can also fine-tune open models with your data and deploy customized versions internally. Essentially, open source provides the model as a component that you can integrate into your stack rather than a black box service.

Strengths: The obvious benefits are control and flexibility. Since you have the model weights, you can extensively customize the model; for example, you can fine-tune it on proprietary data, modify its architecture or prompt handling, or combine it with other models. This is crucial if your use case requires a specific tone or expertise that general models lack. Open models also enable data privacy, as you can keep all data processing in-house (more on “local” deployment in the next section). Another strength is cost efficiency at scale. Open models carry no per-query licensing fee: some are released under permissive licenses such as Apache 2.0, while others, such as Meta’s Llama community license, are free to use but attach conditions on how the model may be used. Your costs are primarily for infrastructure, such as the compute to run the model. If you have high query volumes, running an open model on cloud instances or your own hardware can be far cheaper per query than paying a per-call fee to a SaaS API. Costs also differ among open models: one cost analysis determined that Mistral 7B (an open model with 7 billion parameters) was approximately 65% less expensive to run per 1,000 tokens than the slightly larger Llama 3 8B on the same cloud service. At large scale, these savings compound, especially if you optimize the model. Additionally, open source fosters a community and ecosystem. There are abundant tools for optimizing open models, such as quantization libraries and custom kernels, as well as community-driven fine-tuning datasets and forums where you can get help. You’re not beholden to one vendor’s roadmap: if a model doesn't perform a certain task well, you or the community can potentially improve it.
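For self-hosted models, the equivalent per-token figure comes from hardware cost divided by throughput. The sketch below is a simplified model under stated assumptions: `gpu_hourly_rate` is whatever you pay for a GPU-hour (cloud rental or amortized purchase), `tokens_per_second` is throughput you have measured for your own model and serving stack, and staff, storage, and networking costs are ignored.

```python
def self_hosted_cost_per_1k(gpu_hourly_rate: float,
                            num_gpus: int,
                            tokens_per_second: float,
                            utilization: float = 1.0) -> float:
    """Cost per 1,000 generated tokens for a self-hosted deployment.

    gpu_hourly_rate:   price of one GPU-hour (cloud rental or amortized hardware)
    tokens_per_second: aggregate throughput of the whole deployment at full load
    utilization:       fraction of paid hours spent serving traffic, in (0, 1]
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    hourly_cost = gpu_hourly_rate * num_gpus
    # Idle time is still paid for, so it shrinks the useful token count.
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return hourly_cost / tokens_per_hour * 1000
```

With a hypothetical $2 per GPU-hour and 1,000 tokens per second, the cost is roughly $0.00056 per 1K tokens at full utilization, but about four times that at 25% utilization. Idle GPUs are still billed, which is why the savings depend on high, steady volume and on optimizations that raise throughput.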

Weaknesses: The primary downside is that you’re taking on the operational complexity. Running LLMs in production is not trivial. It requires expertise in ML operations (LLMOps), including serving infrastructure (e.g., GPU/accelerator management and autoscaling), latency optimization (e.g., batching and quantization), monitoring, and updating models. While a closed API "just works" out of the box, an open model requires your team (or your cloud platform) to do significant work to achieve the same level of reliability.
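Quantization, one of the latency and memory optimizations mentioned above, stores weights at lower precision. As a minimal illustration (not a production implementation; real libraries typically use finer-grained per-channel or per-group scales and often go down to 4 bits), symmetric int8 quantization keeps one 8-bit integer per weight plus a single shared float scale, cutting weight storage to about a quarter of fp32:

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ q * scale, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]
```

The rounding error per weight is at most half the scale, which is why quantized models can often retain most of their accuracy while needing far less memory and bandwidth.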

Open models may also lag slightly behind the absolute frontier in raw performance. For instance, when a closed vendor such as OpenAI or Anthropic ships a new flagship model with superior reasoning capabilities, open models may take months or even a year to match that new benchmark. Open releases are also sometimes “under-trained” because of limited budgets: many open models aren’t trained as long or on as much data as their closed counterparts, which can result in suboptimal performance.

Another consideration is that, although the model is free, the computing power isn't. You need sufficient GPU resources to match a closed provider's throughput. If you underestimate serving costs, you could end up spending a lot on cloud GPU time, though you have the option to optimize or scale down.
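A first-pass capacity estimate helps avoid underestimating serving costs. The sketch below assumes each model replica fits on one GPU, that throughput scales linearly with replicas, and that `tokens_per_second_per_gpu` comes from your own load tests; all three are simplifications.

```python
import math


def gpus_needed(peak_requests_per_second: float,
                output_tokens_per_request: int,
                tokens_per_second_per_gpu: float,
                headroom: float = 0.3) -> int:
    """Rough number of GPUs (model replicas) needed to serve peak load.

    Assumes one replica per GPU and linear scaling across replicas; validate
    both assumptions with load tests on your own serving stack.
    """
    if tokens_per_second_per_gpu <= 0:
        raise ValueError("throughput per GPU must be positive")
    # Peak generation demand, padded with headroom for bursts.
    required_tps = peak_requests_per_second * output_tokens_per_request * (1 + headroom)
    return math.ceil(required_tps / tokens_per_second_per_gpu)
```

For example, at a hypothetical peak of 10 requests per second with 300 output tokens each, and 1,500 tokens per second per GPU, the function returns 3 GPUs with the default 30% headroom. The headroom value is an arbitrary illustrative default.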

Finally, you are responsible for supporting and maintaining the model. If the model malfunctions, there’s no vendor to file a support ticket with; you need in-house experts or partners to troubleshoot and retrain as needed. That said, some open-source model creators, like Mistral AI, are now offering enterprise support contracts and custom training services, which is a sign that the open model ecosystem is maturing and becoming more enterprise-friendly.

Local Models (On-Premises or Custom-Deployed)

In this context, “local model” can refer to two related things: (1) a model deployed on infrastructure fully under your control, such as an on-premises data center, private cloud, or on-device; and (2) a custom model your organization trains (or heavily fine-tunes) for a specific purpose. In practice, most local models are open-source or derivatives of open-source models because proprietary providers generally do not allow you to self-host their flagship models. One exception might be if a vendor offers an on-premises appliance or a containerized version of their model for enterprise clients, but such cases were rare as of 2025. Therefore, local models are best viewed as a special case of open-source usage, emphasizing deployment environment and data sovereignty.

What characterizes a “local” approach?

Deployment and Architecture: With a local model setup, the model runs in your controlled environment. This could be on-premises servers, such as a GPU cluster in your data center, or an edge device, such as a factory robot or mobile device with limited internet access. The model itself can be any open LLM, ranging from a 3B-7B parameter model that fits on a single device to a 70B model sharded across on-premises GPUs. Sometimes, organizations develop their own proprietary model from scratch. Examples include BloombergGPT, a 50 billion parameter finance domain model trained by Bloomberg in 2023, and other industry-specific LLMs. These models are "local" in that they are owned and used by the company internally and are not available to the public. However, building a model from scratch requires significant resources and is only feasible for large companies. More commonly, a "local model" involves using an existing open model and fine-tuning or deploying it within your environment.

Strengths: The strongest advantage of local models is complete data control and privacy. No data leaves your trust boundary when inference occurs on local hardware. This is often non-negotiable for certain industries and government applications. For example, a bank handling private financial data or a healthcare provider with sensitive patient information can deploy an LLM behind its firewall to comply with regulations and avoid third-party cloud exposure. Local deployment also gives you full stack control, allowing you to optimize the model and system for your needs. Want to cut latency? Run the model on high-end GPUs next to your application servers (or on an edge device for real-time response). Need offline capabilities? A local on-device model can work without an internet connection. Additionally, running models locally provides a predictable cost structure: you invest in hardware or dedicated instances, so most of your costs are fixed, and the marginal cost of each additional query is small and often lower than API pricing. If you have underutilized hardware, local models can leverage it. Finally, local models mean independence from vendor agendas. You won't be subject to an API's usage policies or content filters (although the model's license may still carry its own acceptable-use terms), which is critical for some applications. Many closed models have strict content moderation policies that may block or alter certain responses. With your own model, however, you can define policies that align with your business needs.
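Keeping data inside the trust boundary is ultimately enforced by network controls (firewalls, VPC egress rules), but an application-level check is a cheap second line of defense. This is a minimal sketch under assumptions: it inspects only the URL and does not resolve DNS, and the internal hostname in the usage note is hypothetical.

```python
import ipaddress
from urllib.parse import urlparse


def inside_trust_boundary(endpoint_url: str,
                          internal_hostnames: frozenset = frozenset()) -> bool:
    """True if the endpoint is an approved internal hostname or a private/loopback IP."""
    host = urlparse(endpoint_url).hostname  # lower-cased; port and brackets stripped
    if host is None:
        return False
    if host in internal_hostnames:
        return True
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # Any hostname not on the allowlist is treated as external.
        return False
    return ip.is_private or ip.is_loopback


def require_internal(endpoint_url: str,
                     internal_hostnames: frozenset = frozenset()) -> None:
    """Refuse to proceed if sending a prompt to this endpoint would cross the boundary."""
    if not inside_trust_boundary(endpoint_url, internal_hostnames):
        raise PermissionError(f"refusing to send data to external endpoint: {endpoint_url}")
```

For example, `require_internal("http://10.0.0.5:8000/v1/chat/completions")` passes, `require_internal("https://api.openai.com/v1/chat/completions")` raises `PermissionError`, and a hostname such as `llm.internal.example` passes only if it is listed in `internal_hostnames`.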

Weaknesses: The challenges of local models are essentially the same as the challenges of open-source models, but they are amplified by infrastructure demands. You must procure and manage suitable hardware. For large models with tens of billions of parameters, this may require expensive GPU machines or specialized AI accelerators on-premises. Not every company has an HPC setup readily available or wants to maintain one, which is why many opt for cloud deployment, even with open models, thus blurring the line between "open" and "local." There is also a limitation in terms of scale: if your usage exceeds your hardware capacity, you are responsible for scaling up, whereas a cloud service might scale up seamlessly. In that sense, local deployments can be less flexible; unless you build a hybrid cloud bursting capability, you're limited by what you have on site.

Another consideration is that keeping up with model improvements is your responsibility. If the state of the art improves (e.g., new architectures or versions, such as the Mistral 3 MoE models in 2025, which significantly improve efficiency), you will need to decide when and how to upgrade your local model. This can lead to technical debt if not managed properly. In terms of performance, local models may require the use of smaller models or distilled versions if your hardware is limited. For instance, running a 70 billion parameter model with acceptable latency might not be feasible on a single server; you might instead use a 13 billion parameter model locally, sacrificing some accuracy in the process. Thus, a quality trade-off may be necessary unless you invest heavily in infrastructure.

Finally, as with all open deployments, you will need an in-house (or contracted) team for support, maintenance, and optimization. The complexity is highest when you fully self-host because you effectively take on the role of "AI service provider" for your organization. This is manageable with the right expertise, and many companies do it, but it’s a significant commitment.
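The 70-billion versus 13-billion parameter trade-off is largely memory arithmetic. This sketch counts weight memory only and adds an assumed 20% allowance for the KV cache and runtime buffers; the real overhead depends on batch size, context length, and the serving framework.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * BYTES_PER_PARAM[precision]


def fits_on_hardware(params_billion: float, precision: str,
                     gpu_memory_gb: float, num_gpus: int = 1,
                     overhead: float = 0.2) -> bool:
    """Check whether weights plus an assumed overhead fit in total GPU memory.

    `overhead` is a rough allowance for the KV cache, activations, and
    runtime buffers; it is an assumption, not a measured value.
    """
    needed = weight_memory_gb(params_billion, precision) * (1 + overhead)
    return needed <= gpu_memory_gb * num_gpus
```

At 16-bit precision a 70B model needs about 140 GB for weights alone, so it does not fit on a single 80 GB GPU, while a 13B model (about 26 GB) fits easily. The same arithmetic shows why quantization is another option: at 4 bits, the 70B model’s weights shrink to about 35 GB, at some cost in accuracy.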

In summary, closed-source, open-source, and local models each have distinct implications for your AI system’s architecture:

- Closed models = proprietary cloud service, easy to use, highest performance, but less control (data leaves your environment, costs per call, no weight access).

- Open-source models = open weights you can host or adapt anywhere, giving flexibility and lower cost at scale, but requiring ML ops work and possibly a slight quality gap to frontier models.

- Local models = self-hosted (often open) models running in your environment for maximum data control and customization, but demanding the most infrastructure and expertise.

Next, we’ll compare these options across key decision dimensions to provide a structured framework for evaluation.

Decision Dimensions and Trade-offs

Several key dimensions must be weighed when deciding on your AI model layer. There is no one-size-fits-all solution - each organization must prioritize what matters most for their use case. For example, is strict data compliance a must-have? Is state-of-the-art accuracy worth any cost? Do you have an ML engineering team ready to manage models, or do you need a plug-and-play solution? Below, we break down the trade-offs between closed, open-source, and local models based on the most important decision factors:

- Data Privacy & Compliance – Where does data reside and how are security/privacy concerns handled?

- Cost Structure & Scalability – Upfront and ongoing costs, and how costs scale with usage.

- Performance & Capabilities – Relative model quality, including accuracy, context length, and special features.

- Customization & Control – Ability to fine-tune or modify the model, and integrate deeply into your product.

- Operational Complexity – The level of infrastructure and expertise needed to deploy and maintain the solution.

- Vendor Dependency & Risk – Degree of vendor lock-in, support availability, and risk of service changes.

Below is a comparison matrix summarizing how Closed-Source, Open-Source, and Local model approaches rank for these dimensions:

| Decision Factor | Closed-Source Model (Proprietary API) | Open-Source Model (Self-Hosted) | Local Model (On-Premises Deployment) |
|---|---|---|---|
| Data Privacy & Security | Low – Data leaves your environment and is processed by the vendor (even if not retained); may not meet strict compliance requirements without special contracts. | High – You can deploy in your own cloud VPC or servers, keeping data in your control. Open weights add transparency about the model itself, though using a third-party cloud still involves some external infrastructure. | Very High – All data stays on-premises or on-device. Meets the most stringent privacy needs (e.g., sensitive or regulated data) since nothing is sent externally. |
| Cost Structure | Usage-based & Potentially Expensive – Pay per 1K tokens or per API call. Low upfront cost, but costs can accumulate significantly at scale. Little ability to optimize beyond what the vendor offers. | Infrastructure-based & Potentially Efficient – No license fees; you pay for cloud instances or hardware. Higher upfront investment (setting up servers or cloud infrastructure), but lower marginal cost per query at high volumes. You can optimize the model (quantization, batching, etc.) to reduce costs. | CapEx Heavy but Controlled – Requires purchasing or renting hardware (GPUs, etc.). High initial cost, but after that, usage is essentially “free” aside from maintenance and power. Good for steady, high-throughput scenarios. Scaling requires more hardware purchases. |
| Performance & Quality | Excellent – Cutting-edge model quality, large context windows, and multimodal capabilities. GPT-4/Claude-level performance (often top of benchmarks). Continuously improved by the vendor (e.g., GPT-4 Turbo, GPT-4o), though the pace and direction of improvements depend on the vendor’s roadmap. | Good to Excellent – The best open models (Llama 3, Mixtral 8×22B MoE, DeepSeek, etc.) now approach or match closed models on many tasks, and some have beaten closed models on specific benchmarks. However, the very highest-end capabilities and the most extensive safety tuning may still lag slightly. Context length is improving (e.g., 128K tokens in Llama 3.1), but closed leaders may still have an edge in extreme cases. | Variable – If you deploy a top-tier open model on-premises (with sufficient hardware), you can achieve performance comparable to the open-source option. In constrained environments (edge devices, limited GPUs), you might use smaller or distilled models, which could mean moderate quality. Performance can therefore range from moderate (small local models focused on quick responses) to very good (if you invest in running large models locally). |
| Customization & Flexibility | Limited – You cannot alter the base model. Tuning is limited to prompt engineering or vendor-provided fine-tuning where available (e.g., OpenAI fine-tuning on selected models). Integration options are whatever the API allows, and you rely on the vendor for new features. | High – Full flexibility to fine-tune on domain data, adjust the model architecture, or combine it with other tools. You can integrate at the model-weight level (e.g., inject retrieval, add adapters). The model can be embedded in your application pipeline however you like, and open code and weights allow auditing for bias or errors. | Highest – Because it runs in your environment, you have end-to-end control. You can even train a model from scratch or modify how it is deployed on custom hardware. Local models can be part of bespoke architectures (custom pre- and post-processing, ensembles with other internal models). This approach enables ultimate customization, but with that freedom comes full responsibility for making it all work. |
| Operational Complexity | Low – Very easy to get started. No ML infrastructure needed; integration via API/SDK. The vendor handles scaling, updates, model monitoring, etc. Ideal if you have a small team or lack MLOps expertise. (Complexity can grow if you need many safeguards around the API calls, but relative to self-hosting it is minimal.) | Moderate – You (or your cloud provider) must handle deploying the model, allocating GPU resources, optimizing for latency, monitoring uptime, etc. Requires DevOps/MLOps work: containerizing models, using inference frameworks, setting up autoscaling. Fine-tuning an open model also requires ML expertise and a training pipeline. Many tools exist, but you need skilled people to use them. | High – Running models on-premises is the most demanding option. You manage physical or virtual hardware, deal with library dependencies, possibly obtain enterprise support for open-source software, ensure security updates, and more. Scaling up means procuring more machines, a longer cycle than in the cloud. This option is best if you have a robust IT/ML engineering team or an integration partner. The trade-off is maximum autonomy at the cost of significant operational overhead. |
| Vendor Risk & Support | Vendor-Dependent – You are tied to the provider’s fate. Changes in pricing, service outages, or policy shifts directly impact you. Top vendors often provide support channels or enterprise contracts, which help with troubleshooting, but you have minimal bargaining power (especially with dominant providers). Lock-in is a concern: switching to another model means reworking the integration and possibly losing quality or features. | Independent (Community-Supported) – No single vendor can pull the plug on your model; you have the weights. The risk of obsolescence is lower since community-driven projects can be forked or extended. That said, you are responsible for maintenance. There may be open-source support forums and possibly commercial support from third parties (e.g., consulting firms, or model creators offering services), but it is not turnkey support like a paid API. Upgrades are under your control, which is both a benefit and an added task. | Independent (Self-Supported) – Similar to open source: you avoid external vendor risks entirely by keeping everything in-house, but you also shoulder all the risks of operating the model. If something breaks, your internal team (or hired consultants) must fix it, and there is no SLA from an external provider. On the other hand, you won’t suddenly lose access to a capability because a company shuts down an API. For long-term stability and avoiding vendor lock-in, local models are the safest bet, provided you have a continuity plan for maintaining the technology. |

This matrix illustrates the traditional trade-offs. Closed solutions excel in performance and ease of use, while open and local solutions excel in control, customization, and cost. Your organization’s priorities will determine which factors carry the most weight.
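One way to turn this matrix into a decision is a weighted score. The 1-5 scores below are an illustrative reading of the table’s qualitative ratings, not measurements, and the example weights are hypothetical; replace both with your own assessments.

```python
from typing import Dict

FACTORS = ("privacy", "cost", "performance", "customization", "operations", "vendor")

# Illustrative 1-5 scores (5 = most favorable), loosely read off the matrix.
# "operations" scores simplicity, so the lowest-complexity option scores highest.
SCORES: Dict[str, Dict[str, int]] = {
    "closed": {"privacy": 2, "cost": 2, "performance": 5,
               "customization": 2, "operations": 5, "vendor": 2},
    "open":   {"privacy": 4, "cost": 4, "performance": 4,
               "customization": 4, "operations": 3, "vendor": 4},
    "local":  {"privacy": 5, "cost": 3, "performance": 3,
               "customization": 5, "operations": 2, "vendor": 5},
}


def weighted_scores(weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average score per option; weights need not sum to 1."""
    unknown = set(weights) - set(FACTORS)
    if unknown:
        raise ValueError(f"unknown factors: {sorted(unknown)}")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {
        option: sum(weights.get(f, 0.0) * scores[f] for f in FACTORS) / total
        for option, scores in SCORES.items()
    }


def best_option(weights: Dict[str, float]) -> str:
    """Return the option with the highest weighted score."""
    scores = weighted_scores(weights)
    return max(scores, key=scores.get)
```

With weights that emphasize privacy and vendor independence (for example, 0.4 on privacy), the local option scores highest; with weights that emphasize performance and operational simplicity, as for a quick MVP, the closed option wins. Much of the value lies in forcing the weights to be written down and debated.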

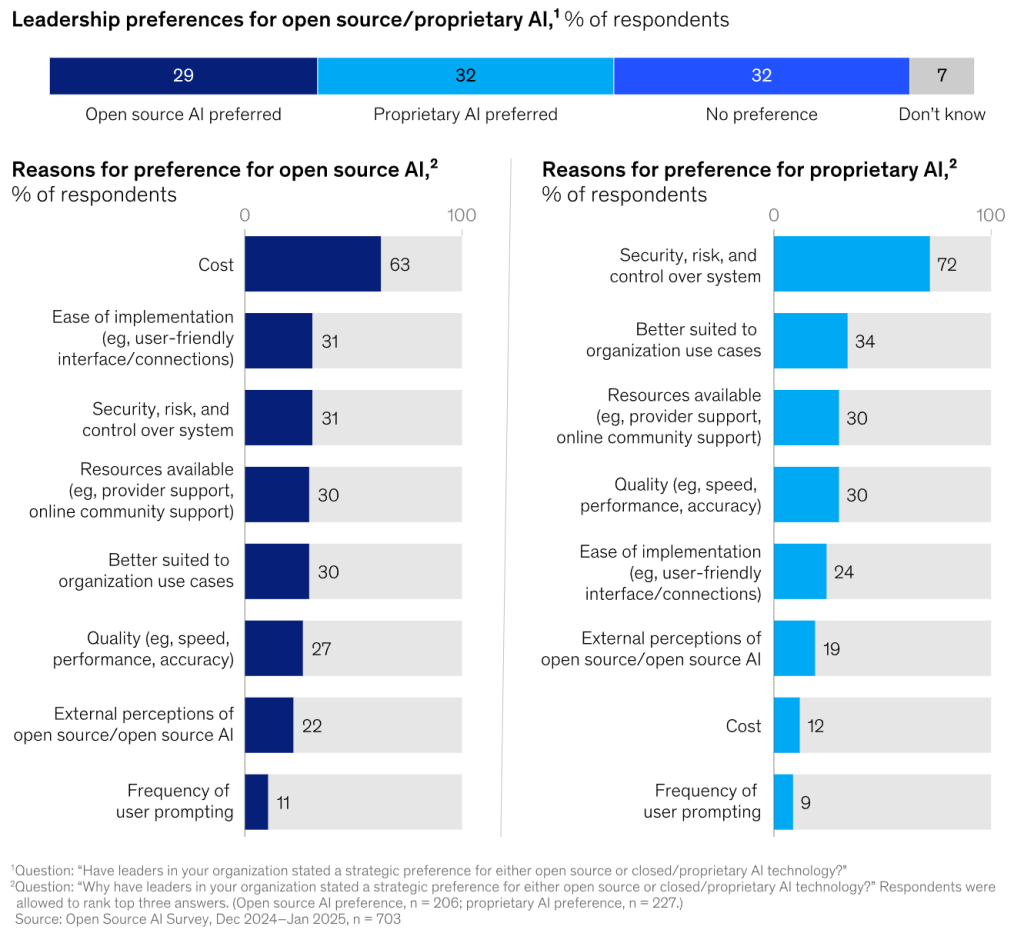

For example, a 2024 McKinsey/Mozilla survey of over 700 tech leaders found that cost savings and customization were major reasons for adopting open-source AI (60% of decision-makers reported lower implementation costs with open-source tools), whereas security and support concerns were the top barriers. Those who favored proprietary tools often cited faster time-to-value and ease of use as reasons. In essence, open/local solutions offer potential cost efficiency and flexibility, while closed solutions provide speed and convenience. Each option comes with its own risk profile: open solutions require more security due diligence, while closed solutions require more vendor trust.

In practice, many enterprises use a mix of these approaches. Now, let's consider how to evaluate the fit for your specific use case and examine scenarios in which each model type is the best choice.

Use Case Fit: When to Use Closed vs Open vs Local Models

Choosing the right model setup depends heavily on the context of your application. Here we outline scenarios where closed-source, open-source, or local models tend to be the best fit, along with concrete examples:

When Closed-Source Models Make Sense

Choose a closed-source LLM (API service) if your top priority is maximizing capability quickly and you’re less constrained by data privacy or long-term cost:

- Rapid Prototyping and MVPs: If you’re building a new AI feature and need to prove its value fast, using a best-in-class API like GPT-4 or Claude can get you results literally in a day. For example, a startup adding an AI chatbot to its app can integrate an API and have a working demo immediately, rather than spending weeks selecting and tuning an open model. Early in development, speed and quality matter more than cost, and you might not even have any sensitive data involved yet.

- Highest Accuracy or Complex Reasoning Needs: Some applications demand the absolute pinnacle of model performance, e.g., a medical diagnostic assistant where accuracy could impact patient outcomes, or a legal AI that must parse very complex contract language. As of 2025, frontier closed models from vendors such as OpenAI and Anthropic still hold a slight edge in complex reasoning and depth of understanding. If that edge makes a difference for your use case, a closed model may be justified. For instance, an R&D team building an AI code assistant might use the latest OpenAI model for state-of-the-art code generation and reasoning about code, an area where the top closed models currently outperform most open models.

- Limited ML Infrastructure/Expertise: If your company does not have machine learning engineers or DevOps skilled in ML deployment, a closed model API spares you the need to hire that expertise initially. A small company or a non-tech enterprise (say a manufacturing firm experimenting with AI for the first time) might opt for a managed API to avoid the overhead of setting up ML ops. The ease-of-use of closed solutions – with clients, dashboards, and monitoring provided – is a big fit here.

- Variable or Low-Volume Usage: If your AI feature will be used relatively infrequently or usage is highly spiky/unpredictable, paying per API call might actually be cost-effective. For example, a small-scale internal tool used by a few analysts can run on GPT-4 at negligible cost; building and hosting an open model would be overkill. Similarly, if you cannot predict user traffic, leveraging a provider’s scalable infrastructure can handle spikes without you pre-provisioning GPUs for worst-case load.

- Content Safety and Moderation: Closed AI providers often incorporate robust moderation filters and have teams improving the model’s safety. If your use case is public-facing and you want to minimize the chance of the AI producing harmful content, a proprietary model might be the safer starting point (though you still need to test and add guardrails). Open models can be fine-tuned for safety too, but that requires effort on your part. With closed models, you benefit from the vendor’s safety training (OpenAI, for instance, heavily fine-tunes to refuse disallowed content).

Example: Consider a global e-commerce company developing a multilingual customer support assistant. It chooses a closed model, such as GPT-4 through the Azure OpenAI Service, because it provides advanced natural language capabilities in many languages. The company does not want to maintain ML systems in-house and negotiates a volume discount with the vendor. Since the data being processed are general customer inquiries rather than highly sensitive personal data, using a cloud API passes its compliance check, which allows it to deploy the feature in weeks. As usage grows, it will reevaluate costs, but initially the closed model meets its needs for quality and rapid deployment.
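When a company like this reevaluates costs, a rough break-even calculation can frame the decision. The sketch below compares a usage-based API bill with the fixed compute cost of an always-on GPU cluster. It assumes a single blended API price per 1K tokens, assumes the cluster has enough capacity for the volume, and ignores staffing and other operating costs, which often dominate at smaller scales.

```python
HOURS_PER_MONTH = 730  # 24 * 365 / 12, rounded


def monthly_cluster_cost(gpu_hourly_rate: float, num_gpus: int) -> float:
    """Fixed monthly compute cost of an always-on GPU cluster."""
    return gpu_hourly_rate * num_gpus * HOURS_PER_MONTH


def break_even_tokens(monthly_fixed_cost: float, api_price_per_1k: float) -> float:
    """Monthly token volume at which API spend equals the fixed cluster cost."""
    return monthly_fixed_cost / api_price_per_1k * 1000


def cheaper_option(monthly_tokens: float, monthly_fixed_cost: float,
                   api_price_per_1k: float) -> str:
    """Compare compute costs only; capacity and staffing are out of scope."""
    api_cost = monthly_tokens / 1000 * api_price_per_1k
    return "self-hosted" if monthly_fixed_cost < api_cost else "api"
```

With a hypothetical two GPUs at $2.50 per hour (about $3,650 per month) and a blended API price of $0.01 per 1K tokens, the break-even point is about 365 million tokens per month; below that volume, the API is cheaper on compute alone.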

When Open-Source Models Make Sense

Choose an open-source model (self-hosted in cloud or custom environment) if control, cost at scale, or customization are top priorities, and you have (or can get) the means to manage the model:

- Data Privacy with Cloud Flexibility: If you handle sensitive data but still want to use cloud infrastructure, open-source models allow you to deploy in a private cloud environment, such as your AWS, Azure, or GCP account. This ensures that your data never goes to a third-party service beyond your control. Many enterprises fall into this category. For example, a financial firm that wants to use AI on internal documents can deploy a Llama 2 or Llama 3 model inside a virtual private cloud (VPC). While the model may be open, it runs in an isolated environment that the company fully controls, often with encryption, audit logging, and other cloud security measures in place. This satisfies internal compliance requirements that would not be met by using an external AI API.

- Cost Efficiency for High Volume: It’s time to consider open models if your application runs at scale (processing millions of queries per day) or if the marginal cost of API calls would exceed your budget. For example, a customer service chatbot handling tens of thousands of conversations daily could generate substantial API costs. With a self-hosted model, you pay for a fixed number of machines, so once that hardware is kept busy, each additional query costs a fraction of what an API call would. Many companies start with a closed API but switch to an open model as they grow. Their tipping point is often when their monthly API costs exceed the cost of leasing a small GPU cluster. Open models also allow finer-grained cost optimization: for example, you can scale the cluster down during off-peak hours or serve simpler queries with a smaller, quantized model on cheaper hardware, a level of control over the serving stack that APIs typically don't offer.

- Domain-Specific Customization: Open models shine when your AI needs to speak a specific domain language or adhere to company-specific knowledge and tone, because you can fine-tune them. For instance, imagine you’re developing an AI to provide medical coding suggestions in a hospital. You have a large dataset of medical records and codes. Fine-tuning an open large language model (LLM) on this data can make it highly specialized, ensuring it uses the correct medical terminology and abbreviations. Such a model would likely outperform a generic one on this task. Closed models generally won't allow such deep customization, and you might be uncomfortable sending proprietary data to the vendor, even if they offer fine-tuning options. Open-source models give you the freedom to embed your knowledge directly into the model. For this reason, many companies building enterprise AI solutions prefer open models: they can effectively create a model that belongs to them and captures their competitive knowledge.

- Feature and Integration Flexibility: Open models are ideal for applications requiring tight integration or extended features beyond standard text completion. For example, if you want to perform retrieval-augmented generation, you can control prompt construction at a low level or even perform model surgery, such as adding a custom retrieval layer. You might also want to combine multiple models (e.g., have a second model verify the first model’s response). With open source, all of this is possible. Likewise, if you need to deploy the model in an environment with limited connectivity or on custom hardware, you can optimize and compile the model for that environment. We’ve seen open models compressed to run on mobile devices or behind corporate firewalls without an internet connection. This level of integration is only possible when you control the model.

- Avoiding Vendor Lock-in (Strategic Control): Some organizations choose open source as a strategic move to avoid becoming overly dependent on Big Tech vendors. They may fear becoming dependent on a provider for essential AI capabilities. By investing in an open-source stack, they can ensure that they have the flexibility to switch clouds or run on-premises as needed. This is particularly relevant for government agencies and companies in jurisdictions concerned about data locality and sovereignty. Open-source LLMs offer self-determination in AI development, aligning with the open-source strategies of many enterprises (similar to choosing Linux over a proprietary operating system). It's about having a solution that is both future-proof and community-supported rather than one that is controlled by a single entity's roadmap.
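The "tipping point" described under Cost Efficiency for High Volume can be estimated with simple arithmetic. The sketch below compares a pay-per-token API bill with a fixed self-hosted cluster. Every price, token count, and overhead figure in it is a hypothetical placeholder, and it assumes the cluster has enough throughput to serve the whole workload.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


@dataclass
class Workload:
    queries_per_day: int
    tokens_per_query: int  # average prompt + completion tokens per query


def monthly_api_cost(w: Workload, usd_per_million_tokens: float, days: int = 30) -> float:
    """Pay-per-use bill: grows linearly with traffic."""
    total_tokens = w.queries_per_day * w.tokens_per_query * days
    return total_tokens / 1_000_000 * usd_per_million_tokens


def monthly_self_hosted_cost(gpu_count: int, usd_per_gpu_hour: float,
                             ops_overhead_usd: float) -> float:
    """Fixed bill: GPUs leased around the clock plus a flat allowance for engineering/ops time."""
    return gpu_count * usd_per_gpu_hour * HOURS_PER_MONTH + ops_overhead_usd


def break_even_queries_per_day(tokens_per_query: int, usd_per_million_tokens: float,
                               fixed_monthly_usd: float, days: int = 30) -> float:
    """Daily volume at which the API bill equals the fixed self-hosting bill."""
    usd_per_query = tokens_per_query / 1_000_000 * usd_per_million_tokens
    return fixed_monthly_usd / (usd_per_query * days)


if __name__ == "__main__":
    # All figures below are hypothetical placeholders.
    fixed = monthly_self_hosted_cost(gpu_count=4, usd_per_gpu_hour=2.50, ops_overhead_usd=5_000)
    workload = Workload(queries_per_day=50_000, tokens_per_query=1_500)
    api = monthly_api_cost(workload, usd_per_million_tokens=10.0)
    threshold = break_even_queries_per_day(1_500, 10.0, fixed)
    print(f"API: ${api:,.0f}/month, self-hosted: ${fixed:,.0f}/month")
    print(f"Break-even at about {threshold:,.0f} queries/day")
```

With these placeholder numbers (1,500 tokens per query at $10 per million tokens, versus four GPUs at $2.50/hour plus $5,000/month for operations), the break-even point is roughly 27,000 queries per day. Plug in your own vendor pricing and measured token counts before drawing conclusions.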

Example: A multilingual media company needs AI technology that can summarize and translate news articles in real time across ten languages. Initially, they tried a closed API, but they found that costs were skyrocketing, and some languages weren’t well supported. They switched to an open-source model, Meta’s Llama 3, which was instruction-tuned on multilingual data and hosted in their Azure cloud. They further fine-tuned it on their past news articles to improve domain accuracy. The result is a custom model that reliably summarizes news in all the required languages in the tone their editors prefer. It runs on a set of GPU virtual machines (VMs) that cost a fixed amount of ~$X per month. This is 50% cheaper than the API approach for the same volume. Furthermore, they integrated the model with their internal CMS so that it could automatically pull context from their archive when generating summaries — a level of integration that was not possible with the generic API. The open-source route provided cost savings, customization, and integration depth that perfectly aligned with their needs.

When Local Models (On-Prem or Edge) Make Sense

Choose a local or on-premises deployment model (which could involve open-source or custom models) if data sovereignty and latency are critical, or if cloud usage is impractical or undesirable in your environment:

- Strict Regulatory or Security Requirements: This is the classic case for on-premises AI. If you work with highly sensitive data, such as personally identifiable information, trade secrets, or classified information, and there is absolutely no way such data can be sent to external servers, even to a managed cloud in your account, then your AI must run locally. For example, think of a defense agency that wants to use an LLM to analyze intelligence reports. By policy, that model likely has to run on an air-gapped network. The same goes for a healthcare provider dealing with patient records in a country with laws requiring all processing to happen on-prem. Local deployment using an open model can satisfy these needs. For this reason, many hospitals, banks, and governments in 2025 are testing LLMs behind firewalls. Additionally, some companies have internal policies against using certain big tech services for competitive reasons — they'd rather not send data to a third party at all. Local models align with these policies.

- Edge Computing and Low-Latency Environments: If your use case involves devices or locations with limited connectivity or requires real-time inference with very low latency, an on-device or on-premises local model is ideal. For instance, an AI assistant in a car might need to function offline. A small local LLM embedded in the car’s onboard computer can handle voice queries without relying on a cellular connection. Similarly, a factory floor robot that uses an LLM to parse instructions might be too slow or unreliable if it had to call a cloud API for every command. In this case, an on-premises model server in the facility can provide responses in under 50 ms over the local network. Keeping the model close to the source of the data eliminates network latency and the dependence on external connectivity. Early smartphone-based LLMs are already emerging (e.g., Qualcomm has demonstrated 7B–13B models running on devices). By 2025–2026, "tiny LLMs" with a few billion parameters or fewer are expected to become considerably more capable. Specialized models, such as Microsoft's Phi series, show how far optimization can go in resource-limited settings. For edge deployments, local models are often the only practical option.

- Custom Proprietary Models: If your organization has the resources and unique data to train its own model from scratch or build a heavily customized version, the model will inherently be local. For example, BloombergGPT (mentioned earlier) was trained on a mix of Bloomberg’s internal financial data and public data, resulting in a model tailored to finance tasks. Bloomberg runs it internally to power financial natural language processing (NLP) applications. Firms with extraordinary data advantages, or a need for knowledge that general models can't provide, typically undertake this kind of project: building their own foundation model. While uncommon, this scenario yields a model that is truly a proprietary asset. Running it locally ensures that neither the model nor the data leaks out. Even if you don't train a full model from scratch, a heavily fine-tuned model that encodes much of your intellectual property (IP) might be treated as a "local model" asset to keep in-house.

- Resilience and Independence: Organizations concerned with resilience, such as maintaining continuity during internet outages or avoiding reliance on external infrastructure that could fail, may deploy local models as a backup or primary system. For instance, consider a large contact center solution. If the connection to a cloud LLM fails, operations could halt. By hosting a model on-premises, they can ensure continuous service. Local models also mean that external changes do not affect you: if a provider rate-limits usage or changes an API, it does not impact you. This independence is crucial for mission-critical applications that require guaranteed availability and performance at all times.
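The failover behavior described under Resilience and Independence can be expressed as an ordered list of backends tried in turn. This is a minimal sketch, assuming each backend is wrapped in a plain function that takes a prompt and either returns text or raises an exception; `cloud_llm` and `local_llm` are hypothetical stand-ins, not real client libraries.

```python
import time
from typing import Callable, List, Sequence, Tuple

Backend = Tuple[str, Callable[[str], str]]  # (name, function that returns a completion or raises)


class AllBackendsFailed(RuntimeError):
    """Raised when every configured backend has failed."""


def complete_with_failover(prompt: str, backends: Sequence[Backend],
                           retries_per_backend: int = 1,
                           backoff_s: float = 0.0) -> Tuple[str, str]:
    """Try each backend in priority order; return (backend_name, completion)."""
    errors: List[str] = []
    for name, call in backends:
        for attempt in range(retries_per_backend + 1):
            try:
                return name, call(prompt)
            except Exception as exc:  # outages, timeouts, rate limits, API changes...
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if backoff_s and attempt < retries_per_backend:
                    time.sleep(backoff_s * 2 ** attempt)  # exponential backoff before retrying
    raise AllBackendsFailed("; ".join(errors))


# Hypothetical stand-ins for a cloud API client and an on-prem model server.
def cloud_llm(prompt: str) -> str:
    raise ConnectionError("cloud endpoint unreachable")


def local_llm(prompt: str) -> str:
    return f"[local] answer to: {prompt}"


if __name__ == "__main__":
    print(complete_with_failover("Reset my password",
                                 [("cloud", cloud_llm), ("local", local_llm)]))
```

Listing the local backend first and the cloud second gives a "local primary, cloud backup" setup; reversing the order treats the on-prem model as the backup that keeps the contact center running during an outage.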

Example: A government tax agency plans to use an AI assistant to answer citizens' tax questions at an interactive kiosk. However, the system cannot legally send taxpayer data or queries to any external cloud. The solution is to deploy a local large language model (LLM) in a secure data center operated by the agency. They selected an open-source 20-billion-parameter model and fine-tuned it using public tax codes and FAQs, making it highly accurate for tax-related questions and answers. They expose this model via an API to their kiosks and website, all within their private network. Responses are generated in a few hundred milliseconds, and no data ever leaves the government’s servers. While this model may not be as powerful as GPT-4, it is sufficiently accurate and fully compliant. The agency also avoids ongoing API costs: aside from hardware and maintenance, the model can handle thousands of queries per day at no additional per-query cost. Most importantly, they have full oversight. Their data scientists can audit the model’s outputs against official guidelines and, because they control the weights and the fine-tuning data, correct problems directly, which a black-box external model would not allow.

These scenarios are not mutually exclusive. In fact, many organizations adopt a hybrid approach, using different models for different needs. Let’s explore how hybrid and multi-model architectures can combine the best of each world.

Hybrid & Multi-Model Architectures

Often, the optimal solution is not an either/or choice, but rather a combination of closed, open, and local models working together. A hybrid approach lets you leverage each model where it has the greatest impact. Two common patterns are emerging in 2025–2026:

- Split by Use-Case or Data Type: Use a closed-source model for some tasks and an open/local model for others, depending on requirements.

- Split by Pipeline Stage or Ensemble: Use multiple models in a sequence or ensemble for a single task, mixing closed and open capabilities.

When does it make sense to combine models?

- Keeping Sensitive Data Local, Using Cloud for General Knowledge: One popular approach is to use a local model for sensitive queries or data and route other queries to a powerful cloud model. For example, consider a corporate chatbot. If a user asks a question involving proprietary strategy or unpublished data, the system uses an internal large language model (LLM) that has been fine-tuned on the company’s internal knowledge base. This ensures that no secrets leave the premises. However, if the user asks a generic question, such as "Explain the main requirements of GDPR," or anything else requiring broad world knowledge, the system might call an external API, like GPT-4, which has superior general knowledge and multilingual abilities. Essentially, the architecture has a router that classifies queries by sensitivity or type and delegates them to the appropriate model. This way, you get the best of both worlds: privacy for sensitive matters and raw power for everything else. While it adds complexity (maintaining two models and routing logic), it can optimize both compliance and performance. Tools and frameworks for such orchestration are emerging. For example, Amazon Bedrock exposes models from several providers, such as Anthropic's Claude and Meta's Llama, behind a single API and adds model evaluation and prompt-routing features, letting teams compare and route between models within one pipeline.

- Using Local Models as a First Pass or Filter: Another hybrid pattern is to use a smaller, less expensive model to handle most requests or preliminary processing and only escalate to a larger, closed model for the toughest cases. This is similar to a triage system. For instance, an AI customer support agent could use a 7B-parameter local model to respond to basic FAQs quickly and at minimal cost. If the agent detects a complex or uncertain query, it forwards the query, along with the context of what it has already attempted, to a more powerful model, such as GPT-4, for a final answer. This drastically reduces API usage while maintaining high-quality answers for difficult questions. The smaller model effectively filters or handles the easy workload. One variant of this is cascading models by confidence. If the first model is not confident in its answer, a second model is called. Implementing this strategy requires calibrating model confidence and establishing a mechanism to determine when to invoke the larger model. However, it can reduce costs while maintaining quality of service. Some companies report success with this "local plus cloud backup" strategy for controlling expenses.

- Multi-Model Ensembles for Different Strengths: For certain tasks, you can run multiple models (of any type) simultaneously and combine their outputs for better results. For example, in a coding assistant, you could query both GPT-4 and an open-source, code-specific model, such as CodeLlama or PolyCoder, and then use a system to select the best response or combine insights. In a creative task, such as story generation, one model could generate an outline, and another model with a different "style" could flesh it out, combining their strengths. When combining closed and open models, an ensemble could leverage the strengths of each model in different areas (e.g., language vs. technical accuracy). Research papers and tools on model ensembles suggest that outputs can be more reliable when models agree or when one model critiques another. In practice, however, ensembles increase inference cost, so they are used when quality trumps efficiency. For example, a research analysis system that requires the highest accuracy might run multiple models and cross-verify answers.

- Hybrid Cloud-Edge Deployments: One form of hybrid involves deploying a smaller model on edge devices and relying on a cloud model for more complex tasks. Consider a smartphone voice assistant: A small local model might handle wake-word detection and simple commands offline. However, for complex requests, it sends them to a cloud LLM. This approach provides users with some offline functionality and faster responses for simple commands, as well as the power of a cloud model for more complex requests. This setup requires a seamless handoff and consistency, meaning the assistant should behave coherently whether on the device or in the cloud. This is a design challenge, but a manageable one. As interest in on-device AI grows, such setups will likely increase.
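The cascading-by-confidence variant of the first-pass pattern can be sketched in a few lines. This sketch assumes the small model can report per-token log-probabilities (many inference servers can expose these) and uses their geometric mean as a crude confidence score. The 0.8 threshold and the `small_model`/`large_model` callables are hypothetical and would need calibrating against your own labeled queries.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Draft:
    text: str
    token_logprobs: List[float] = field(default_factory=list)  # per-token log-probabilities


def confidence(draft: Draft) -> float:
    """Geometric-mean token probability in [0, 1]; a rough proxy, not a calibrated score."""
    if not draft.token_logprobs:
        return 0.0
    return math.exp(sum(draft.token_logprobs) / len(draft.token_logprobs))


def cascade(query: str,
            small_model: Callable[[str], Draft],
            large_model: Callable[[str], str],
            threshold: float = 0.8) -> Tuple[str, str]:
    """Answer with the small model when it is confident; otherwise escalate with context."""
    draft = small_model(query)
    score = confidence(draft)
    if score >= threshold:
        return "small", draft.text
    # Forward what the small model already attempted so the large model can build on it.
    escalation_prompt = (
        f"User question: {query}\n"
        f"Draft answer from a smaller model (confidence {score:.2f}):\n{draft.text}\n"
        "Verify the draft and give a corrected final answer."
    )
    return "large", large_model(escalation_prompt)
```

Logging each escalation, along with whether the large model changed the answer, gives you the data needed to tune the threshold over time.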

Example Hybrid Architecture: A large enterprise software vendor has implemented a hybrid AI assistant in its product. For clients who require everything to run locally, they incorporate an open-source LLM (Llama 3.2, 11B) within the on-prem version of their software. This local model is fine-tuned for the enterprise domain and can answer how-to questions about the software and draft reports using the client’s own data, all of which remains behind the client’s firewall. For clients who opt into the cloud-connected version and allow data sharing, the assistant can call OpenAI’s GPT-4 via API for complex queries and when users request more creative, generalized assistance. The software's internal orchestration layer decides which model to use based on the nature of the query. If the query is about private user data or is a routine support question, the local model is used. If the query is a broad question that benefits from external knowledge, such as "Compare our company’s efficiency to industry trends," the cloud model is used. The team settled on this design after finding during development that GPT-4 was somewhat better at answering broad questions, while privacy rules prevented them from sending proprietary data to it. Splitting traffic this way gave them the strengths of both. Consequently, the product’s AI assistant can be marketed as both secure and smart, leveraging the best model for the task.
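The orchestration layer in this example boils down to a routing function. The sketch below is a deliberately simple, rule-based version. The regular expressions, keyword list, and the `uses_private_data` and `cloud_allowed` flags are hypothetical; a production system would more likely use a trained classifier or an existing data-loss-prevention service to detect sensitive content.

```python
import re
from typing import Callable, Tuple

# Hypothetical sensitivity rules for illustration only.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US Social Security number format
    re.compile(r"\b(confidential|internal only|unreleased|customer record)\b", re.IGNORECASE),
]


def is_sensitive(query: str, uses_private_data: bool) -> bool:
    """Treat a query as sensitive if it touches private data or matches any rule."""
    return uses_private_data or any(p.search(query) for p in SENSITIVE_PATTERNS)


def route(query: str,
          uses_private_data: bool,
          cloud_allowed: bool,
          local_model: Callable[[str], str],
          cloud_model: Callable[[str], str]) -> Tuple[str, str]:
    """Keep sensitive traffic (and all traffic for on-prem-only clients) on the local model."""
    if not cloud_allowed or is_sensitive(query, uses_private_data):
        return "local", local_model(query)
    return "cloud", cloud_model(query)
```

Checking permissions and sensitivity before capability mirrors the priorities in the example: privacy rules decide where a query may go, and only then does answer quality decide where it should go.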

Considerations for Hybrid Setups:

Although they are powerful, hybrid architectures introduce complexity. You need to maintain multiple models and potentially multiple code paths. Monitoring and debugging become more difficult (you have to track which model responded in each case and evaluate performance holistically). There’s also the user experience (UX) aspect to consider: ensuring consistent tone and behavior between models so the user doesn’t feel like they’re talking to two different AIs. Strong LLMOps practices are required, including logging model decisions, evaluating cost/latency trade-offs, and continuously training any custom models involved. It is often useful to start with a simpler approach, such as one model, and evolve into a hybrid as you identify clear needs to do so.

However, given the rapid evolution of models, multi-model ecosystems are likely to become the norm in enterprises. As in Part 1, where we discussed combining RAG and fine-tuning for better results, here we see that combining model choices (closed and open) can yield the best outcome. The key is to design your AI stack in a modular way so you can plug in the appropriate model for each context. You should also have a control layer, sometimes called an "LLM Orchestrator" or agent manager, that handles routing and combining results.

Decision Playbook for CTOs and Technical Leaders

Having covered the landscape and trade-offs, let’s synthesize this into a practical playbook. If you are a CTO, technical founder, or product leader deciding on your AI model strategy, here’s a step-by-step approach:

1. Clarify Your Priorities & Constraints: Begin by identifying the non-negotiable aspects of your application. For example, is data compliance (privacy, residency, and IP protection) a strict requirement? Is time to market critical? What level of quality do you truly need? Is "good enough" sufficient, or do you need the best? What is your budget for the short term (prototyping) and the long term (scaling)? Also, assess your team’s capabilities. Do you have ML engineers to run models, or would you need to rely on external partners? Listing and ranking these factors will clarify the decision. For example, if no user data can leave your servers, you should focus on open or local options. If you have no ML engineers on staff, that points toward closed APIs or an external partner.

2. Pilot and Benchmark Different Models: If feasible, it’s often helpful to conduct a brief pilot study with each approach. Try a sample of queries on a top closed model via API and on a leading open model. You can use a cloud instance or a platform like Hugging Face for quick testing. Compare the quality and note the differences. Measure the approximate latency and costs. This doesn't have to be a full integration: running a few dozen key queries through each can reveal a lot. If an open model's output is nearly as good as a closed model's for your specific task, that's a sign that you could use an open-source model without sacrificing much. Conversely, if the closed model clearly outperforms, especially on edge cases that matter to you, that might justify a closed or hybrid approach. Also, consider domain-specific models. For example, if your task is code generation, an open, code-specialized model may outperform a general model. Collecting these empirical insights will ground your decision in evidence rather than vendor claims.

3. Weigh Immediate vs Long-Term Needs: Strategically, you may choose one approach for the initial rollout and a different one for scaling up. For example, many teams start with a closed API during the MVP/prototype phase to validate the product. Then, they switch to an open or custom model for the production phase to reduce costs and gain more control. This is a valid strategy — just design your system with that future switch in mind (abstract the model interface so you can swap backends). Conversely, if you anticipate regulatory audits or client scrutiny from day one (e.g., enterprise clients asking, "Does our data leave the system?"), you may need to start with an open/local model. It's about balancing short-term expediency versus long-term optimization. Using closed models for a pilot or internal prototype can be perfectly sensible, even if your end goal is to deploy locally, so you don't invest heavily until the concept is proven.

4. Consider a Hybrid Approach Early: As we explained, a hybrid solution can mitigate many trade-offs. Don’t fall into binary thinking ("We must choose closed or open"); you can mix and match. For example, you could decide that the primary model will be open source and deployed in the cloud, but use a closed provider’s API for specific niche capabilities, such as summarizing very long documents, if the closed model excels at that with its 100K-token context window. Alternatively, you could use the closed model as the primary model and use an open local instance to handle any data that the legal team says can’t be sent out. Mapping specific functions to model types is a powerful strategy. Just be sure you have a clear architecture for how these will interact. As noted, plan a routing/orchestration layer.

5. Address LLMOps and Expertise Gaps: If leaning towards open or local, make an honest assessment of who will build and maintain the model infrastructure. Do you have the people in-house? If not, you have a few options:

- Upskill or hire MLOps engineers (time-consuming and expensive, but builds internal capability).

- Use a managed platform for open models – for example, some vendors offer “LLM hosting” where they manage scaling for an open model of your choice. This can be a middle ground (you use open source but outsource the ops).

- Partner with an AI development firm that specializes in custom AI solutions. This is often a smart move if you want the benefits of open-source or custom models but don’t have the expertise. A partner can set up the pipelines, integrate the model, and even monitor it post-deployment as a service. Bringing in experienced partners can de-risk the adoption of open or local models by filling your team’s knowledge gaps. They can also assist in fine-tuning models or implementing hybrid architectures correctly – essentially acting as your extended AI R&D team.

If you choose to remain with closed models, your ops burden is lighter, but you should still designate someone to keep track of API usage, costs, and any model updates the provider releases (for example, moving to GPT-4 Turbo when it launched at a lower price than GPT-4). In all cases, establish at least basic monitoring for your model’s outputs (open or closed). You want to track quality and any drift or issues over time.

6. Plan Governance and Compliance: Work closely with your security, legal, and compliance officers from the beginning. Make sure that, whichever route you choose, you have answers to questions such as: Where is our data going? Is it encrypted? Do we comply with GDPR, CCPA, etc.? How will we handle model mistakes or biases? If you are using closed models, review the provider’s terms. Do they use your data for training purposes? Most top vendors allow enterprises to opt out, but you might need a contract. If you are using an open model, make sure that your use of the model weights complies with the license. For example, some open models have non-commercial use clauses or require attribution. Also, consider intellectual property ownership, as AI outputs can raise ownership questions. With a closed API, you usually have the right to use the outputs, but check the terms for any restrictions. If you fine-tune a custom model, you generally own the improvements. Having a governance framework in place will facilitate adoption, especially for enterprise or customer-facing deployments.

7. Develop a Switch/Mitigation Strategy: Technology and business needs are constantly changing. You may start with one approach and later need to switch. It’s wise to design modularly so that you can replace a model component without rewriting your entire application. For example, if you initially build against OpenAI’s API, encapsulate all of those calls in one service layer so that you can later redirect them to an internal model. Similarly, if you start with open-source technology and find that it doesn't meet your needs in one area, you could swap in a closed model for that part. Keep an eye on emerging models, too. The landscape changed dramatically from 2023 to 2025 (who knew in early 2023 that an open model would beat GPT-4 on some benchmarks by 2024?). It will likely continue to shift. Be prepared to upgrade your chosen model or pivot to a better one in the future. This might involve retraining or re-testing. In your project planning, budget time periodically to evaluate new models or updates. For example, when GPT-5 or Llama 4 is released, you’ll want to determine whether the benefits justify switching.

8. Leverage Expertise and Partners: If in doubt, consult with experts. Reach out to the AI community or specialists who have done similar implementations. Many companies engage an AI consulting or development partner early to help make this decision and even build POCs. For instance, working with a team like Intersog’s AI development services (which has experience in both integrating third-party AI and developing custom AI solutions) can accelerate your decision-making. We can provide a vendor-neutral perspective on what combination might work best given your unique case. Moreover, a partner can help implement the solution and even provide ongoing support, which is valuable if your internal team is lean. The cost of bringing in external expertise is often far less than the cost of a misstep in such a core technology choice.

9. Pilot, then Gradually Expand: Once you have decided on a path (or a hybrid path), begin with a pilot deployment. This could involve a subset of users or a limited feature set that you can test in the real world. Measure everything: model output quality, user satisfaction, latency, and cost. This will validate or challenge your assumptions. Be prepared to iterate. Perhaps the pilot will show that your open model needs further fine-tuning or that users don't mind slightly slower responses, meaning you can batch requests for cost savings. Use these insights to refine the system. Once you are confident, scale up to full deployment. Maintain an iterative mindset and treat the model choice as something you continually evaluate, even after deployment. Don't treat it as a static, one-and-done decision.

10. Keep an Eye on the Horizon: The 2025–2026 period will undoubtedly bring new models, such as GPT-5, Claude 4, and Llama 4, as well as new paradigms, including efficient fine-tuning methods and improved knowledge integration. Stay informed about these developments. In a year, it's possible that an open model could surpass the proprietary ones in your field (or vice versa). Being aware of these developments will allow you to quickly take advantage of improvements. For example, if a new open model emerges with twice the performance at the same cost, you can plan to adopt it. This doesn't mean chasing every new release, but you should allocate some R&D time to benchmark new models against your current solution. Adopting a flexible mindset will ensure that your AI stack remains optimal over time, not just at the moment you built it.
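Steps 2, 3, and 7 all assume you can put different models behind the same interface and compare them on your own queries. One minimal way to sketch that in Python is a small `typing.Protocol` that every backend implements, plus a pilot loop that records a pass rate and median latency per model. `EchoModel` is a hypothetical stand-in; real backends would be thin adapters around your API client or self-hosted server, and the exact-match grader is only a placeholder for a task-appropriate evaluation.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Callable, List, Protocol, Sequence, Tuple


class ChatModel(Protocol):
    """The only surface application code depends on, so backends can be swapped."""
    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Hypothetical stand-in backend; replace with adapters for real APIs or servers."""
    name: str
    prefix: str = ""

    def complete(self, prompt: str) -> str:
        return f"{self.prefix}{prompt}"


@dataclass
class PilotResult:
    model: str
    pass_rate: float
    median_latency_ms: float


def run_pilot(models: Sequence[ChatModel],
              cases: Sequence[Tuple[str, str]],
              grader: Callable[[str, str], bool]) -> List[PilotResult]:
    """Run every (prompt, expected) case through every model and summarize the results."""
    results = []
    for model in models:
        passed, latencies = 0, []
        for prompt, expected in cases:
            start = time.perf_counter()
            output = model.complete(prompt)
            latencies.append((time.perf_counter() - start) * 1000)
            passed += grader(output, expected)
        results.append(PilotResult(model.name, passed / len(cases),
                                   statistics.median(latencies)))
    return results


if __name__ == "__main__":
    cases = [("hello", "hello"), ("world", "world")]
    exact = lambda output, expected: output.strip() == expected
    for r in run_pilot([EchoModel("baseline"), EchoModel("noisy", prefix="x")], cases, exact):
        print(f"{r.model}: pass rate {r.pass_rate:.0%}, median {r.median_latency_ms:.3f} ms")
```

Because application code only calls `complete`, redirecting traffic from a closed API to an internal model means writing one new adapter rather than touching every call site, and the same pilot loop can be rerun whenever a new model is released.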

A Note on Build vs. Partner

For many leaders, one critical decision is whether to develop AI capabilities in-house or partner with a vendor or developer. The same applies to the model layer. Building in-house, especially for open or local models, provides the most control, but it requires substantial expertise and investment. Partnering, whether by using a closed model API or by hiring a specialized AI software development company, can accelerate progress and offload risk.

Consider partnering in scenarios like:

- You want a custom open-source solution but lack an experienced ML engineering team – a development partner can create the solution and even train your staff to maintain it.

- You plan a hybrid model deployment and need guidance on architecture – consulting with experts who’ve done it before can prevent costly mistakes.

- You’re open to closed models but want to negotiate enterprise terms (cost, data handling) – working with your cloud provider’s experts or a consultancy can help secure a good deal and implement it correctly.

- You foresee needing ongoing support (e.g. model updates, monitoring) that your team can’t handle alone – a partner can provide managed services.

On the flip side, if AI is core to your business’s competitive advantage, building internal capability (even if slower at first) might be worth it long-term. Many large enterprises are investing in internal “AI Centers of Excellence” that eventually handle open model deployments and even develop custom models. This path demands commitment from leadership to hire and retain AI talent.

For most, a balanced approach works best: use external help to get started, while growing internal competency in parallel. For example, you could engage a firm to deliver the first version of a custom model solution while your engineers shadow and train with them. Later, your team could take over incremental improvements.

Remember, the goal is not just to choose a model but also to deliver business value with AI. Sometimes the fastest way to achieve this is to get help from those who have traveled this road before.

Conclusion: Strategic Model Layer Decisions Drive AI Success

The choice between closed, open-source, and local models is a strategic decision that will have a long-lasting impact on the success of your AI application. There is no universally “right” answer — the best choice depends on your unique mix of requirements regarding data, cost, performance, and resources.

To recap:

- Closed-source models offer unparalleled ease and cutting-edge capabilities, making them ideal for quick wins and scenarios where quality trumps all else and data sensitivity is low. They can jump-start your AI features in days, but require trust in vendors and a watchful eye on cost as you scale.

- Open-source models empower you with control and flexibility. They shine when you need to customize the AI deeply or keep costs manageable at large scale. With the performance gap to closed models rapidly shrinking, open LLMs in 2025 are a viable engine even for enterprise-grade solutions – provided you invest in the MLOps to support them. They embody the “build your own AI stack” ethos, giving tech leaders autonomy and freedom to innovate without vendor constraints.

- Local models ensure your AI works within your four walls (or devices), which is indispensable for stringent compliance, low-latency edge use cases, and organizations prioritizing data sovereignty. Running models on-premises is no trivial feat, but for many it is the only acceptable path. With the right optimizations and hardware, local models can deliver impressive results (for example, modern 13B-parameter models running on a single server, or 7B models on a high-end laptop) and remove any dependence on external providers at inference time.

We also explored how hybrid approaches can offer the best of both worlds. For example, you can use the strengths of a closed model for general tasks while keeping sensitive interactions on a self-hosted open model for privacy. This underscores a key point: the future of AI in business is likely to be multi-model rather than reliant on a single LLM. Successful strategies will often involve orchestrating several models and continually reassessing their roles as technology evolves.
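To make the hybrid idea concrete, here is a minimal Python sketch of a privacy-based router. It assumes two hypothetical backends: `call_closed_api` stands in for a vendor API and `call_local_model` for a self-hosted open model. The sensitivity check is a deliberately naive regex filter (e-mail addresses and US Social Security-style numbers), assumed purely for illustration; a production system would rely on a dedicated PII or data-loss-prevention classifier.

```python
import re
from dataclasses import dataclass
from typing import Callable


# Hypothetical backends: in practice these would wrap a vendor SDK call
# (closed model) and a request to a self-hosted inference server (open model).
def call_closed_api(prompt: str) -> str:
    return f"[closed] {prompt[:20]}"


def call_local_model(prompt: str) -> str:
    return f"[local] {prompt[:20]}"


# Naive sensitivity check, assumed for illustration only: flags e-mail
# addresses and SSN-shaped numbers. Not a substitute for a real PII classifier.
SENSITIVE_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
]


def is_sensitive(prompt: str) -> bool:
    return any(p.search(prompt) for p in SENSITIVE_PATTERNS)


@dataclass
class Route:
    backend: str  # which model handled the request
    answer: str   # the model's response


def route(
    prompt: str,
    local: Callable[[str], str] = call_local_model,
    closed: Callable[[str], str] = call_closed_api,
) -> Route:
    """Keep sensitive prompts in-house; send everything else to the closed model."""
    if is_sensitive(prompt):
        return Route("local", local(prompt))
    return Route("closed", closed(prompt))
```

For example, `route("Summarize the Q3 roadmap")` goes to the closed backend, while a prompt containing a customer's e-mail address stays on the local model. Passing the backends in as parameters also makes it easy to change which model handles each class of request later.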

As a CTO or product leader, your role is to make these choices in alignment with business strategy. This means asking not just “Which model is better?” but also “Which model choice gives our product and users the most value while aligning with our risk tolerance and capacity?” Always tie the decision back to strategic goals, such as user experience, competitive differentiation, cost efficiency, and compliance. For example, if user trust is paramount, it may be worth leaning toward a solution that maximizes privacy, even if it results in slightly lower accuracy. Alternatively, if the goal is to be first to market with a breakthrough AI feature, using a ready-made closed API to deploy quickly might outweigh other concerns (you can refine it later).

We encourage you to use the frameworks and decision matrix provided in this guide when making your decision: they are designed to prompt the right questions and make the trade-offs explicit. Engage stakeholders from IT, security, legal, and business units early so the decision is well-informed from all angles. This holistic approach helps prevent late-stage roadblocks, such as discovering that compliance won't approve a cloud API or that the cost model doesn't work at scale.
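As a rough illustration of how a weighted decision matrix turns stakeholder input into a ranking, the sketch below scores each option on the dimensions this guide uses (privacy, cost, performance, customization, and vendor risk). The weights and the 1-5 scores are hypothetical placeholders, not recommendations; substitute the values your own team agrees on.

```python
# Hypothetical weights reflecting one organization's priorities (sum to 1.0).
WEIGHTS = {
    "privacy": 0.30,
    "cost": 0.20,
    "performance": 0.25,
    "customization": 0.15,
    "vendor_risk": 0.10,  # higher score = lower vendor risk
}

# Placeholder 1-5 scores (5 = best fit for this organization).
# Replace these with your own team's assessment.
SCORES = {
    "closed": {"privacy": 2, "cost": 3, "performance": 5,
               "customization": 2, "vendor_risk": 2},
    "open_source": {"privacy": 4, "cost": 4, "performance": 4,
                    "customization": 5, "vendor_risk": 4},
    "local": {"privacy": 5, "cost": 3, "performance": 3,
              "customization": 4, "vendor_risk": 5},
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of score * weight over every dimension in the weights table."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(scores[dim] * w for dim, w in weights.items())


def rank_options(all_scores, weights):
    """Return (option, total) pairs sorted from best to worst fit."""
    totals = {name: weighted_score(s, weights) for name, s in all_scores.items()}
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


for option, total in rank_options(SCORES, WEIGHTS):
    print(f"{option:12s} {total:.2f}")
```

Try adjusting the weights to see how sensitive the ranking is to your priorities; if a small change flips the winner, that dimension deserves more discussion with stakeholders.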

Finally, remember that the field of AI is dynamic. The capabilities of models in 2026 will likely surpass those of the best models in 2025, and the open versus closed landscape could shift further. For example, we might see more "partially open" models, new licensing schemes, or proprietary models offered in on-premises containers. Maintain agility. The right AI stack is not a static set of tools but a continually evolving ensemble that you refine as the ecosystem grows. Part of that is staying informed (reading research and following industry benchmarks) and building modular systems that let you swap out components as better options emerge.
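One way to build in that flexibility is to have application code depend on a small model interface rather than on a specific vendor SDK. The Python sketch below assumes a hypothetical `TextModel` protocol with two placeholder implementations and a registry keyed by a configuration value; the class and function names are illustrative and not part of any real library.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface the application codes against (hypothetical)."""
    name: str

    def generate(self, prompt: str) -> str: ...


class ClosedApiModel:
    # Placeholder: in practice this would wrap a vendor SDK call.
    name = "closed-api"

    def generate(self, prompt: str) -> str:
        return f"closed-api says: {prompt}"


class SelfHostedModel:
    # Placeholder: in practice this would call an on-premises inference server.
    name = "self-hosted"

    def generate(self, prompt: str) -> str:
        return f"self-hosted says: {prompt}"


# Registry keyed by a config value, so switching models is a config change.
REGISTRY: dict[str, TextModel] = {
    "closed-api": ClosedApiModel(),
    "self-hosted": SelfHostedModel(),
}


def get_model(config_name: str) -> TextModel:
    try:
        return REGISTRY[config_name]
    except KeyError:
        raise ValueError(f"unknown model backend: {config_name!r}") from None


def answer(question: str, model_name: str) -> str:
    # Application logic depends only on the TextModel interface.
    return get_model(model_name).generate(question)
```

With this structure, moving a feature from a closed API to a self-hosted model is a configuration change, e.g. `answer("hi", "self-hosted")`, rather than a rewrite of the calling code.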

In summary, treat model layer decisions as a strategic exercise, not just a technical one. The choices you make will influence your AI application’s ROI, risk profile, and ability to deliver value to users. By carefully evaluating closed, open, and local options — and possibly combining them — you can craft an AI solution tailored to your organization's needs and primed for sustainable success. Whether you deploy the most advanced closed LLM via API, fine-tune an open model, or run an ensemble on your own servers, the important thing is that it achieves your desired outcomes in a way that you can support and scale.

The AI stack is like a puzzle; with this guide (Part 1 on RAG and fine-tuning, Part 2 on model selection), you now have key frameworks to put the pieces together. As you move forward, approach new AI technologies with excitement and pragmatism. Evaluate how each technology can fit into your architecture and determine whether it advances your strategy. With a clear understanding of trade-offs and a willingness to adapt, you will be well-equipped to navigate rapid AI advancements and consistently select the appropriate model for each task.

Good luck on your AI journey, and may your stack choices propel you to innovative, effective solutions!

Leave a Comment