The era of simple bots is giving way to genuinely autonomous AI agents. Advances in large language models (LLMs), retrieval‑augmented generation and multi‑agent orchestration have made it possible for software systems to handle complex objectives with minimal supervision. Surveys show that around 65 % of companies already employ generative AI and agentic solutions, and the pace of adoption continues to accelerate. Organisations from banks to marketing agencies are asking what AI agents are and how they differ from chatbots, assistants, copilots and agentic AI. The difference matters because startups and enterprises are deciding where to invest in automation, and misjudging the capability or cost of a solution can lead to underperformance or waste.

This article demystifies the landscape for 2025, explains how AI agents work, compares them with other AI tools and provides guidance on when (and when not) to use AI agents. It also highlights development best practices and illustrates real‑world applications, including Intersog’s own Deana AI assistant.

From chatbots to autonomous agents: making sense of the buzzwords

Chatbots: rule‑bound conversational scripts

Chatbots are often the entry point for businesses exploring conversational AI. They simulate human conversation but operate on scripted decision trees and pre‑defined responses. Chatbots handle simple repetitive tasks by following rules; they answer FAQs, automate HR leave requests and push marketing promotions. Because their behaviour is rigid, chatbots scale easily and cut support costs as they can handle up to 80 % of routine inquiries. However, their limited adaptability and weak contextual memory mean they struggle with nuanced conversations and require well‑structured data.

Assistants: context‑aware helpers with human‑like dialogue

AI assistants go beyond script‑driven chatbots by drawing on user context, past interactions and multimodal inputs. Tools like Alexa and Google Assistant can interpret voice commands, retrieve information, and personalize responses. These assistants are useful in situations where multitasking, information retrieval, and real-time responsiveness are critical. They can handle tasks such as smart scheduling, real-time translation, and health tech data analysis. However, even these assistants struggle with vaguely defined prompts or complex, multi-turn workflows. Most require human validation for decisions and operate within limited domains.

Copilots: domain‑specific productivity partners

AI copilots are specialized collaborators that accelerate work in specific domains. Using generative AI, they anticipate user needs, automatically generate outputs, and recommend next steps. In software development, for example, a copilot might automatically generate SQL queries, suggest code fixes, or draft technical documents. A McKinsey study found that generative AI enables developers to complete coding tasks twice as fast. However, copilots often require significant system integration, continuous fine-tuning, and human approval.

Autonomous AI agents: digital workers with their own goals

AI agents represent the pinnacle of automation. Unlike chatbots or copilots that assist a human, AI agents independently plan, make decisions and act to accomplish multi‑step objectives. They can be described as “autonomous digital workers” that observe their environment, analyse data, strategise solutions and execute actions. Agents break down goals into sub‑tasks, determine execution paths and adjust strategy based on real‑time feedback. They possess deep contextual memory, multimodal input capability and can coordinate with other agents. This autonomy allows them to handle complex domains like logistics or cybersecurity, where rapid decisions at scale are crucial.

Comparing chatbots, assistants, copilots and AI agents

Businesses must choose the right tool for the right problem. The table below summarises differences in interaction style, autonomy, context retention, proactivity and integration complexity.

This progression illustrates that AI agents are not just “fancier chatbots.” They incorporate elements of chatbots, assistants and copilots but add decision‑making, planning and true agency. When considering AI agents vs. chatbots, decision makers must assess whether their problem requires autonomy and adaptation or can be solved with a scripted workflow. Startups may find that using a chatbot or copilot is sufficient until the complexity of their operations demands a fully autonomous AI agent.

How AI agents work under the hood

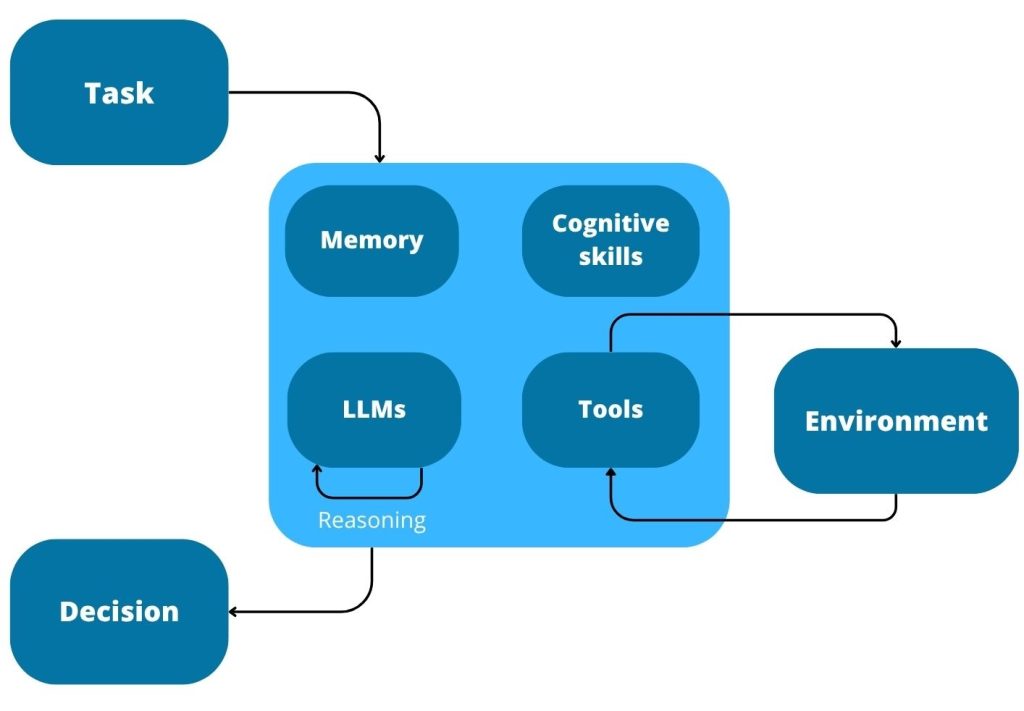

Understanding how AI agents work helps demystify their capabilities and limitations. The architecture of an agent can be described as a combination of five key components:

- LLM “brain” – At the core is a large language model capable of understanding natural language, reasoning and generating coherent responses. This model provides the agent with a conceptual understanding of tasks and instructions.

- Memory and context – Agents must remember past interactions and maintain context across multi‑step tasks. A memory module stores conversation history and relevant data so the agent can recall previous states.

- Retrieval‑augmented generation (RAG) – Agents augment LLM responses with external information. When the agent needs facts outside its context window, it issues search queries or database lookups, then integrates the retrieved content into its output.

- Tool integrations – Tools are functions the agent can call to perform actions beyond text generation. They might include APIs for sending emails, updating calendars, executing scripts or controlling IoT devices. Agents decide which tool to invoke based on the task and can chain multiple tools.

- Goal‑driven decision engine – Finally, an agent’s planning module decomposes user goals into sub‑tasks, sequences tool calls and monitors progress. This decision engine uses chain‑of‑thought prompting, planning algorithms or reinforcement learning to adapt to changing conditions.

These components allow an agent to move beyond simple conversation and perform autonomous tasks. For example, a marketing agent might recognise that a campaign underperforms, retrieve relevant data, adjust ad budgets and schedule new content without a human telling it to do so.
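To make the interplay of these components concrete, here is a minimal sketch of an agent loop in Python. Everything in it is an assumption for illustration: the tool names (`get_campaign_ctr`, `set_daily_budget`), the hard-coded data and the rule-based `decide` method, which stands in for the LLM "brain" and planning module. A real agent would prompt a model at that step and call real APIs.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


# Hypothetical tools: plain functions the agent is allowed to call.
def get_campaign_ctr(campaign: str) -> float:
    """Stand-in for a retrieval step (e.g. an analytics lookup)."""
    return {"spring_sale": 0.4, "newsletter": 2.1}.get(campaign, 0.0)


def set_daily_budget(campaign: str, amount: float) -> str:
    """Stand-in for an action performed through an external API."""
    return f"budget for {campaign} set to {amount:.2f}"


TOOLS: dict[str, Callable[..., Any]] = {
    "get_campaign_ctr": get_campaign_ctr,
    "set_daily_budget": set_daily_budget,
}


@dataclass
class Step:
    tool: str
    args: dict[str, Any]
    result: Any


@dataclass
class Agent:
    campaign: str
    ctr_threshold: float = 1.0  # assumed threshold for "underperforming"
    memory: list[Step] = field(default_factory=list)  # record of past steps

    def decide(self) -> Optional[tuple[str, dict[str, Any]]]:
        """Rule-based stub for the LLM: choose the next tool call, or None when done."""
        done = {step.tool: step for step in self.memory}
        if "get_campaign_ctr" not in done:
            return "get_campaign_ctr", {"campaign": self.campaign}
        ctr = done["get_campaign_ctr"].result
        if ctr < self.ctr_threshold and "set_daily_budget" not in done:
            return "set_daily_budget", {"campaign": self.campaign, "amount": 50.0}
        return None

    def run(self, max_steps: int = 5) -> list[Step]:
        """Decide, act, remember; stop when the stub has nothing left to do."""
        for _ in range(max_steps):
            action = self.decide()
            if action is None:
                break
            tool, args = action
            result = TOOLS[tool](**args)
            self.memory.append(Step(tool, args, result))
        return self.memory


if __name__ == "__main__":
    for step in Agent("spring_sale").run():
        print(step.tool, step.args, step.result)
```

The `max_steps` cap is a deliberate design choice: it bounds cost and latency even if the decision step never signals completion.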

Multi‑agent systems: distributed intelligence

Single agents can achieve impressive results, but many complex tasks require multiple specialised agents cooperating. Multi‑agent systems (MAS) are distributed networks where each agent focuses on a specific role, and an orchestration layer coordinates them. Specialisation allows agents to operate in parallel and offload repetitive work, while humans focus on strategy. A budget pacer agent can automatically reallocate spending across channels, reacting to real‑time performance.

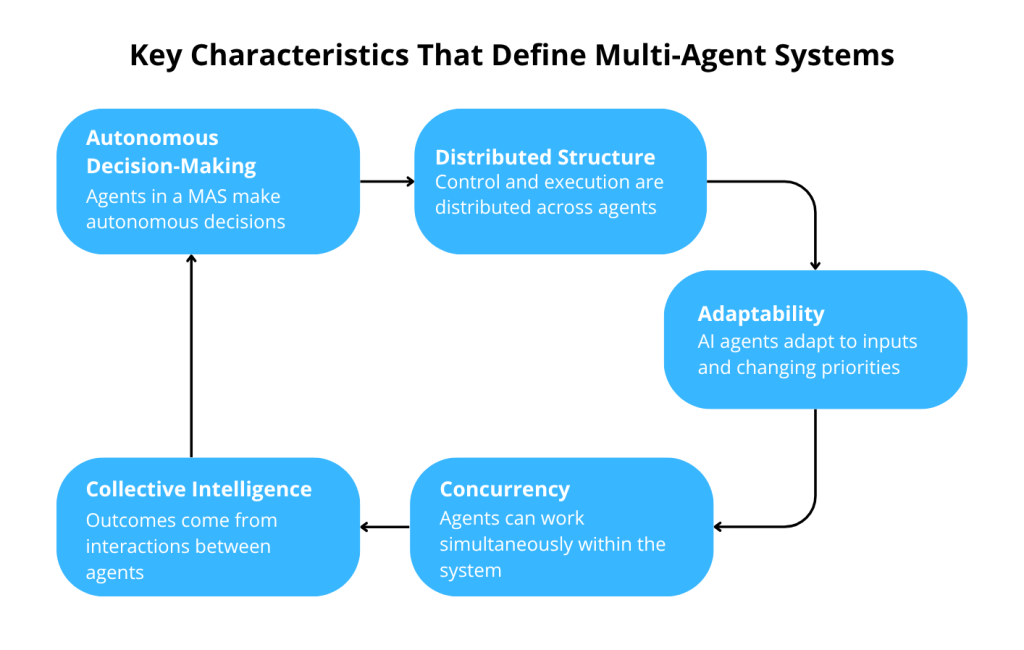

MAS are distributed systems of autonomous agents interacting through an orchestration layer; they feature autonomous decision‑making, distributed structures, adaptability, concurrency and collective intelligence.

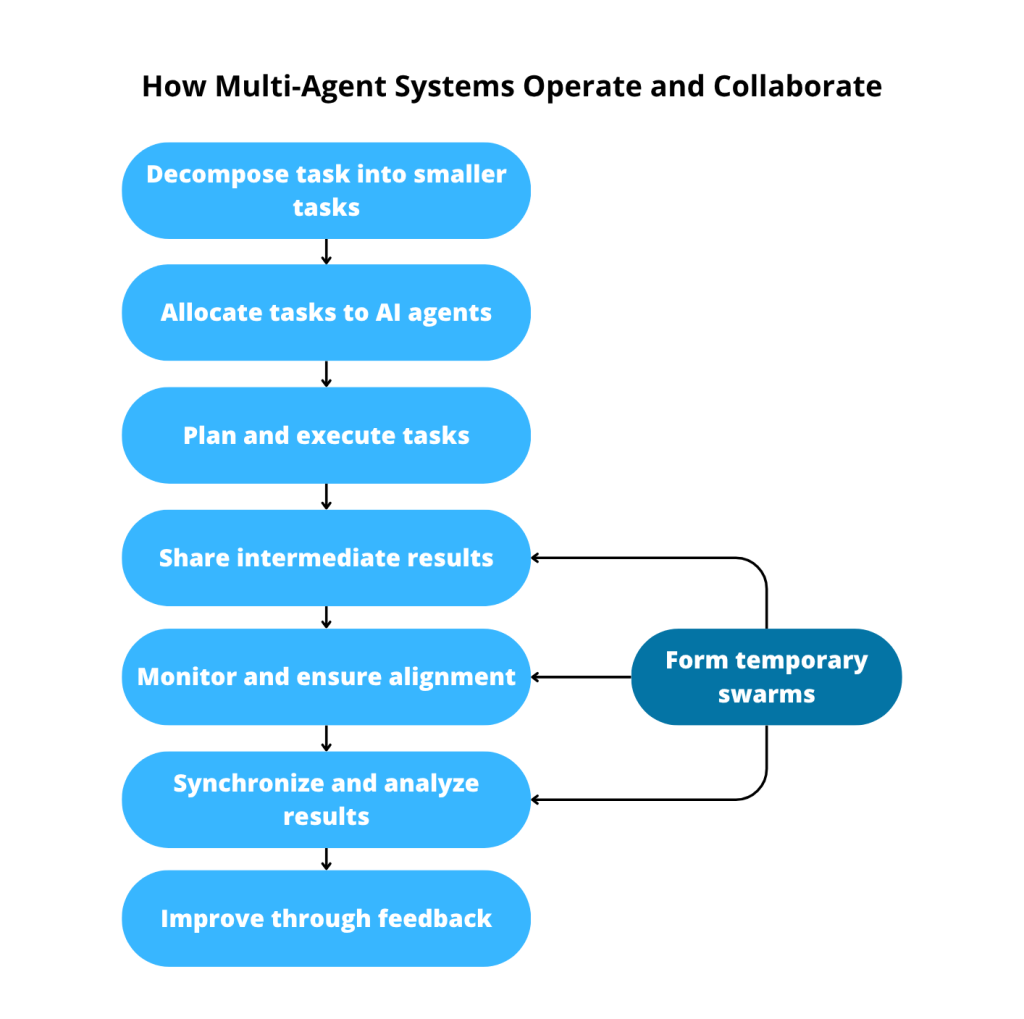

In practice, tasks are decomposed and allocated to agents; results are shared and aggregated; and the system can reassign tasks and handle errors. Orchestration agents manage task assignment, route data, enforce policies, handle parallel and sequential flows and monitor performance.

How multi‑agent systems work

At a high level, a multi‑agent system functions through decomposition, delegation and orchestration. When the system receives a complex request or objective, it breaks the problem into smaller, independent sub‑tasks. These sub‑tasks are then delegated to individual agents that specialise in those roles. Each agent performs its portion of the work (querying data, taking actions or generating reports) and returns its results to the system. The MAS then aggregates the outputs, checks for completeness and correctness, and either delivers the final result or feeds it into subsequent tasks. If an agent fails or returns an unsatisfactory result, the system reassigns the task to another agent or retries it.

An orchestration agent or controller plays a critical role in this process. It assigns tasks to the appropriate agents, routes inputs and outputs, enforces policies, manages parallel and sequential flows, handles errors and monitors performance. In more decentralised MAS, agents may negotiate or coordinate peer‑to‑peer, but most enterprise systems use a central orchestrator for predictability and compliance. This combination of specialised agents and a coordinating layer enables MAS to handle complex workflows efficiently and adapt to changing conditions.
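The decompose, delegate, aggregate and reassign cycle described above can be sketched with a small central orchestrator. This is an illustrative toy, not a reference design: the agent functions, the `REGISTRY` layout and the fixed plan returned by `decompose` are all hypothetical, and a production system would add policy checks, parallel execution and monitoring.

```python
from typing import Callable

Worker = Callable[[str], str]


# Hypothetical specialised agents, modelled as plain functions.
def data_fetcher(task: str) -> str:
    return f"data for {task}"


def flaky_reporter(task: str) -> str:
    # Simulates an agent that fails so the orchestrator has to reassign.
    raise RuntimeError("reporting service unavailable")


def backup_reporter(task: str) -> str:
    return f"report for {task}"


# Role -> ordered list of agents; later entries act as fallbacks.
REGISTRY: dict[str, list[Worker]] = {
    "fetch": [data_fetcher],
    "report": [flaky_reporter, backup_reporter],
}


def decompose(objective: str) -> list[tuple[str, str]]:
    """Fixed two-step plan (an assumption); a real system might ask an LLM to plan."""
    return [("fetch", objective), ("report", objective)]


def orchestrate(objective: str) -> dict[str, str]:
    """Delegate each sub-task, reassigning to the next agent on failure."""
    results: dict[str, str] = {}
    for role, task in decompose(objective):
        for agent in REGISTRY[role]:
            try:
                results[role] = agent(task)
                break  # sub-task done, move on to the next one
            except Exception:
                continue  # try the next agent registered for this role
        else:
            raise RuntimeError(f"no agent could complete {role!r}")
    return results


if __name__ == "__main__":
    print(orchestrate("Q3 sales"))
```

Here the `report` sub-task falls through to `backup_reporter` after the first agent raises, which is the reassignment behaviour in miniature.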

Why MAS matter:

- Specialisation and parallelism: Each agent focuses on a narrow expertise and tasks can run concurrently, accelerating workflow completion.

- Tool management: Agents can each manage different tool sets (CRM, email, analytics) so the overall system can interface with numerous external systems.

- Fault tolerance and scalability: Distributed agents allow the system to recover from failures by reassigning tasks, and new agents can be added to scale workload.

In 2025, MAS are increasingly used for complex enterprise processes such as supply‑chain optimisation, financial trading and large‑scale IT operations. Understanding MAS helps differentiate AI agents (single autonomous units) from agentic AI (multi‑agent systems tackling complex, evolving goals).

Agentic AI vs. AI agents: clarifying the terminology

The terms “AI agent” and “agentic AI” are often conflated, but they refer to different things. Forbes clarifies that AI agents are specific applications built to perform multi‑step tasks on behalf of users, while agentic AI is a broader field of research aimed at creating AI systems capable of autonomous, goal‑oriented behaviour. Agentic AI underpins the development of AGI but is not itself a product.

Moveworks explains that agentic AI systems coordinate multiple agents to achieve goals; they emphasise autonomy, complex reasoning, learning and planning. We can use the analogy of an executive assistant (agentic AI) versus a specialised team member (AI agent): the executive assistant can set the agenda and plan meetings (agentic), while the team member executes a specific task. AI agents generally operate within a narrower domain and follow prescribed decision trees or protocols.

The table below summarises the key differences between these two concepts:

| Dimension | AI agent | Agentic AI |
| --- | --- | --- |
| Autonomy | Executes tasks within predefined rules; can make decisions inside a well‑specified scope | Highly autonomous; sets its own goals, plans strategies and coordinates multiple agents |
| Scope & complexity | Narrow domain; specialises in monitoring or processing specific data or workflows | Broad, complex objectives that may span domains and require long‑term adaptation |
| Decision‑making | Follows decision trees and protocols; often reactive | Proactive, evaluates options and chooses actions based on expected outcomes |
| Learning & adaptability | Limited adaptation; must be reprogrammed for new situations | Learns from experience, adapts to new environments and refines strategies over time |
| Proactiveness & planning | Responds to user inputs and triggers tasks; seldom sets long‑term plans | Proactively identifies problems, generates plans and orchestrates multiple agents toward overarching goals |

Recognising these differences is important when researching AI agent vs agentic AI. For most business applications, especially startups, building an AI agent is appropriate because the tasks are well scoped (e.g., reset passwords, route tickets, schedule meetings). Agentic AI remains largely in research and high‑stakes enterprise settings where holistic, long‑term planning is needed.

When to use an AI agent and when not to

Deciding whether to invest in an AI agent depends on task complexity and business value. Before building an agent, evaluate the use case against four criteria:

- Task complexity: Can the task be cleanly decomposed into simple steps? If the goal is ambiguous or requires dynamic decision‑making across changing conditions, an agent may help. For straightforward or repetitive tasks, a single LLM call or scripted workflow might suffice.

- Value: Does the automation justify the increased token cost and latency of an agent? If the time saved or efficiency gained has high business value, the extra complexity may be worthwhile.

- Doability / viability: Are all sub‑tasks actually doable with the tools and data you can provide? De‑risk critical capabilities first and ensure the agent has the means to complete its tasks.

- Cost of error & error discovery: How high are the stakes if the agent makes a mistake? If errors are costly or hard to detect, start with human‑in‑the‑loop or read‑only agents.

Ross Stevenson’s framework for learning and development teams echoes these principles: avoid building agents unnecessarily and “don’t go after a fly with a bazooka”. His simple decision tree suggests:

- If the task is simple or infrequent, do it manually.

- If the task is repetitive, rule‑based and easily codified, build a workflow or automation script.

- Use an AI agent only when there is a clear goal with ambiguous steps, significant cognitive load or the need to react to changing conditions.
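The decision tree above, combined with the cost-of-error criterion from the earlier checklist, can be written down as a small function. This is a simplified sketch: the field names, the order of checks and the conservative fallback are assumptions made for illustration, not part of Stevenson's framework.

```python
from dataclasses import dataclass


@dataclass
class Task:
    simple_or_infrequent: bool
    rule_based: bool              # repetitive and easily codified
    ambiguous_or_changing: bool   # clear goal, unclear steps or shifting conditions
    costly_errors: bool = False   # mistakes are expensive or hard to detect


def recommend(task: Task) -> str:
    """Return the lightest approach that fits the task."""
    if task.simple_or_infrequent:
        return "manual"
    if task.rule_based:
        return "workflow"
    if task.ambiguous_or_changing:
        if task.costly_errors:
            return "agent with human-in-the-loop"
        return "agent"
    # Assumed fallback: when nothing clearly calls for automation, stay manual.
    return "manual"


if __name__ == "__main__":
    print(recommend(Task(False, False, True, costly_errors=True)))
```

Checking the cheaper options first reflects the article's advice: an agent is the last resort, not the default.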

For startups, this means beginning with lean solutions, such as chatbots or conditional scripts, until customer demand or operational complexity warrants an autonomous agent. Building an agent too early can waste resources, while delaying may stall growth. Evaluate each use case with the criteria above and avoid hype‑driven adoption.

Real‑world applications and use cases

AI agents are already transforming multiple industries. Below are representative use cases, illustrating both single‑agent and multi‑agent implementations.

IT workflow automation and incident response

In IT service management, AI agents streamline repetitive tasks and respond to incidents faster than human teams. Agents can automate ticket routing, identify and triage incidents, create dynamic schedules and execute remediation protocols. An agent might continuously monitor logs for anomalies, trigger alerts, collect diagnostic data and apply fixes without human intervention. In multi‑agent systems, one agent monitors network health while another handles endpoint remediation; an orchestration agent coordinates them.

Device monitoring and predictive maintenance

Agents excel at monitoring devices and predicting failures. Agents scan network traffic, analyse resource consumption and flag irregularities. Predictive maintenance agents use machine data and sensor readings to forecast equipment failures and schedule repairs. In manufacturing, an autonomous agent can predict when a machine will break, order replacement parts and schedule maintenance, reducing downtime. MAS can manage fleets of devices across geographical locations.

HR onboarding and employee support

Agents can automate HR workflows such as onboarding, benefits enrollment and policy guidance. A new employee can chat with an HR agent to complete forms, order equipment and schedule orientation. If the question is too complex, the agent transfers the session to a human. Agents can also answer policy questions, update personal data and issue reminders about mandatory training, 24 hours a day.

Marketing and advertising optimisation

Agents unlock new levels of automation in marketing. A budget pacer agent automatically reallocates ad budgets across channels in response to performance. In a multi‑agent marketing system, one agent handles creative generation, another optimises targeting and a third manages bidding. These agents collaborate to adjust strategies in real time and experiment with new channels, enabling continuous optimisation without manual intervention. Because they learn from results, they evolve strategies faster than human marketers.
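As a rough illustration of what a budget pacer's reallocation step might look like, the sketch below splits a fixed budget across channels in proportion to performance while guaranteeing each channel a minimum share. The metric (return on ad spend), the `floor_share` parameter and the channel names are assumptions chosen for the example; an actual pacer would pull live metrics and apply platform-specific constraints.

```python
def reallocate(
    total_budget: float,
    roas: dict[str, float],
    floor_share: float = 0.1,
) -> dict[str, float]:
    """Give every channel a floor, then split the rest in proportion to ROAS."""
    floor = total_budget * floor_share
    remaining = total_budget - floor * len(roas)
    if remaining < 0:
        raise ValueError("floor_share is too high for this many channels")
    total_roas = sum(roas.values())
    if total_roas == 0:
        # No performance signal yet: fall back to an even split.
        return {channel: round(total_budget / len(roas), 2) for channel in roas}
    return {
        channel: round(floor + remaining * value / total_roas, 2)
        for channel, value in roas.items()
    }


if __name__ == "__main__":
    print(reallocate(1000.0, {"search": 3.0, "social": 1.0}))
```

The floor keeps weaker channels funded enough to keep generating data, so the agent can notice if their performance recovers.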

Customer support and service desk automation

Service desk agents answer common IT or HR questions, reset passwords and fix software issues. Agents reduce ticket resolution times by triaging requests, using NLP to classify issues and generating responses. More complex inquiries are routed to human experts. In agentic AI systems, multiple agents handle different categories (e.g., hardware, software, access), while an orchestration layer prioritises tasks and ensures consistent communication.

Healthcare and diagnostic support

AI agents show promise in healthcare, where they can interpret medical images, summarise patient histories and suggest diagnoses. Agents could combine MRI images, lab results and patient history to assist treatment planning. However, high stakes require rigorous oversight and regulation; such agents should remain under physician supervision until safety is proven.

Finance, compliance and fraud detection

Financial institutions use agents for fraud detection, risk assessment and regulatory reporting. Agents monitor transaction streams for anomalies, cross‑reference patterns against known fraud signatures and flag suspicious activity for human review. They can also assist with know‑your‑customer (KYC) tasks, verifying documents and populating compliance forms. Multi‑agent systems coordinate risk models, compliance checks and customer service tasks to maintain efficiency and accuracy.
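One simple way to picture the anomaly-monitoring step is a z-score check against an account's recent history. The sketch below is deliberately naive and rests on assumptions (a single numeric feature, a threshold of three standard deviations); real fraud systems combine many signals and known fraud signatures, and route flagged items to human review as described above.

```python
from statistics import mean, stdev


def flag_anomalies(
    history: list[float],
    new_amounts: list[float],
    z_threshold: float = 3.0,
) -> list[float]:
    """Return new amounts that deviate strongly from the historical pattern."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least 2 values
    if sigma == 0:
        # All historical amounts identical: flag anything that differs.
        return [amount for amount in new_amounts if amount != mu]
    return [
        amount
        for amount in new_amounts
        if abs(amount - mu) / sigma > z_threshold
    ]


if __name__ == "__main__":
    past = [20, 25, 22, 24, 21, 23, 26, 19]
    print(flag_anomalies(past, [24, 500, 18]))
```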


Industry‑specific (vertical) agents

We can expect a rise of vertical AI agents tailored to specific industries. Examples include legal document drafting agents, real‑estate listing optimisers and scientific research assistants. Because vertical agents embed domain knowledge and specialised tools, they deliver high value in targeted contexts. Startups in niche markets can leverage vertical agents to differentiate their product offerings, but should ensure clear scope and rigorous testing.

Intersog’s Deana AI offers a practical illustration of how an AI assistant can deliver business value. Deana AI is a virtual assistant built with GPT‑3 and custom NLP models that interacts via email and messengers. It can update schedules, send meeting invites, track expenses by parsing receipts, manage files, search information, use maps and summarise documents. The assistant learns user behaviour patterns through machine learning and predictive analytics, becoming more personalised over time.

Development best practices for building AI agents

Adopting AI agents requires careful engineering and governance. Key points include:

- Document processes and provide clear SOPs. Agents are not employees; they need explicit instructions and well‑defined inputs. Documented processes help ensure consistent performance and reduce hallucinations.

- Start simple, less is more. Begin with a single agent that solves a well‑scoped problem; avoid deploying overlapping agents that confuse one another. Expand strategically after validating the first agent.

- Focus on tool building. Approximately 70 % of development effort should go into building and refining the tools an agent uses. Each agent should have 4–6 tools; too many tools slow reasoning and increase confusion.

- Master prompt engineering. Craft system prompts with clear instructions, context and examples. Use retrieval to provide relevant data and guardrails like Pydantic validators or regex checks to catch hallucinations.

- Implement safety mechanisms. Validate tool outputs, restrict sensitive actions (e.g., financial transfers) and include human‑in‑the‑loop approval for high‑risk operations. This mitigates the risk of errors or malicious use.

- Iterate and monitor. Development should be iterative: divide complex workflows into manageable components, test early and refine. Monitor agent performance, identify failure points and adjust prompts or tools.

- Plan for vertical and multi‑agent expansion. Once a single agent is stable, consider adding specialised agents or adopting a multi‑agent architecture. Remember that vertical agents require deep domain knowledge and additional safety layers.
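The guardrail and safety points in this list can be combined into a small gate that every proposed tool call passes through before execution. The sketch below uses only the standard library as a stand-in for Pydantic-style validation; the action names, the email pattern and the transfer limit are hypothetical values chosen for the example.

```python
import re
from dataclasses import dataclass
from typing import Any

# Assumed policy: these actions always need a human sign-off.
SENSITIVE_ACTIONS = {"transfer_funds"}
# Deliberately loose pattern, enough to catch obviously malformed addresses.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MAX_TRANSFER = 10_000  # hypothetical limit


@dataclass
class ProposedAction:
    name: str
    args: dict[str, Any]


def validate(action: ProposedAction) -> list[str]:
    """Return a list of problems; an empty list means the action is well-formed."""
    errors: list[str] = []
    if action.name == "send_email":
        if not EMAIL_RE.match(str(action.args.get("to", ""))):
            errors.append("invalid recipient address")
    if action.name == "transfer_funds":
        amount = action.args.get("amount", 0)
        if not 0 < amount <= MAX_TRANSFER:
            errors.append("amount out of allowed range")
    return errors


def gate(action: ProposedAction, approved_by_human: bool = False) -> str:
    """Reject malformed actions and hold sensitive ones for approval."""
    errors = validate(action)
    if errors:
        return "rejected: " + "; ".join(errors)
    if action.name in SENSITIVE_ACTIONS and not approved_by_human:
        return "pending human approval"
    return "allowed"


if __name__ == "__main__":
    print(gate(ProposedAction("transfer_funds", {"amount": 500})))
```

Validation runs before the approval check so that a human reviewer only sees actions that are already well-formed.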

Beyond these technical tips, organisations should consider ethical implications (privacy, fairness, transparency) and comply with regulations. When integrating third‑party tools, ensure data security and governance. The LLM’s context window is limited, so think like the agent and provide only the most essential information. Regularly evaluate whether an agent is still needed or if a simpler automation could replace it, keeping costs and latency in check.
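One common way to respect a limited context window is to keep only the most recent messages that fit a budget. The sketch below approximates tokens with whitespace-separated words, which is an assumption made for simplicity; a real implementation would use the model's own tokenizer and might summarise older messages instead of dropping them.

```python
def trim_history(messages: list[str], max_words: int) -> list[str]:
    """Keep the newest messages whose combined word count fits the budget."""
    kept: list[str] = []
    used = 0
    # Walk backwards so the most recent context is preserved first.
    for message in reversed(messages):
        size = len(message.split())
        if used + size > max_words:
            break
        kept.append(message)
        used += size
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = ["a b c", "d e", "f g h i"]
    print(trim_history(history, max_words=6))
```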

Conclusion: adopting AI agents thoughtfully

AI agents are poised to reshape business operations by 2025. Unlike chatbots, assistants, and copilots, they are fundamentally autonomous, proactive, and goal-oriented. Agentic AI refers to the broader field of multi-agent systems capable of complex, self-directed behavior. AI agents are specific tools that perform tasks within defined scopes. Organizations should assess task complexity, value, feasibility, and error impact to decide when to use AI agents, following the principle of not using a bazooka to kill a fly. Use chatbots or simple workflows for straightforward tasks and reserve agents for high-value, multi-step problems.

Real-world applications span IT service management, device monitoring, human resources, marketing, healthcare, and finance. Intersog’s Deana AI demonstrates how a well-designed assistant can save time and boost productivity. Sound development practices (clear documentation, minimal toolkits, strong prompt engineering, safety checks, and iterative refinement) are essential to building reliable agents. As vertical agents and multi-agent systems become more prevalent, teams must balance innovation with governance and ethical considerations.

For startups and enterprises considering AI agents in 2025, the message is clear: adopt intentionally. Invest in autonomous AI software development solutions where they deliver real value. Understand their inner workings and limitations. Build responsibly. This thoughtful approach will unlock the full potential of AI agents while avoiding the pitfalls of hype and over-automation.
